📆 ThursdAI - Sep 18 - GPT-5-Codex, OAI wins ICPC, Reve, ARC-AGI SOTA Interview, Meta AI Glasses & more AI news

Hey folks,

What an absolutely packed week! It started with yet another crazy model release from OpenAI, but they didn't stop there: they also announced GPT-5 winning the ICPC coding competition with 12/12 questions answered, which is apparently really, really hard!

Meanwhile, Zuck took the Meta Connect '25 stage and announced a new set of Meta glasses with a display! On the open source front, we yet again got multiple tiny models doing DeepResearch and image understanding better than much larger foundation models.

Also, today I interviewed Jeremy Berman, who topped ARC-AGI with a 79.6% score and some crazy Grok 4 prompts. We also covered a new image editing experience called Reve, a new world model and a BUNCH more! So let's dive in! As always, all the releases, links and resources are at the end of the article.

ThursdAI - Recaps of the most high signal AI weekly spaces is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

Table of Contents

* Codex comes full circle with GPT-5-Codex agentic finetune

* Meta Connect 25 - The new Meta Glasses with Display & a neural control interface

* Jeremy Berman: Beating frontier labs to SOTA score on ARC-AGI

* This Week’s Buzz: Weave inside W&B models—RL just got x-ray vision

* Perceptron Isaac 0.1 - 2B model that points better than GPT

* Tongyi DeepResearch: A3B open-source web agent claims parity with OpenAI Deep Research

* Reve launches a 4-in-1 AI visual platform taking on Nano 🍌 and Seedream

* Ray3: Luma’s “reasoning” video model with native HDR, Draft Mode, and Hi‑Fi mastering

* World models are getting closer - Worldlabs announced Marble

* Google puts Gemini in Chrome

Codex comes full circle with GPT-5-Codex agentic finetune (X, OpenAI Blog)

My personal highlight of the week was definitely the release of GPT-5-Codex. I feel like we've come full circle here. I remember when OpenAI first launched a separate, fine-tuned model for coding called Codex, way back in the GPT-3 days. Now, they've done it again, taking their flagship GPT-5 model and creating a specialized version for agentic coding, and the results are just staggering.

This isn't just a minor improvement. During their internal testing, OpenAI saw GPT-5-Codex work independently for more than seven hours at a time on large, complex tasks—iterating on its code, fixing test failures, and ultimately delivering a successful implementation. Seven hours! That's an agent that can take on a significant chunk of work while you're sleeping. It's also incredibly efficient, using 93% fewer tokens than the base GPT-5 on simpler tasks, while thinking for longer on the really difficult problems.

The model is now integrated everywhere: the Codex CLI (just npm install -g @openai/codex), VS Code extension, web playground, and yes, even your iPhone. At OpenAI, Codex now reviews the vast majority of their PRs, catching hundreds of issues daily before humans even look at them. Talk about eating your own dog food!

Other OpenAI updates from this week

While Codex was the highlight, OpenAI (and Google) also took part in, and obliterated, one of the world’s hardest algorithmic competitions, the ICPC. OpenAI used GPT-5 and an unreleased reasoning model to solve 12/12 questions in under 5 hours.

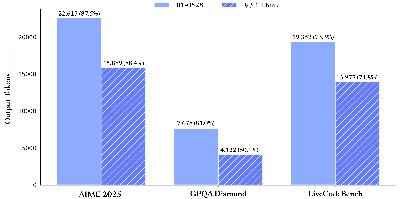

OpenAI and NBER also released an incredible report on how over 700M people use ChatGPT on a weekly basis, with a lot of insights that are summed up in this incredible graph:

Meta Connect 25 - The new Meta Glasses with Display & a neural control interface

Just when we thought the week couldn't get any crazier, Zuck took the stage for their annual Meta Connect conference and dropped a bombshell. They announced a new generation of their Ray-Ban smart glasses that include a built-in, high-resolution display you can't see from the outside. This isn't just an incremental update; this feels like the arrival of a new category of device. We've had the computer, then the mobile phone, and now we have smart glasses with a display.

The way you interact with them is just as futuristic. They come with a "neural band" worn on the wrist that reads myoelectric signals from your muscles, allowing you to control the interface silently just by moving your fingers. Zuck's live demo, where he walked from his trailer onto the stage while taking messages and playing music, was one hell of a way to introduce a product.

This is how Meta plans to bring its superintelligence into the physical world. You'll wear these glasses, talk to the AI, and see the output directly in your field of view. They showed off live translation with subtitles appearing under the person you're talking to and an agentic AI that can perform research tasks and notify you when it's done. It's an absolutely mind-blowing vision for the future, and at $799, shipping in a week, it's going to be accessible to a lot of people. I've already signed up for a demo.

Jeremy Berman: Beating frontier labs to SOTA score on ARC-AGI

We had the privilege of chatting with Jeremy Berman, who just achieved SOTA on the notoriously difficult ARC-AGI benchmark using (checks notes)... Grok 4! 🚀

He walked us through his innovative approach, which ditches Python scripts in favor of flexible "natural language programs" and uses a program-synthesis outer loop with test-time adaptation. Incredibly, his method achieved these top scores at 1/25th the cost of previous systems.

This is huge because ARC-AGI tests for true general intelligence - solving problems the model has never seen before. The chat with Jeremy is very insightful, available on the podcast starting at 01:11:00 so don't miss it!
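To make the "outer loop" idea a bit more concrete, here's a toy sketch of a generic propose, score, refine search over plain-English instructions. To be clear, this is NOT Jeremy's code: in a real system the propose, revise and execute steps would be model calls, and here they're replaced with tiny hypothetical stand-ins (toy_propose, toy_revise, toy_execute) so the loop runs on its own. The only point is to show how candidates get scored against the task's own examples and the best ones get refined.

```python
from typing import Callable

Grid = list[list[int]]
Example = tuple[Grid, Grid]  # (input grid, expected output grid)


def accuracy(program: str, examples: list[Example],
             execute: Callable[[str, Grid], Grid]) -> float:
    """Fraction of training examples that the instruction text reproduces."""
    hits = sum(execute(program, x) == y for x, y in examples)
    return hits / len(examples)


def search(examples, propose, revise, execute, rounds=3, keep=2) -> str:
    """Propose candidates, score them on the task's examples, refine the best."""
    pool = propose(examples)
    for _ in range(rounds):
        pool.sort(key=lambda p: accuracy(p, examples, execute), reverse=True)
        if accuracy(pool[0], examples, execute) == 1.0:
            break  # a candidate already explains every training example
        survivors = pool[:keep]
        pool = survivors + [revise(p, examples) for p in survivors]
    return max(pool, key=lambda p: accuracy(p, examples, execute))


# --- Toy stand-ins for the model calls (hypothetical, illustration only) ---
TOY_OPS = {
    "copy the grid unchanged": lambda g: [row[:] for row in g],
    "flip the grid top to bottom": lambda g: [row[:] for row in reversed(g)],
    "mirror each row left to right": lambda g: [row[::-1] for row in g],
}
ORDER = list(TOY_OPS)


def toy_execute(program: str, grid: Grid) -> Grid:
    # Stand-in for "ask a model to follow these instructions on this grid".
    return TOY_OPS[program](grid)


def toy_propose(examples: list[Example]) -> list[str]:
    # Stand-in for "ask a model for initial candidate instructions".
    return ORDER[:2]


def toy_revise(program: str, examples: list[Example]) -> str:
    # Stand-in for "ask a model to revise instructions given their failures".
    return ORDER[(ORDER.index(program) + 1) % len(ORDER)]


train = [
    ([[1, 2], [3, 4]], [[2, 1], [4, 3]]),
    ([[5, 0, 0]], [[0, 0, 5]]),
]
best = search(train, toy_propose, toy_revise, toy_execute)
print(best)
```

In this toy run neither starting candidate fits the examples, so the loop revises them and lands on the mirroring instruction in the second round. For the real method (and the actual prompts), listen to the interview.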

This Week’s Buzz: Weave inside W&B models—RL just got x-ray vision

You know how every RL project produces a mountain of rollouts that you end up spelunking through with grep? We just banished that misery. Weave tracing now lives natively inside every W&B Workspace run. Wrap your training-step and rollout functions in @weave.op, call weave.init(), and your traces appear alongside loss curves in real time. I can click a spike, jump straight to the exact conversation that tanked the reward, and diagnose hallucinations without leaving the dashboard. If you’re doing any agentic RL, please go treat yourself. Docs: https://weave-docs.wandb.ai/guides/tools/weave-in-workspaces
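If you want a feel for what that wiring looks like, here's a minimal sketch. The rollout and training_step functions, the toy reward, and the project name "rl-tracing-demo" are all made up for illustration. The only Weave calls used are weave.op and weave.init. I'm assuming you're logged in to W&B before calling weave.init, and any extra setup the workspace integration needs is in the docs linked above, not shown here. The sketch falls back to a no-op decorator when Weave isn't installed, so it runs either way.

```python
import os

try:
    import weave  # pip install weave
    op = weave.op
except ImportError:
    weave = None

    def op(fn):
        """No-op stand-in so the sketch still runs without Weave installed."""
        return fn


@op
def rollout(prompt: str) -> dict:
    """One episode: produce a response and score it (toy policy and reward)."""
    response = prompt.upper()  # placeholder for the policy's generation
    reward = 1.0 if "PLEASE" in response else 0.0  # placeholder reward
    return {"prompt": prompt, "response": response, "reward": reward}


@op
def training_step(step: int, prompts: list[str]) -> dict:
    """Run a batch of rollouts and summarize the reward for this step."""
    rollouts = [rollout(p) for p in prompts]
    mean_reward = sum(r["reward"] for r in rollouts) / len(rollouts)
    return {"step": step, "mean_reward": mean_reward}


# Only turn tracing on when Weave is available and credentials are present.
if weave is not None and os.environ.get("WANDB_API_KEY"):
    weave.init("rl-tracing-demo")  # hypothetical project name

metrics = training_step(0, ["please sort this list", "sort this list"])
print(metrics)
```

Because rollout is called from inside training_step, each rollout should show up nested under its training step in the trace, which is what makes the "click a spike, find the bad conversation" workflow possible.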

Open Source

Open source did NOT disappoint this week either: we've had multiple tiny models beating the giants at specific tasks!

Perceptron Isaac 0.1 - 2B model that points better than GPT (X, HF, Blog)

One of the most impressive demos of the week came from a new lab, Perceptron AI. They released Isaac 0.1, a tiny 2 billion parameter "perceptive-language" model. This model is designed for visual grounding and localization, meaning you can ask it to find things in an image and it will point them out. During the show, we gave it a photo of my kid's Harry Potter alphabet poster and asked it to "find the spell that turns off the light."

Not only did it correctly identify "Nox," but it drew a box around it on the poster. This little 2B model is doing things that even huge models like GPT-4o and Claude Opus can't, and it's completely open source. Absolutely wild.

Moondream 3 preview - grounded vision reasoning 9B MoE (2B active) (<a href="https://x.com/vikhyatk/status/19