Five Orders of Magnitude: Analog Gain Cells Slash Energy and Latency for Ultra-Fast LLMs

Description

In this episode, we explore an innovative approach to overcoming the notorious energy and latency bottlenecks plaguing modern Large Language Models (LLMs).

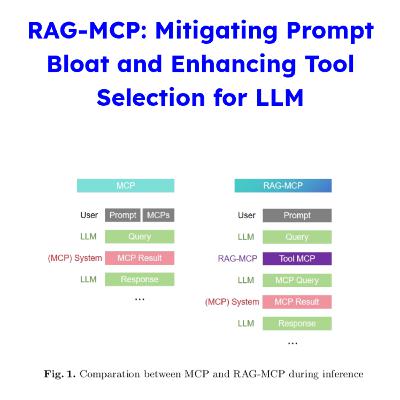

Generative LLMs are built on Transformer networks, whose self-attention mechanism reads and updates a large Key-Value (KV) cache at every generated token. On conventional Graphics Processing Units (GPUs), loading this KV-cache from High Bandwidth Memory (HBM) into on-chip SRAM is a major bottleneck that costs substantial energy and adds latency.

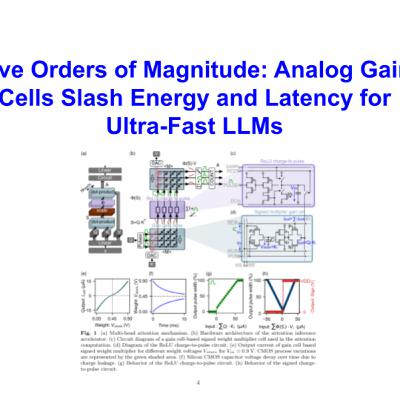
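
To make the KV-cache access pattern concrete, here is a minimal pure-Python sketch of one decoding step of conventional single-head softmax attention. It is an illustration only, not the architecture discussed in the episode; the names `dot` and `decode_step` and the toy vectors are assumptions introduced for this example.

```python
import math

def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def decode_step(q, k, v, k_cache, v_cache):
    """Attend from the newest token's query over all cached keys and values."""
    # Append the new token's key/value; earlier entries are reused, not recomputed.
    k_cache.append(k)
    v_cache.append(v)
    scale = 1.0 / math.sqrt(len(q))
    # First dot-product: one score per cached token, so every cached key is read.
    scores = [scale * dot(q, key) for key in k_cache]
    # Numerically stable softmax over the scores.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Second dot-product: weighted sum of values, so every cached value is read.
    dim = len(v)
    return [sum(w * val[i] for w, val in zip(weights, v_cache)) for i in range(dim)]

# Toy usage: the cache grows by one entry per generated token.
k_cache, v_cache = [], []
out1 = decode_step([1.0, 0.0], [1.0, 0.0], [2.0, 4.0], k_cache, v_cache)
out2 = decode_step([0.0, 1.0], [0.0, 1.0], [6.0, 8.0], k_cache, v_cache)
```

Each step touches the whole cache, so the amount of data read grows with the number of tokens already generated. On a GPU, that read is the HBM-to-SRAM transfer described above.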

We delve into a novel Analog In-Memory Computing (IMC) architecture designed specifically to perform the attention computation far more efficiently.

Key Breakthroughs and Results:

- Gain Cells for KV-Cache: The architecture utilizes emerging charge-based gain cells to store token projections (the KV-cache) and execute parallel analog dot-product computations necessary for self-attention. These gain cells enable non-destructive read operations and support highly parallel IMC computations.

- Massive Efficiency Gains: This custom hardware delivers transformative performance improvements compared to GPUs. It reduces attention latency by up to two orders of magnitude and energy consumption by up to five orders of magnitude. Specifically, the architecture achieves a speedup of up to 7,000x compared to an Nvidia Jetson Nano and an energy reduction of up to 90,000x compared to an Nvidia RTX 4090 for the attention mechanism. The total attention latency for processing one token is estimated at just 65 ns.

- Hardware-Algorithm Co-Design: Analog circuits introduce non-idealities such as non-linear multiplication, and the design uses a ReLU activation in place of the conventional softmax. To keep pre-trained models usable, the researchers developed a software-to-hardware adaptation methodology: an algorithm that maps the weights of pre-trained software models (such as GPT-2) onto the non-linear hardware, so the model reaches comparable accuracy without being trained from scratch.

- Analog Efficiency: The design uses charge-to-pulse circuits to perform two dot-products, scaling, and activation entirely in the analog domain, effectively avoiding power- and area-intensive Analog-to-Digital Converters (ADCs).
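
The "two dot-products, scaling, and activation" pipeline with ReLU in place of softmax can be sketched in software as follows. This is an idealized digital illustration under stated assumptions: the `1/sqrt(d)` scaling factor and the function name `relu_attention` are choices made for this example, and the analog non-idealities (such as non-linear multiplication) are not modelled.

```python
import math

def dot(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def relu_attention(q, k_cache, v_cache):
    """Single-head attention with ReLU replacing softmax (idealized sketch)."""
    # Assumed scaling factor; the hardware's actual scaling may differ.
    scale = 1.0 / math.sqrt(len(q))
    # First dot-product, scaling, then ReLU: negative scores are clipped to zero.
    # Unlike softmax, the weights are not normalized to sum to one.
    weights = [max(0.0, scale * dot(q, key)) for key in k_cache]
    # Second dot-product: weighted sum of the cached values.
    dim = len(v_cache[0])
    return [sum(w * val[i] for w, val in zip(weights, v_cache)) for i in range(dim)]

# Toy usage: the second key is anti-aligned with the query, so ReLU zeroes it out.
q = [2.0, 0.0, 0.0, 0.0]
k_cache = [[1.0, 0.0, 0.0, 0.0], [-1.0, 0.0, 0.0, 0.0]]
v_cache = [[1.0, 2.0], [10.0, 20.0]]
out = relu_attention(q, k_cache, v_cache)  # → [1.0, 2.0]
```

Because ReLU needs no exponentials and no normalization over all tokens, each score can be processed independently, which is one plausible reason it suits a pipeline that stays in the analog domain.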

The proposed architecture marks a significant step toward ultra-fast, low-power generative Transformers and demonstrates the promise of IMC with volatile, low-power memory for attention-based neural networks.