From Smallpox Scabs to mRNA Shots

Description

Remember last week's story about those four guys at the bar who thought mRNA vaccines appeared out of nowhere? They left that night knowing the technology was 40 years old. But there was a question I couldn't fully answer with a beer in my hand: "If we already had vaccines that worked, why didn't we just stick with those older types of vaccines for COVID-19?"

It's a fair question. And answering it requires opening up the entire vaccine toolbox, from the methods our ancestors used (yes, we're talking smallpox scabs) to the genetic blueprints we're writing today.

My brilliant colleagues, Dr. Nancy Haigwood, Izzy Brandstetter Figueroa, and Dr. Elana Pearl Ben-Joseph, helped me dig deeper into these questions. Because understanding our vaccine options isn't just academic. It's practical knowledge you need when your uncle starts talking about being a guinea pig at Thanksgiving dinner. Let’s discuss…

A Success Story Written in Survival

Vaccines are undoubtedly one of the greatest successes to emerge from the field of public health. The development and widespread use of vaccines in the 20th century reduced illness and death from diseases like diphtheria, mumps, pertussis, and tetanus by nearly 100%. Diseases that once killed children, like measles (which we still vaccinate against) and smallpox (which vaccines completely eradicated by 1980), no longer pose the same threat. Some vaccines, like those for measles and polio, provide long-lasting protection, while others require boosters. When was the last time you saw someone with paralytic poliomyelitis? If your answer is "never," or even better, "huh? What's that?", you have the polio vaccine to thank.

This astonishing reduction in infectious diseases did not happen overnight, nor did the development of the vaccines used to achieve these results. They are the product of years of trial and error. Each vaccine has the same ultimate goal: to stimulate an immune response that is both strong enough and specific enough to recognize and fight off a pathogen before it has the chance to make us really sick.

When someone says, "Why not use traditional vaccines instead of mRNA?" they're assuming all vaccines work the same way. They don't. Each technology was developed to solve specific problems that earlier vaccines couldn't handle.

From Stone Tools to Genetic Blueprints

Vaccines as we know them today reflect two centuries of scientific, technological, and manufacturing advances, driven by medical need and by concerns about safety.

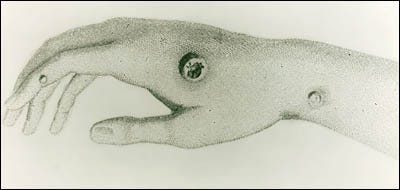

Early "vaccines" were very basic. Take smallpox, for example. Written accounts from as early as the 16th century show that healthy individuals were intentionally exposed to the smallpox virus by means such as inserting a cotton plug soaked with pus from an infected person's pustule into the nose, inhaling dry fragments of a smallpox scab, or wearing an infected person's clothing.

Not only was it pretty gross, but it was also quite risky. Sometimes people were exposed to too much live virus and developed full-blown smallpox anyway. Despite these significant risks, the practice persisted because it still offered better odds than catching smallpox naturally. The insight behind it, that a small amount of live virus could trigger an immune response without causing severe disease, became one of the first tools in our immunization toolbox.

The Evolution of Vaccine Technology

In 1796, Edward Jenner revolutionized the field by using cowpox virus to protect against smallpox, creating the first true 'vaccine.' Building on this principle, 19th-century scientists Louis Pasteur and Emile Roux discovered they could deliberately weaken (or 'attenuate') dangerous pathogens. Working with rabies, they took infected tissue from rabbits and weakened the virus enough that the immune system could recognize it without the virus being strong enough to cause rabies.

The Old Guard: Vaccines That Use Actual Viruses and Bacteria

Live-attenuated vaccines are made from a weakened form of a virus or bacterium. Their big advantage is long-lasting immunity, often lifelong, with just one or two doses. Attenuating a virus required extensive experimentation in the lab, but after years of testing in animals and humans, this approach led to safe and effective vaccines against measles, mumps, and rubella (MMR), varicella (chickenpox), and many more diseases.

These vaccines work incredibly well, which is why your childhood MMR shot still protects you. But because they contain live, weakened viruses, there's a small risk that these viruses can mutate back to a more harmful form or, in someone whose immune system can't keep them in check, cause a serious infection. That risk is greater in people with severely compromised immune systems, which is why live vaccines like MMR generally aren't recommended for them.

Inactivated vaccines emerged in the late 1800s and early 1900s. In these vaccines, a virus or bacterium is killed (inactivated) so that it can no longer cause disease but keeps enough of its outer structure to trigger a strong immune response. Early inactivated vaccines targeted plague, cholera, typhoid (caused by a Salmonella bacterium), and pertussis.

The first polio vaccine, licensed in 1955, used an inactivated virus given as a shot to safely induce immunity. Around the same time, researchers developed a live-attenuated oral polio vaccine (given as drops by mouth), which was introduced in the early 1960s. It protected against all three types of poliovirus and was much easier to administer. Today in the US, children receive only the inactivated polio vaccine (IPV); the live oral vaccine was discontinued here in 2000 because of rare cases of vaccine-derived polio. However, many countries still use the oral live-attenuated vaccine because it is easy to give and helps provide community immunity.

Note: Another early advance led to toxoid vaccines for diseases like diphtheria and tetanus (developed in the 1920s-1940s). These vaccines use inactivated bacterial toxins to trigger protective antibodies.

The Middle Generation: Just the Important Parts

As purification methods improved over the years, scientists could break a virus or bacterium apart, isolate very specific parts, and use just those parts in the vaccine. These are known as 'split' or subunit vaccines. They are considered very safe because they contain only a small piece of the pathogen and therefore cannot cause disease. They also tend to cause fewer side effects than vaccines made from whole pathogens.

Conjugate vaccines, used to prevent pneumonia and meningitis, built on this technology. In this type of vaccine, an antigen that triggers only a weak immune response on its own (usually a sugar molecule from the pathogen's outer coat) is linked to a protein molecule. This combination helps the immune system recognize and respond more effectively to the weaker antigen. The first conjugate vaccine was deployed in the 1980s, and several more became available in the 1990s and 2000s.

Recombinant DNA technology emerged around this time, and with it came the ability to make even safer vaccines with no actual virus present. This technology let vaccine developers use laboratory strains of yeast or bacteria as factories to produce just the proteins they needed. The protein is purified and then used as the vaccine's active ingredient. In 1986, the first recombinant vaccine, which protects against hepatitis B, was approved. In this vaccine, the only part of the virus present was a replica of its surface protein. The vaccine against human papillomavirus (HPV), introduced in 2006, was engineered similarly.

When someone worries about "live virus" in vaccines, you can explain that many modern vaccines (like those for hepatitis B and HPV) don't contain any virus at all, just proteins. And even the flu shot m