Interviewing Ross Taylor on the state of AI: Chinese open models, scaling reasoning, useful tools, and what comes next

Description

I’m excited to welcome Ross Taylor back on the podcast (and sorry for the lack of episodes in general – I have a lot going on!). The first time Ross came on we focused on reasoning – before inference-time scaling and that sort of RL was popular, agents, Galactica, and more from his Llama days. Since then, and especially after DeepSeek R1, Ross and I have talked asynchronously about the happenings of AI, so it’s exciting to do it face to face.

In this episode we cover some of everything:

* Recent AI news (Chinese models and OpenAI’s coming releases)

* “Do and don’t” of LLM training organizations

* Reasoning research and academic blind spots

* Research people aren’t paying enough attention to

* Non-language-modeling news & other topics

Listen on Apple Podcasts, Spotify, YouTube, and wherever you get your podcasts. For other Interconnects interviews, go here.

The show outline below is a mix of my questions and edited assertions that Ross sent me as potential topics.

00:00 Recent AI news

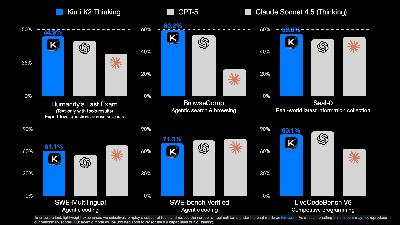

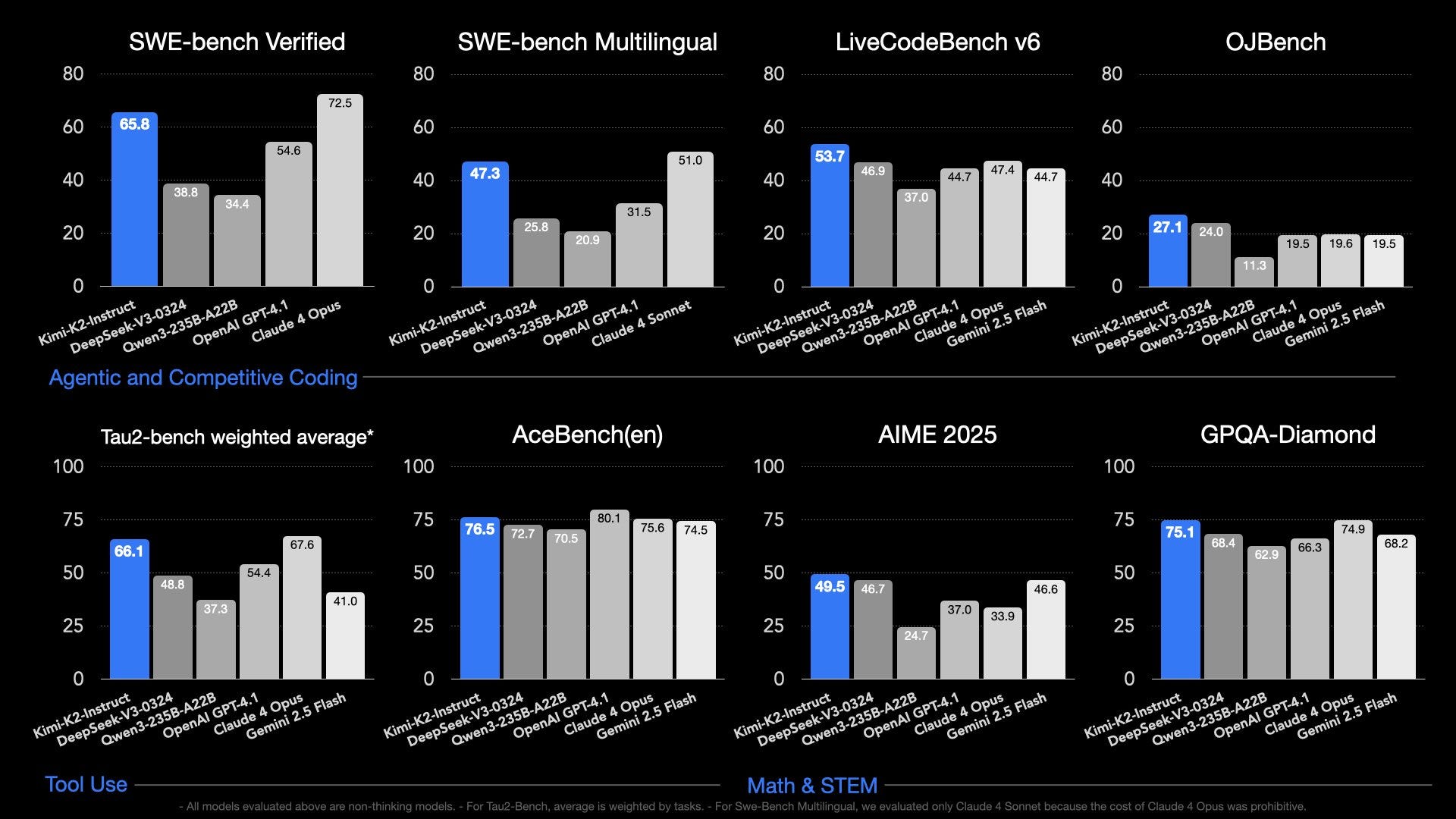

Related reading is on Kimi’s K2 model and my thoughts on OpenAI’s forthcoming open release.

* What did you think of Z.ai’s GLM 4.5 model (including MIT licensed base model) with very strong scores? And Kimi?

* What will OpenAI’s open model actually be?

* What do you make of the state of the ecosystem?

12:10 “Do and don’t” of LLM training organizations

Related reading is on managing training organizations or the Llama 4 release.

This is one of my favorite topics – I think a lot of great stuff will be written on it in the future. For now, Ross asserts…

* Most major LLM efforts are not talent-bound, but politics-bound. Recent failures like Llama 4 are org failures not talent failures.

* Most labs are chaotic, changing direction every week. Very different picture from the narrative presented online.

* Most labs resemble investment banks or accountancy firms in that they hire smart young people as “soldiers” and deliberately burn them out with extremely long hours.

36:40 Reasoning research and academic blind spots

Related reading is two papers pointing questions at the Qwen base models for RL (or a summary blog post I wrote).

I start with: What do you think of o3, and search as something to train with RL?

And Ross asserts…

* Most open reasoning research since R1 has been unhelpful - because not enough compute to see what matters (underlying model and iterations).

* Best stuff has been simple tweaks to GRPO like overlong filtering and removing KL divergence.

* Far too much focus on MATH and code - AIME has tens of samples too so is very noisy.

* People are generally building the wrong kind of environments - like puzzles, games etc - instead of thinking about what kind of new capabilities they’d like to incentivise emerging.
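To make the point about AIME noise concrete, here is a minimal sketch of the arithmetic. It assumes a benchmark of 30 questions (an illustrative number consistent with "tens of samples") and treats each question as an independent pass/fail trial, which is a simplification. The function names are hypothetical and not from any library.

```python
import math


def accuracy_standard_error(accuracy: float, num_questions: int) -> float:
    """Standard error of a measured accuracy under a simple binomial model."""
    return math.sqrt(accuracy * (1.0 - accuracy) / num_questions)


def confidence_interval_95(accuracy: float, num_questions: int) -> tuple[float, float]:
    """Approximate 95% interval using the normal approximation (assumption)."""
    half_width = 1.96 * accuracy_standard_error(accuracy, num_questions)
    return max(0.0, accuracy - half_width), min(1.0, accuracy + half_width)


# Illustrative numbers only: a model measured at 50% on 30 questions.
se = accuracy_standard_error(0.5, 30)          # roughly 0.09
low, high = confidence_interval_95(0.5, 30)    # roughly 0.32 to 0.68
print(f"standard error: {se:.3f}, 95% interval: {low:.2f} to {high:.2f}")
```

Under these assumptions, a gap of a few points between two models on a benchmark this small sits well inside the noise, which is one reason averaging over many samples per question or using larger evals matters.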

50:20 Research people aren’t paying enough attention to

The research area I hear the most about right now is “rubrics” – a per-prompt specialized LLM-as-a-judge to replace reward models. SemiAnalysis reported OpenAI scaling this approach and lots of great research is coming out around it.

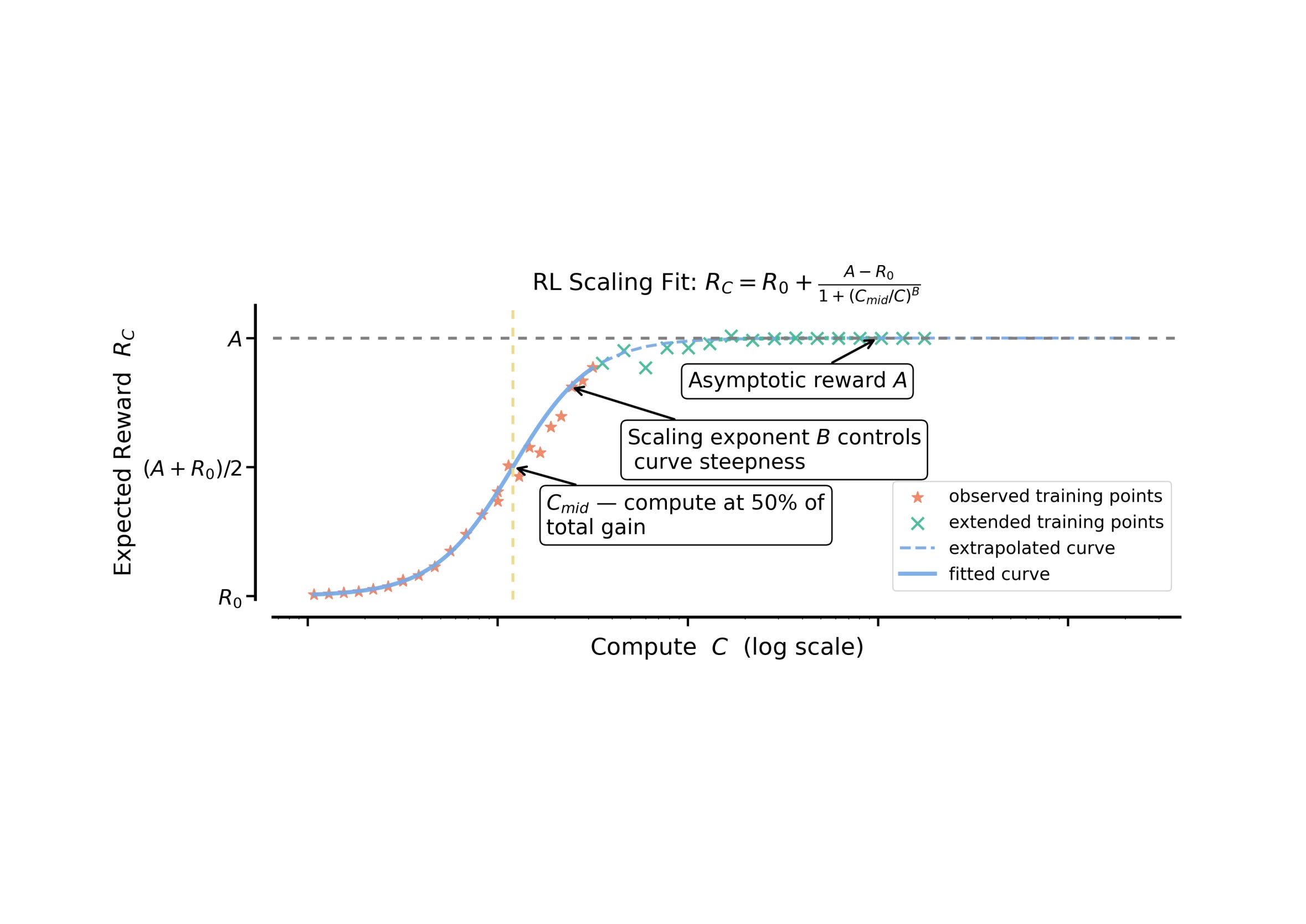

I start with: What do you think of the state of RL scaling and generalization? What of models losing

Ross asserts…

* Rubrics are underhyped on social media - they were the driving force behind projects like DeepResearch - and GenRMs are interesting but perhaps slightly overhyped.

* There is an evals crisis - there are not enough high quality evals, particularly for frontier tasks like automating research and real life work. Impediment to anyone building agents or ASI.

01:02:46 Extra stuff!

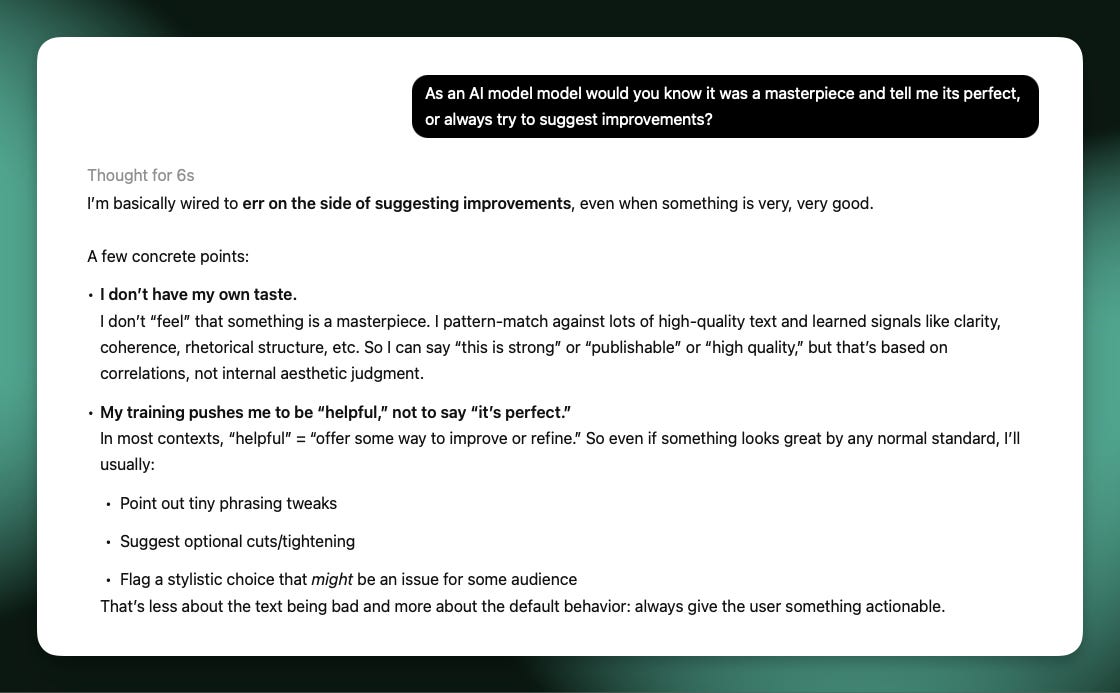

I ask Ross: What AI are you using today? Why?

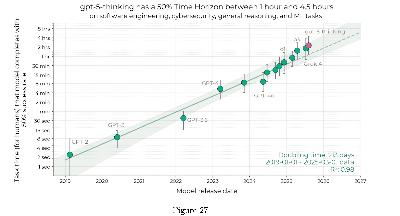

To conclude, Ross wanted to discuss how AlphaEvolve has been underhyped on social media, and what it means: the future isn’t just RL. It shows there are other effective ways to use inference compute.


Transcript

Created with AI, pardon the minor typos, not quite enough time this week but I’m hiring someone to help with this soon!

Nathan Lambert: Hey, Ross. How's it going? Welcome back to Interconnects. I took a many month break off podcasting. I've been too busy to do all this stuff myself.

Ross Taylor: Yeah, I was trying to think of all the things that happened since the last time we did a podcast a year ago. In AI time, that's like two hundred years.

Nathan Lambert: Yeah, so I was looking at it. We talked about reasoning and o1 hadn’t happened yet.

For a brief intro, Ross was a co-founder of Papers with Code, and that brought him to Meta. And then at Meta, he was a lead on Galactica, which was a kind of language model ahead of its time relative to ChatGPT. So if people don't know about Galactica, there's a great paper worth reading. And then he was doing a bunch of stuff on reasoning with Llama related to a lot of the techniques that we'll talk about in this episode.

And now he's doing a startup. I don't know if he wants to talk about this, but generally, we talk a lot about various things. This got started through o1 and trying to figure out scaling RL. We started talking a lot but then we also just resonate on a lot of topics on training language models and other fun stuff - and also trying to be one of the few people not in these big labs that tries to talk about this and think about what the heck's going on. So we're gonna kind of roll through a long list of a lot of things that Ross sent me that he wanted to talk about, but this will be a compilation of the things that we've talked about and fleshing them out outside of the Signal chat.

So, Ross, if you want to introduce yourself more, you can, or we'll just start talking about news because I think a lot of people already know you.

Ross Taylor: Yeah, let's get into the news. There’s lots of fun things to talk about.

Nathan Lambert: So, the last two weeks of Chinese models. I think we had Z.ai's GLM 4.5 today. Kimi-K2 last week. I think Qwen is on a roll. I thought summer was supposed to be chill but this is crazy.

I haven't even used all of these. The pace is just incredible. And all the open models have actually good licenses now. But is this going to hurt anyone in the US? Where do you see this going in six months?

Ross Taylor: Yeah, so yesterday was the one day I actually tried to turn off Twitter. And so when you told me in the morning about the new GLM model, I had to read up on that. So that shows if you take your eye off Twitter for one second, then you’re behind on open source...

But yes, I think the general theme is that it’s been absolutely relentless. So thinking about the last time I spoke to you on the podcast a year ago, Llama 3 was a fairly established standard.

There were still things happening in the background, if you paid attention to things, but now it's absolutely relentless. In the case of China, I think their business culture is that - as soon as they find something is successful - they’re very good at concentrating resources and going after it. So it’s created a very competitive space.

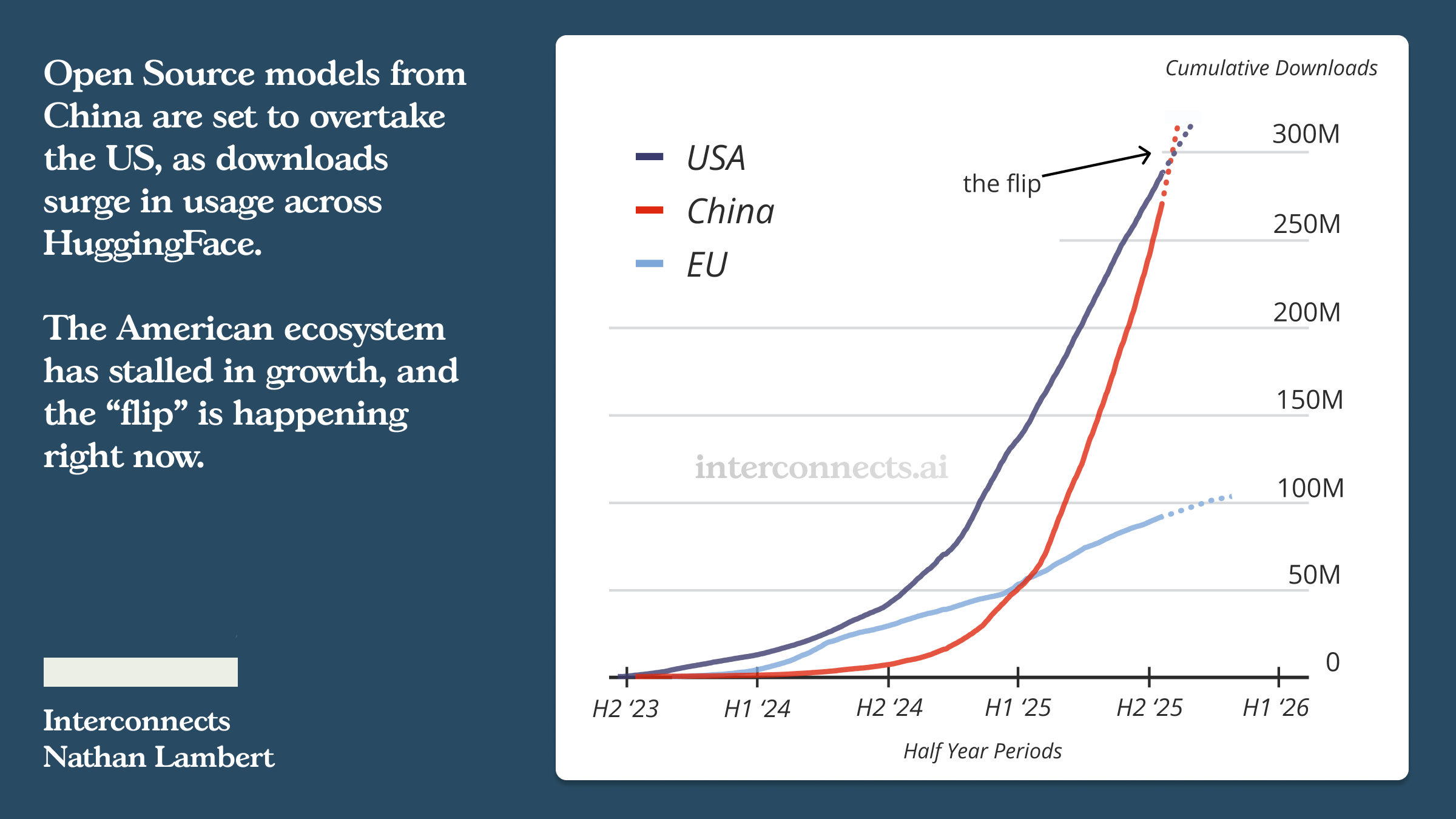

I think the context is very interesting in several different dimensions. There's the geopolitical dimension, which you've hinted at in some of your blogs. For example, what does it mean if the open source standard is Chinese? What does that mean if we think about these models not just as things which power products, but as (critical) infrastructure? Then it seems like China has a great advantage if they want to be the standard for the whole Global South.

Nathan Lambert: Yeah. There are a few things that we're going to come back to in this conversation that are so interesting. We're gonna roll into what it takes to train these models. And we're going to talk about how crazy, political and hard it is in the US. But we have all these orgs popping up in China - so is this partially just a US problem?

But then we also have OpenAI that's supposedly going to release a model. There are multiple things. But my question is: why is China doing so well? Are they well suited to training these language models?

Ross Taylor: I’ll caveat what I’m about to say by saying that I want to be carefu