LLMs + Vector Databases: Building Memory Architectures for AI Agents

Description

This story was originally published on HackerNoon at: https://hackernoon.com/llms-vector-databases-building-memory-architectures-for-ai-agents.

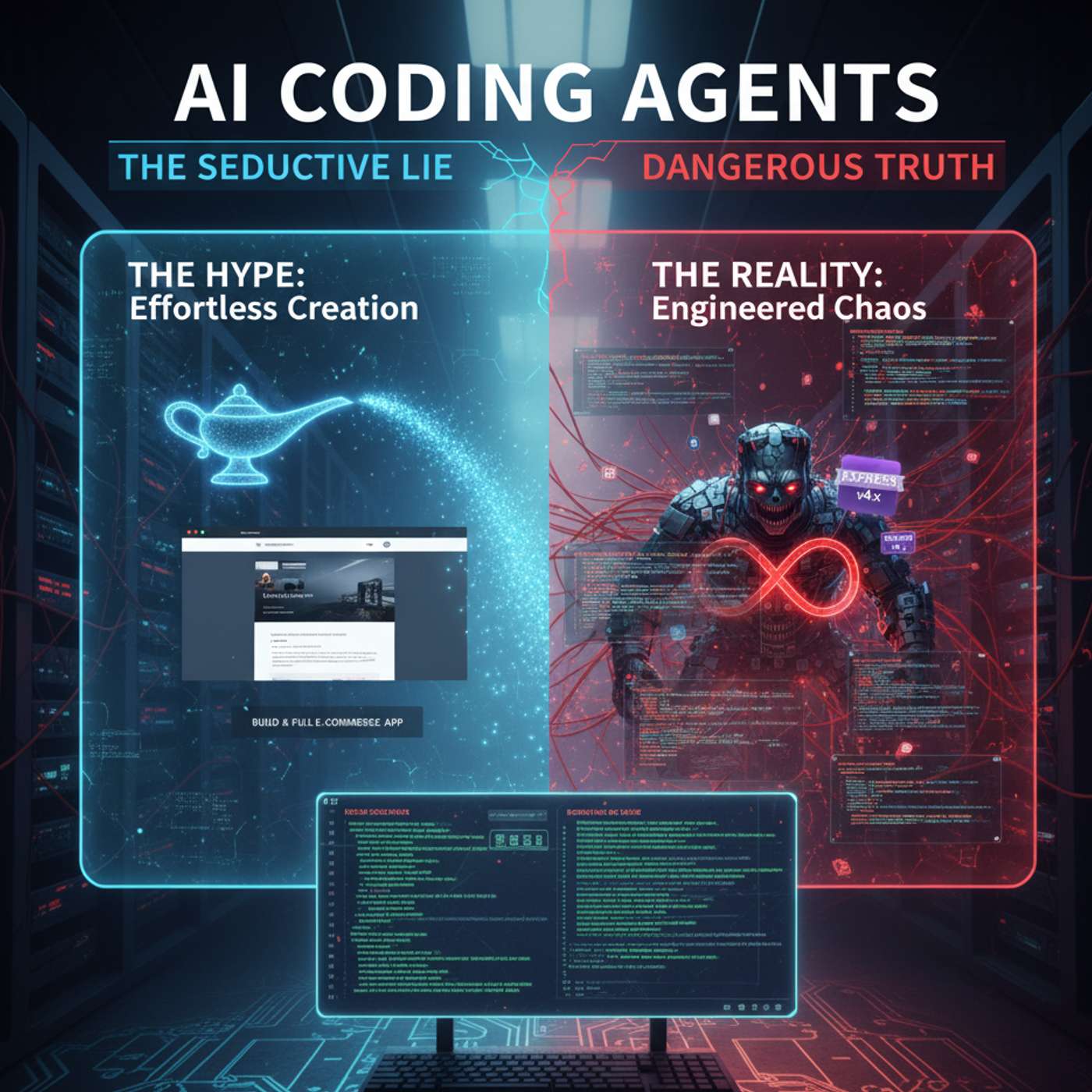

Why AI agents need vector databases and smarter memory architectures, not just bigger context windows, to handle real-world tasks like academic research.



This story was written by: @hrlanreshittu. Learn more about this writer by checking @hrlanreshittu's about page, and for more stories, please visit hackernoon.com.

GPT-4 Turbo's 128k-token context window holds roughly 96,000 words, at about 0.75 English words per token. For a research assistant working across entire academic libraries, that ceiling becomes a major barrier. The answer is smarter memory architectures, not larger context windows.
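To make the scale of that limit concrete, here is a minimal back-of-the-envelope sketch in Python. The 0.75 words-per-token ratio is simply the one implied by the figures above (96,000 / 128,000) and is only a rule of thumb for English text. The function names and the 8,000-word paper length are hypothetical values chosen for illustration, not measurements.

```python
# Rough context-window budgeting; all figures are approximations.
CONTEXT_TOKENS = 128_000   # context window size quoted above
WORDS_PER_TOKEN = 0.75     # assumed ratio implied by 96,000 / 128,000


def words_that_fit(context_tokens: int = CONTEXT_TOKENS,
                   words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in the context window."""
    return int(context_tokens * words_per_token)


def papers_that_fit(words_per_paper: int,
                    context_tokens: int = CONTEXT_TOKENS,
                    words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many whole papers of a given length fit at once."""
    return words_that_fit(context_tokens, words_per_token) // words_per_paper


if __name__ == "__main__":
    print(words_that_fit())        # estimated word budget
    print(papers_that_fit(8_000))  # hypothetical 8,000-word papers
```

Under these assumptions only about a dozen full-length papers fit in a single prompt, which is why an agent working over a whole library needs some way to retrieve only the relevant passages rather than load everything at once.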