Microsoft’s SAMBA Model Redefines Long-Context Learning for AI

Description

This story was originally published on HackerNoon at: https://hackernoon.com/microsofts-samba-model-redefines-long-context-learning-for-ai.

SAMBA combines sliding window attention with Mamba to deliver linear-time sequence modeling and context recall over millions of tokens.

This story was written by @textmodels. Learn more about this writer on @textmodels's about page, and for more stories, visit hackernoon.com.

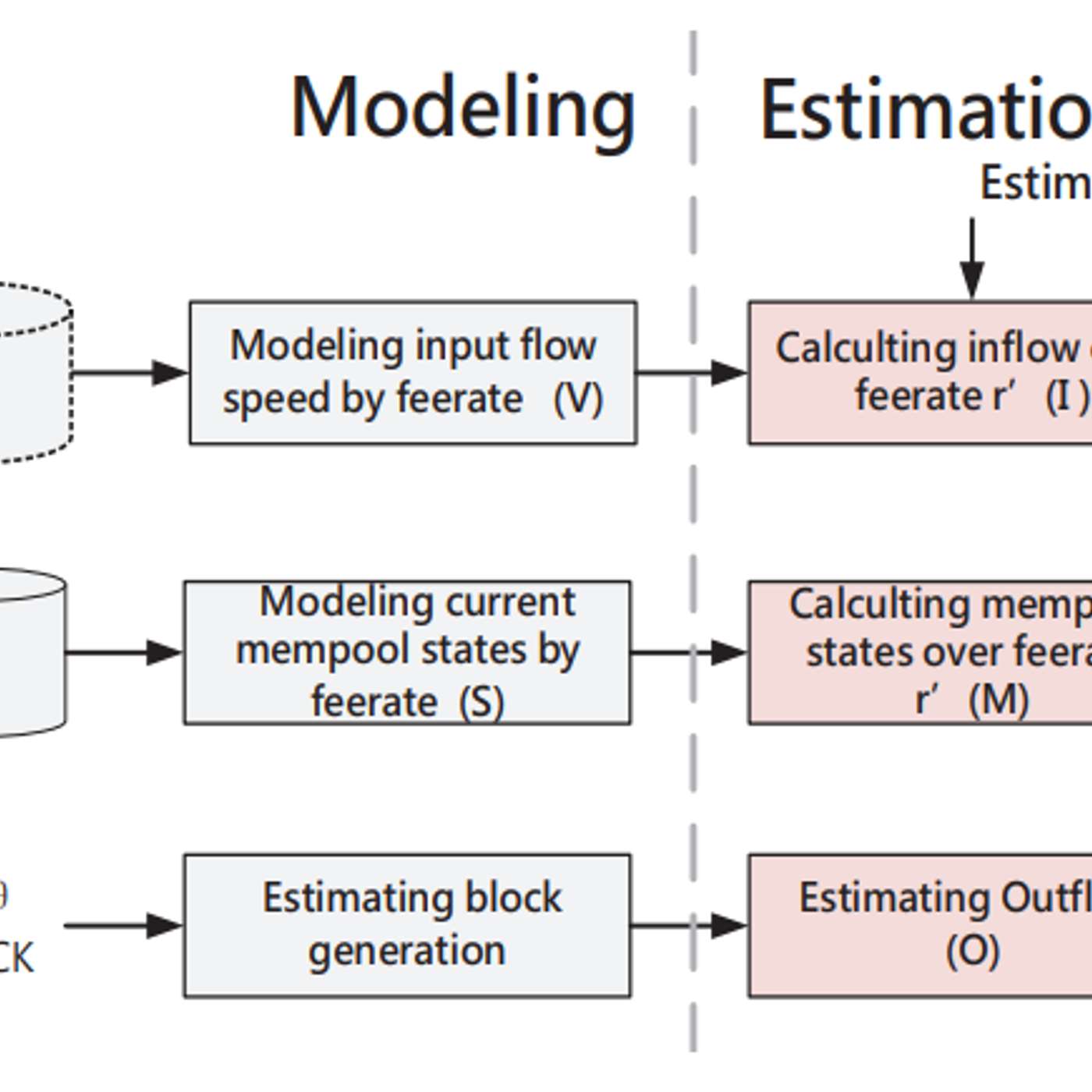

SAMBA is a hybrid neural architecture for processing very long sequences efficiently. It combines Sliding Window Attention (SWA) with Mamba, a state space model (SSM): attention supplies exact recall of tokens within its window, while Mamba's recurrent dynamics run in linear time with a fixed-size state, which gives SAMBA both speed and memory efficiency. Scaled up to 3.8 billion parameters and trained on 3.2 trillion tokens, SAMBA outperforms Transformer and pure SSM baselines on key benchmarks such as MMLU and GSM8K.
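To make this division of labor concrete, here is a toy, pure-Python sketch of a hybrid block: a gated recurrent layer followed by sliding window attention, each with a residual connection. Everything in it is an assumption for illustration, not Microsoft's implementation: the function names are hypothetical, the attention uses raw inputs as queries, keys, and values (no learned projections), the sigmoid-gated diagonal recurrence is a loose stand-in for Mamba's selective scan rather than its actual parameterization, and the layer ordering is chosen for simplicity.

```python
import math
from typing import List

Vector = List[float]


def selective_recurrence(xs: List[Vector]) -> List[Vector]:
    """Toy per-channel recurrence h_t = a_t * h_{t-1} + (1 - a_t) * x_t.

    a_t = sigmoid(x_t) makes the decay input-dependent ("selective").
    The state h has a fixed size d, so memory does not grow with length.
    """
    d = len(xs[0])
    h = [0.0] * d
    out: List[Vector] = []
    for x in xs:
        a = [1.0 / (1.0 + math.exp(-xi)) for xi in x]
        h = [ai * hi + (1.0 - ai) * xi for ai, hi, xi in zip(a, h, x)]
        out.append(list(h))
    return out


def sliding_window_attention(xs: List[Vector], window: int) -> List[Vector]:
    """Causal softmax attention where position t sees only the last `window` positions.

    Work is O(n * window * d): linear in sequence length n for a fixed window.
    """
    assert window >= 1, "window must cover at least the current token"
    d = len(xs[0])
    scale = 1.0 / math.sqrt(d)
    out: List[Vector] = []
    for t, q in enumerate(xs):
        start = max(0, t - window + 1)
        # Scaled dot-product scores against the positions inside the window.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, xs[s]))
                  for s in range(start, t + 1)]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * xs[start + j][k] for j, w in enumerate(weights)) / total
                    for k in range(d)])
    return out


def hybrid_block(xs: List[Vector], window: int) -> List[Vector]:
    """Residual recurrent layer, then residual sliding-window attention layer."""
    r = selective_recurrence(xs)
    xs = [[a + b for a, b in zip(x, y)] for x, y in zip(xs, r)]
    att = sliding_window_attention(xs, window)
    return [[a + b for a, b in zip(x, y)] for x, y in zip(xs, att)]


if __name__ == "__main__":
    seq = [[math.sin(0.3 * t), math.cos(0.2 * t)] for t in range(16)]
    out = hybrid_block(seq, window=4)
    print(len(out), len(out[0]))  # 16 2
```

Each layer touches a token a bounded number of times (the recurrence once, the attention at most `window` times), so total work grows linearly with sequence length; full attention would instead compare every pair of positions, which is quadratic.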