Quick recap on the state of reasoning

Description

In 2025 we need to disambiguate three intertwined topics: post-training, reasoning, and inference-time compute. Post-training is going to quickly become muddied with the new Reasoning Language Models (RLMs; is that a good name?), given that loss functions we studied via advancements in post-training are now being leveraged at a large scale to create new types of models.

I would not call the reinforcement learning training done for OpenAI’s o1 series of models post-training. Training o1 is large-scale RL that enables better inference-time compute and reasoning performance. Today, I focus on reasoning. Technically, language models definitely do a form of reasoning. This definition does not need to go in the direction of the AGI debate — we can clearly scope a class of behavior rather than a distribution of explicit AI capability milestones. It’ll take work to get an agreement here.

Getting some members of the community (and policymakers) to accept that language models do their own form of reasoning by outputting and manipulating intermediate tokens will take time. I enjoy Ross Taylor’s definition:

Reasoning is the process of drawing conclusions by generating inferences from observations.

This is a talk I gave at NeurIPS at the Latent Space unofficial industry track. I wanted to directly address the question of whether language models can reason and what o1 and the reinforcement finetuning (RFT) API tell us about it. It’s somewhat rambly, but it asks the high-level questions on reasoning that I haven’t written about yet and is a good summary of my coverage of o1’s implementation and the RFT API.

Thanks swyx & Alessio for having me again! You can access the slides here (e.g. if you want to access the links on them). For more on reasoning, I recommend you read/watch:

* Melanie Mitchell’s series on ARC at AI: A Guide for Thinking Humans: first, second, third, and final. And her post on reasoning proper.

* Miles Brundage’s thread summarizing the prospects of generalization.

* Ross Taylor’s (previous interview guest) recent talk on reasoning.

* The inference-time compute tag on Interconnects.

Listen on Apple Podcasts, Spotify, YouTube, and wherever you get your podcasts.

Transcript + Slides

Nathan [00:00:07]: Hey, everyone. Happy New Year. This is a quick talk that I gave at NeurIPS, at the Latent Space unofficial industry event. So swyx tried to have people talk about the major topics of the year: scaling, open models, synthetic data, agents, etc. And he asked me to fill in a quick slot on reasoning. A couple of notes. First, this was before o3 was announced by OpenAI, so I think you can take everything I said and run with it with even more enthusiasm and expect even more progress in 2025. And second, there were some recording issues, so I re-edited the slides to match up with the audio, so you might see that they're slightly off. But it mostly reads like a blog post, and it should do a good job getting the conversation started around reasoning on Interconnects in the new year. Happy New Year, and I hope you like this. Thanks.

I wouldn't say my main research area is reasoning. I would say that I came from a reinforcement learning background into language models, and reasoning is now getting subsumed into that as a method rather than an area. And a lot of this is probably transitioning these talks into more provocative forms to prime everyone for the debate, which is why most people are here. And this is called the state of reasoning. This is by no means a comprehensive survey. To start, I wanted to make sure that I was not off base in how I think about this, because there are a lot of debates on reasoning, and I wanted to revisit a very basic definition.

And this is a dictionary definition, which is "the action of thinking about something in a logical, sensible way," which is actually sufficiently vague that I would agree with it. As we'll see in a lot of this talk, I think people are going crazy about whether or not language models reason. We've seen this with AGI before, and now reasoning kind of seems like the same thing, which to me is pretty ridiculous, because reasoning is a very general skill. And I will provide more reasoning, or support, for the argument that these language models are doing some sort of reasoning when you give them problems.

I think I don't need to share a ton of examples of what are just ill-formed arguments about what language models are not doing, but it's tough that this is the case. And I think there are some very credible arguments that reasoning is a poor direction to pursue for language models, because language models are not going to be as good at it as humans. But to say that they can't do reasoning, I don't see a lot of proof for, and I'll go through a few examples. And the question is, why should language model reasoning be constrained to look like what humans do?

I think language models are very different, and they are stochastic. The stochastic parrots thing is true for many reasons, and we should embrace this. And we should continue. And I think a big trend of the year is that we're seeing new types of language model reasoning that look less human. And that can be good for separating the discourse from expecting a really narrow type of behavior.

I did an interview with Ross Taylor, who was a reasoning lead at Meta, which I thought was a very good education for me on this. And this is just a direct pull from the transcript. But essentially what it's saying is, if you do chain of thought on a language model, what it is doing is essentially outputting its intermediate steps. If I were to ask you all a math problem right now, you can do most of them in your head, and you are doing some sort of intermediate storage of variables. And language models have no ability to do this. They are kind of per-token computation devices, where each token is outputted after doing a forward pass. And within that, there's no explicit structure to hold these intermediate states. So I think embracing chain of thought and these kinds of intermediate values for the language models is extremely reasonable. And it's showing that they're doing something that actually gets to valuable outputs.
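To make the "per-token computation device" point concrete, here is a minimal sketch. It is a toy, not how any real language model works internally. The hypothetical `next_token` function stands in for a single forward pass: it keeps no memory between calls and sees only the token stream, so the running totals it writes out are its only intermediate storage. The names `next_token` and `generate` and the `|` separator are assumptions made for illustration.

```python
from typing import List


def next_token(context: List[str]) -> str:
    """Hypothetical stand-in for one forward pass.

    It is stateless: everything it knows comes from the visible tokens.
    Layout assumed here: numbers to add, a "|" separator, then the
    running totals written so far (the "chain of thought").
    """
    sep = context.index("|")
    numbers = [int(tok) for tok in context[:sep]]
    scratch = context[sep + 1:]
    if len(scratch) == len(numbers):
        return "<eos>"  # every number has been folded into the total
    running = int(scratch[-1]) if scratch else 0
    return str(running + numbers[len(scratch)])


def generate(prompt: List[str], max_steps: int = 32) -> List[str]:
    """Autoregressive loop: the token list is the only state carried forward."""
    tokens = list(prompt)
    for _ in range(max_steps):
        tok = next_token(tokens)
        if tok == "<eos>":
            break
        tokens.append(tok)
    return tokens


if __name__ == "__main__":
    # The intermediate sums 3 and 8 are written out before the answer 17.
    print(generate(["3", "5", "9", "|"]))
```

Remove the written-out running totals and this toy has nowhere to keep partial results, which is the sense in which the token stream acts as intermediate state.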

Nathan [00:04:10]: So one of the many ways that we can lead towards o1 is that language models have randomness built into them. And a lot of what people see as failures in reasoning are these language models following very static chains and making very specific mistakes along the way, with really no ability to correct for that. This is really not something that we see in human reasoning. If a human makes a mistake, they will normally catch it on the next step. But we need to handle language models differently.
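One place that randomness enters is decoding. The following is a minimal sketch, assuming standard temperature sampling over next-token logits. The talk does not specify a decoding method, and `softmax_with_temperature`, `sample_token`, and the example logits are hypothetical names and values chosen for illustration.

```python
import math
import random
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn logits into probabilities; lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits: List[float], temperature: float, rng: random.Random) -> int:
    """Return a token index: greedy when temperature <= 0, sampled otherwise."""
    if temperature <= 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
    print([sample_token(logits, 1.0, rng) for _ in range(10)])  # varies by draw
    print([sample_token(logits, 0.0, rng) for _ in range(10)])  # always index 0
```

With greedy decoding the same prompt always follows the same chain, so an early mistake is repeated every time; with sampling, different runs can take different paths.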

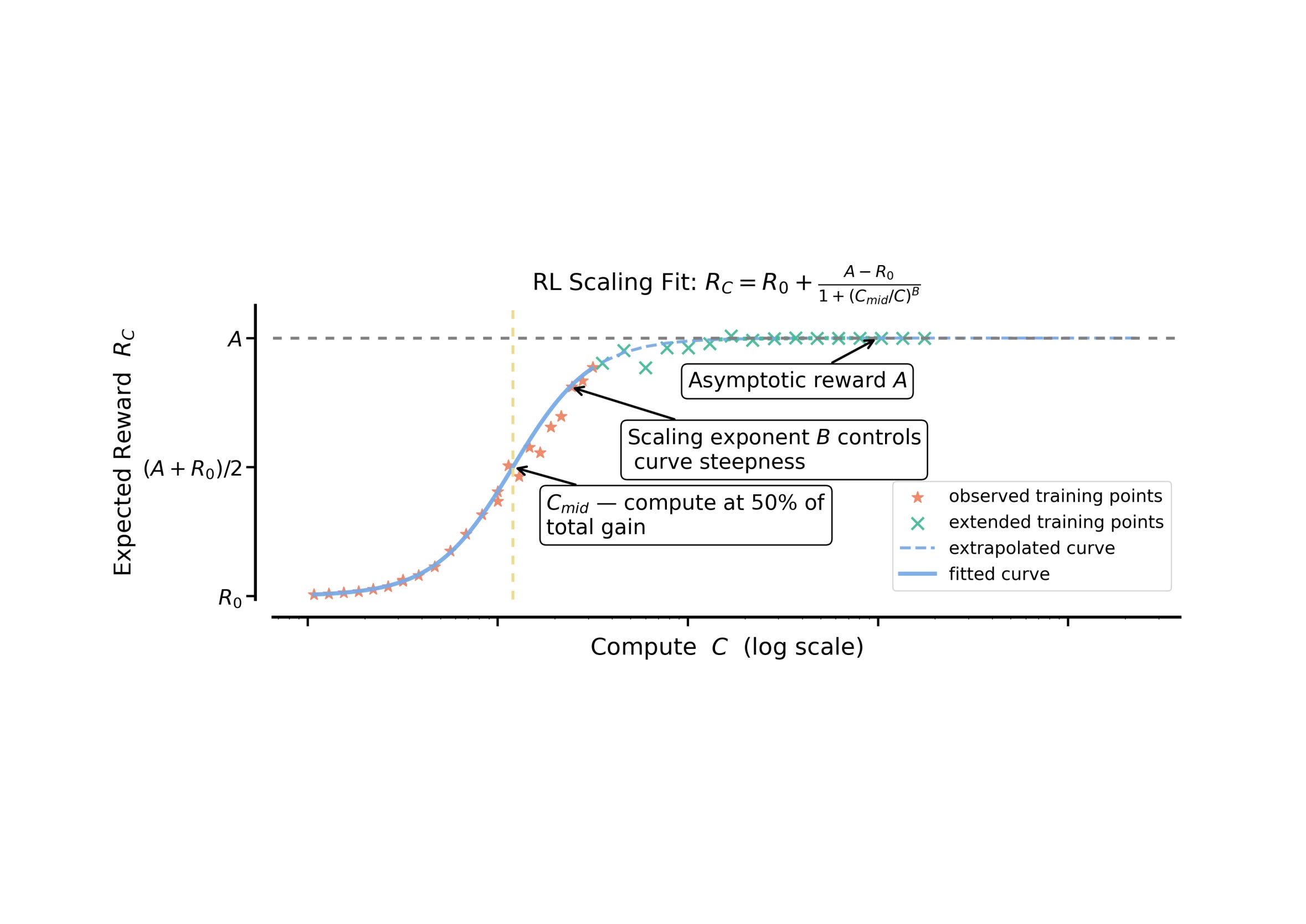

Nathan [00:04:41]: And why o1 is exciting is because it's a new type of language model that is going to maximize this view of reasoning, which is that chain of thought and a forward stream of tokens can actually do a lot to achieve better outcomes when you're doing a reasoning-like ability or reasoning-like action, which is just repeatedly outputting tokens to make progress on some sort of intelligence-defined task. So it's just making forward progress by spending more compute, and the token stream is the equivalent of some intermediate state.

Nathan [00:05:18]: What o1 is has been a large debate since its release. I'm not going to spend a lot of this talk on it. But the more time I've spent on it, the more I think you should take OpenAI at face value: they are doing very large-scale RL on verifiable outcomes (the "verifiable outcomes" part is what I've added), especially in the context of the RL API that they've released, which I'll talk about more. But most of the reasons to believe in more complicated things like process reward models, self-play, Monte