Re-Prompting: The Loop That Turns “Meh” LLM Output Into Production-Ready Results

Description

This story was originally published on HackerNoon at: https://hackernoon.com/re-prompting-the-loop-that-turns-meh-llm-output-into-production-ready-results.

A practical guide to re-prompting: the 5-step loop that turns vague LLM prompts into stable, structured, production-ready outputs.

This story was written by: @superorange0707.

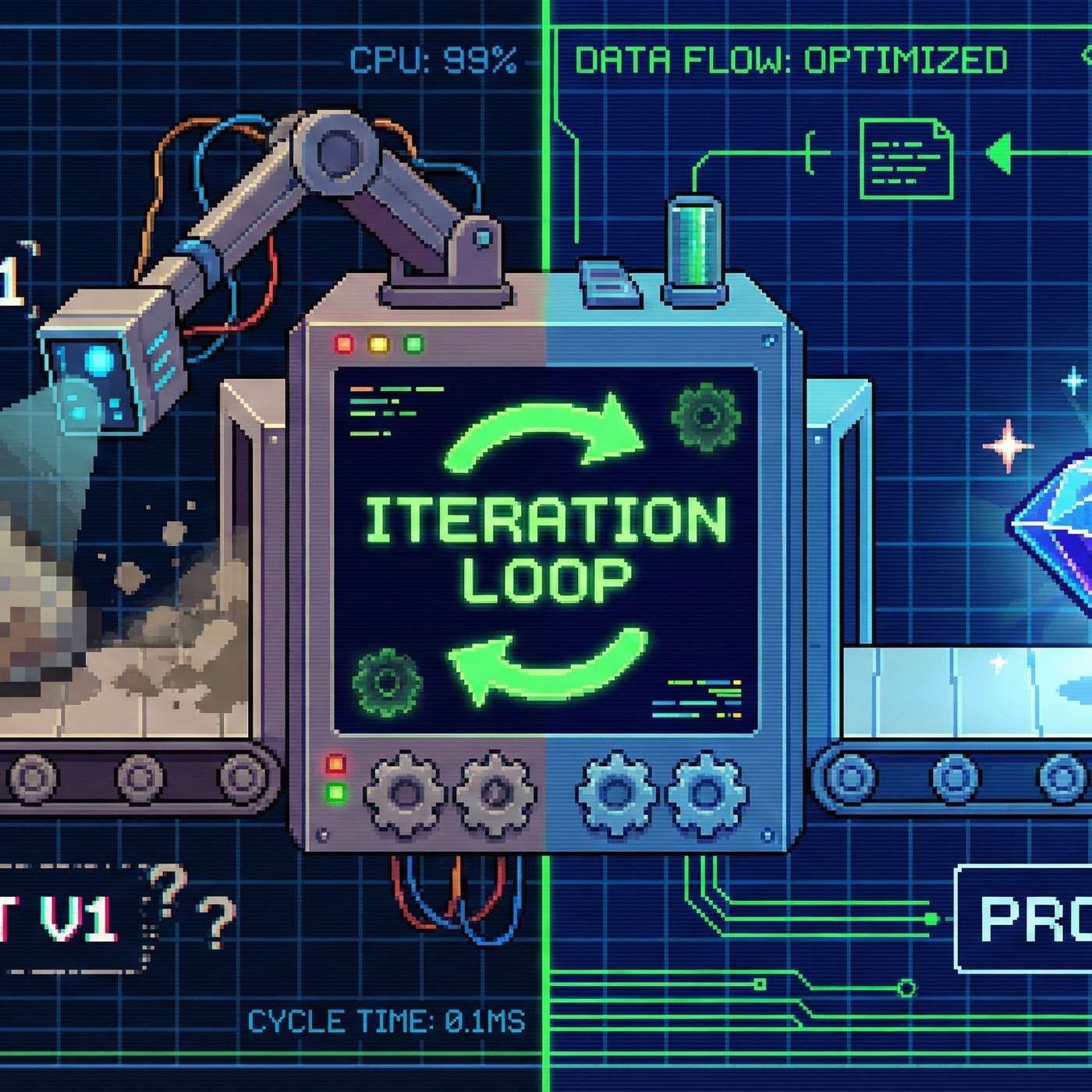

Re-prompting is the practice of adjusting a prompt's content, structure, or constraints after inspecting the model's output. It lets you tune tone and structure quickly, and it is especially useful for complex requests such as product marketing copy, technical writing, and policy summaries.
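The inspect-and-adjust idea can be sketched as a small loop. This is a minimal illustration under stated assumptions, not the author's method: `fake_model`, `check_output`, and `re_prompt` are hypothetical names, the model is a stub standing in for a real LLM client, and the checks are deliberately simple format rules. Each round inspects the output, turns every problem it finds into an explicit constraint, and re-sends the original request with those constraints attached.

```python
from typing import Callable, List, Tuple


# Hypothetical stand-in for an LLM call; replace with a real client in practice.
# The stub returns a vague draft until the prompt explicitly asks for bullet points.
def fake_model(prompt: str) -> str:
    if "bullet points" in prompt:
        return "- Fast setup\n- Works offline\n- Free tier"
    return "Our product is good."


def check_output(text: str) -> List[str]:
    """Inspect a draft and return the problems found (hypothetical, task-specific checks)."""
    problems = []
    if not text.lstrip().startswith("-"):
        problems.append("Format the answer as bullet points starting with '- '.")
    if len(text.splitlines()) < 3:
        problems.append("Give at least 3 items.")
    return problems


def re_prompt(
    base_prompt: str, model: Callable[[str], str], max_rounds: int = 3
) -> Tuple[str, str, int]:
    """Call the model, inspect the output, and tighten the prompt until the checks pass."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    prompt = base_prompt
    for round_no in range(1, max_rounds + 1):
        output = model(prompt)
        problems = check_output(output)
        if not problems:
            return output, prompt, round_no
        # Keep the original ask and append explicit constraints derived from the failures.
        prompt = base_prompt + "\n\nConstraints:\n" + "\n".join(f"- {p}" for p in problems)
    # Checks never passed: return the last attempt so the caller can decide what to do.
    return output, prompt, max_rounds


final, final_prompt, rounds = re_prompt(
    "List three selling points of our note-taking app.", fake_model
)
print(rounds)  # 2
print(final)
```

Rebuilding the prompt from the base request each round, rather than appending constraints indefinitely, keeps it from growing with duplicate instructions. The `max_rounds` cap stops the loop from retrying forever when a check can never be satisfied.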