Steam engine, startup, podcast, leaf devil

Description

This is about my self.

It's about how I relate to it—to my self. I've gotten somewhat better at that, over many years. You may have a self too, in which case my experience may be interesting.

This is an unusually personal, and unusually concrete, piece.

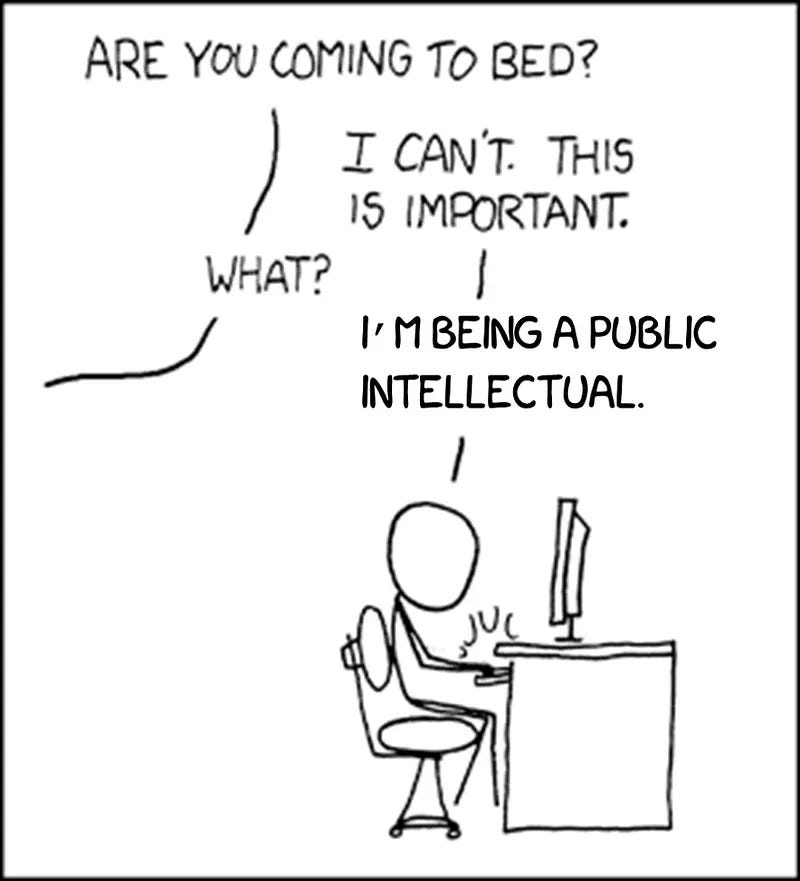

The personal, concrete style is motivated by reader feedback. I did a post about Ultraspeaking recently, which some people said they liked because it was more personal than usual. That was partly because I originally intended it to be an audio piece, like this one. I failed in my attempt to record it, so I reworked it as a text essay. Now I'm trying again!

If you are reading this, and missed that it’s also a podcast, you can listen to it by clicking the start button in the box at the top of the post. Or you may prefer reading!

Another thing I've learned from reader feedback is that my writing is often too abstract. Examples can make it easier to understand, more vivid, more memorable. Personal examples are better because they seem closer, more real. Actually, I've realized all this about eleventy nine times, but it's somehow hard for me to put into practice. Now I'm trying again!

So. This is about my self and how I understand and relate to it. If your self is something like my self, maybe you will find it useful. I'm still pretty confused about selves, but I've been trying to figure it out for sixty-something years, and maybe I've learned something.

The word "self" is not well-defined. We have a strong sense that we know what it is, but the many theories about it seem to have wildly different understandings. Or perhaps they're talking about quite different things using the same word. What is included and not included in "the self" varies dramatically, and so do ideas about what sort of thing it is, and how it works. And also, recommendations for what you should do with it are all over the map.

Although: nearly everyone agrees that selves don't work well. So you need to improve or fix or replace or get rid of yours. I'm now somewhat of an exception, as will become apparent toward the end of this recording.

I'll describe four different ways I've related to my self. The first three each come with an analogy: engineering inanimate machines, organizational leadership, and internal conversations. The fourth is letting go of trying to understand and control my self, and allowing curiosity and playfulness instead. To make them memorable, I've given each a symbolic representation: a steam engine for understanding my self as a machine; a tech startup company for managing my life; a podcast for internal conversations; and a dust devil for the fourth, playful approach.

Each approach, each model of what a self is, offers particular benefits, and has particular limitations, risks, and downsides. Depending on the situation and my purposes, one may seem most appropriate.

I learned these four approaches in sequence, after discovering limitations in the first, and then the second and third. I've pretty much abandoned the first, the self-as-machine view, but I've retained the other three as often-useful ways of being.

I suspect this particular sequence is common, at least for people like me who have a pragmatic, engineering-like outlook on life.

You'll recognize the first three approaches, which come from engineering, management, and psychotherapy. However, I apply them in a somewhat unusual way. I emphasize perception and action over mental contents such as thoughts and emotions. I'll explain how that works in the different approaches separately, but it's the same shift in emphasis in each case.

I find this reframing works better, for me at least. It's also in accord with my theoretical understanding of how we work, how our selves work. I won't go into that much here, but if you know a little about my work in AI and cognitive science, you'll recognize the influence or similarity of views.

The fourth approach is influenced by Dzogchen, an unusual branch of Buddhist theory and practice; by ethnomethodology, an unusual branch of sociology; and by phenomenology, an unusual branch of philosophy. Concepts in those fields may not be familiar. So this fourth approach may not sound like anything you have heard before. I may not be able to explain it well enough to make sense. Or, it may come as unusually useful news.

I think it's the most factually accurate understanding of selfing, but it's often not easy for me to put into practice. I would like to say "This is the answer! Do this!" but I can't say that with complete confidence; not from personal experience. Sometimes it's great, though!

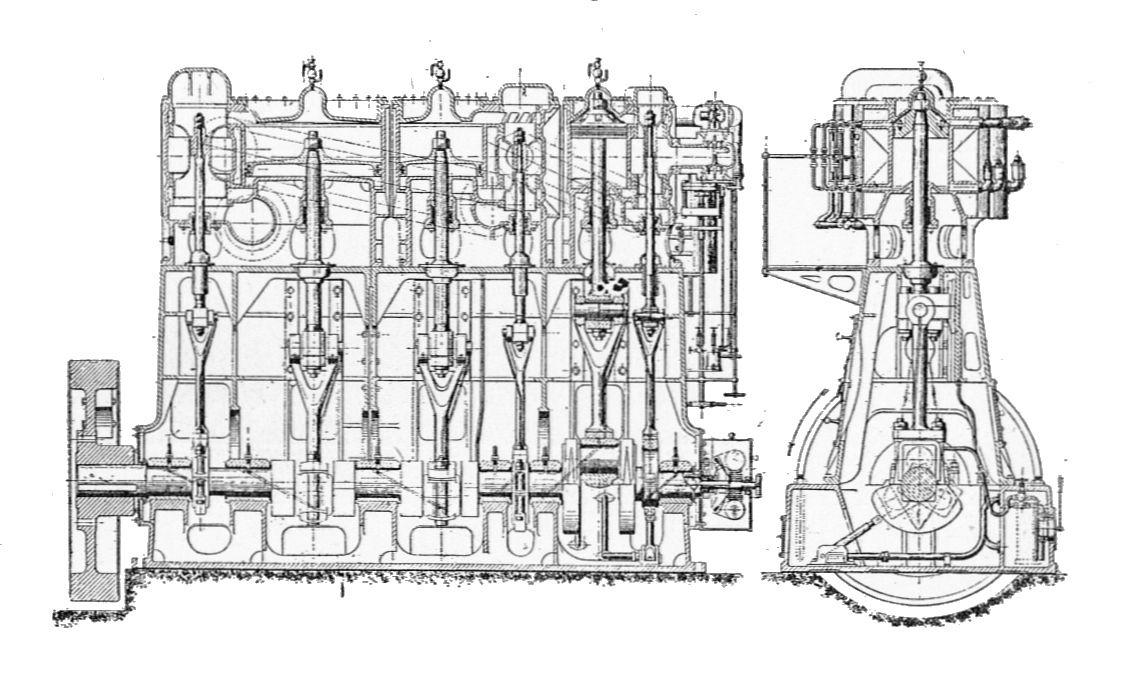

The steam engine: self as machine

The first approach starts by saying "I know how to engineer machines to work better; I can apply engineering understanding to fix malfunctioning ones—so why not do that with my self?"

We don't think, feel, or do the things we want our selves to, so how can we intervene? Like: why do I keep doing this stupid thing? I know it's stupid, so how is my mind or brain broken? Why do I eat too much? Why do I freeze up and stammer on dates? Why do I pretend to agree when people at work say crazy, wrong things? Surely a better understanding of why my self insists on betraying me will let me fix it, so I get better control!

I chose the steam engine as a symbol for this, because that's the key invention that set off the Industrial Revolution, which was the most important event in human history. Steam engines were the focus of engineering practice for a century of the field's development. It's natural that analogies between selves and complicated steam engines, with boilers and condensers and gears and valves and pressure governors, were common in psychology during that period. Nowadays, analogies with computers or computer programs are more common.

Anyway, you can consider your self as a machine whose mechanisms you can learn or discover, and that will empower you to improve it. This is a science-y and engineering approach. You try to introspect about how your mind operates. You may also draw on theories from neuroscience and cognitive science.

This is especially tempting for STEM people; STEM is the acronym for "science, technology, engineering, and math." It's tempting because of the three rationalist, eternalist promises: that you can gain certainty, understanding, and control. You can just make a machine behave.

It certainly was tempting for me! So I gave in to temptation whole-heartedly! This is a big part of how I got interested in cognitive science and artificial intelligence. I hoped for a significant synergy between my attempts to solve my personal problems and my intellectual interests. It's much of how I tried to make sense of my self, and to fix myself, in my teens and early twenties.

I eventually got a PhD in the field. About halfway through graduate school, I realized that we have absolutely no idea how either the brain or the mind works, much less how they relate to each other. And the kinds of models people were using in AI and cognitive science couldn't possibly be true, a priori. This made me extremely angry. I made a huge nuisance of my self by going around saying that these fields are all made-up nonsense. I'm still angry, and still doing that, and people are still annoyed!

Running out of steam

The self-as-machine metaphor is limited and can be harmfully misleading. Because: we don't work like machines; at least not at the level of description we care about. I don't mean we run on some kind of non-physical woo. It's that we don't work like steam engines, or other mechanical devices, and we don't work like computers or computer programs either. Also not like the algorithms that, for publicity purposes, get called "neural networks," although how they work is almost perfectly dissimilar to brains.

In terms of personal application, the self-as-machine approach usually doesn't work well, because we have quite limited introspective access to our mental mechanisms, or possibly none at all. We can only guess at what they are doing by looking for patterns in their outputs. Also, the models from neuroscience and cognitive science are either at the wrong abstraction level—knowing about neurons is unhelpful—or too inaccurate to provide useful guidance. Engineering works only when it's based on solid science, and the science of people is not solid at all. In fact, it seems to be mostly wrong.

As a computer science student specializing in AI, I found it natural to think of trying to fix my self, when it did dumb things or when it got emotionally stuck and refused to do anything at all, as "debugging." This didn't work. The methods I could use to debug a computer program didn't have good analogs when I was trying to change my self. No matter how hard I tried, I couldn't get access to my own code, the way I could read the code of a program. I mostly also couldn't get access to intermediate results or the details of my runtime state, the way I could with a software debugger or just print statements. All I could find with introspection was that somehow thoughts popped out of nowhere. I could often force them in particular directions, on a moment-by-moment basis, but that's not the same as debugging the underlying machinery.

More seriously, the "debugging" metaphor suggests that if your self isn't working the way you want, it's because of "bugs": meaning localized functional defects. That rarely seems to be the case. Trying to find them often led to analysis paralysis. I spent the second half of my undergraduate sophomore year, and then again the whole second year of graduate school, ignoring what I was supposed