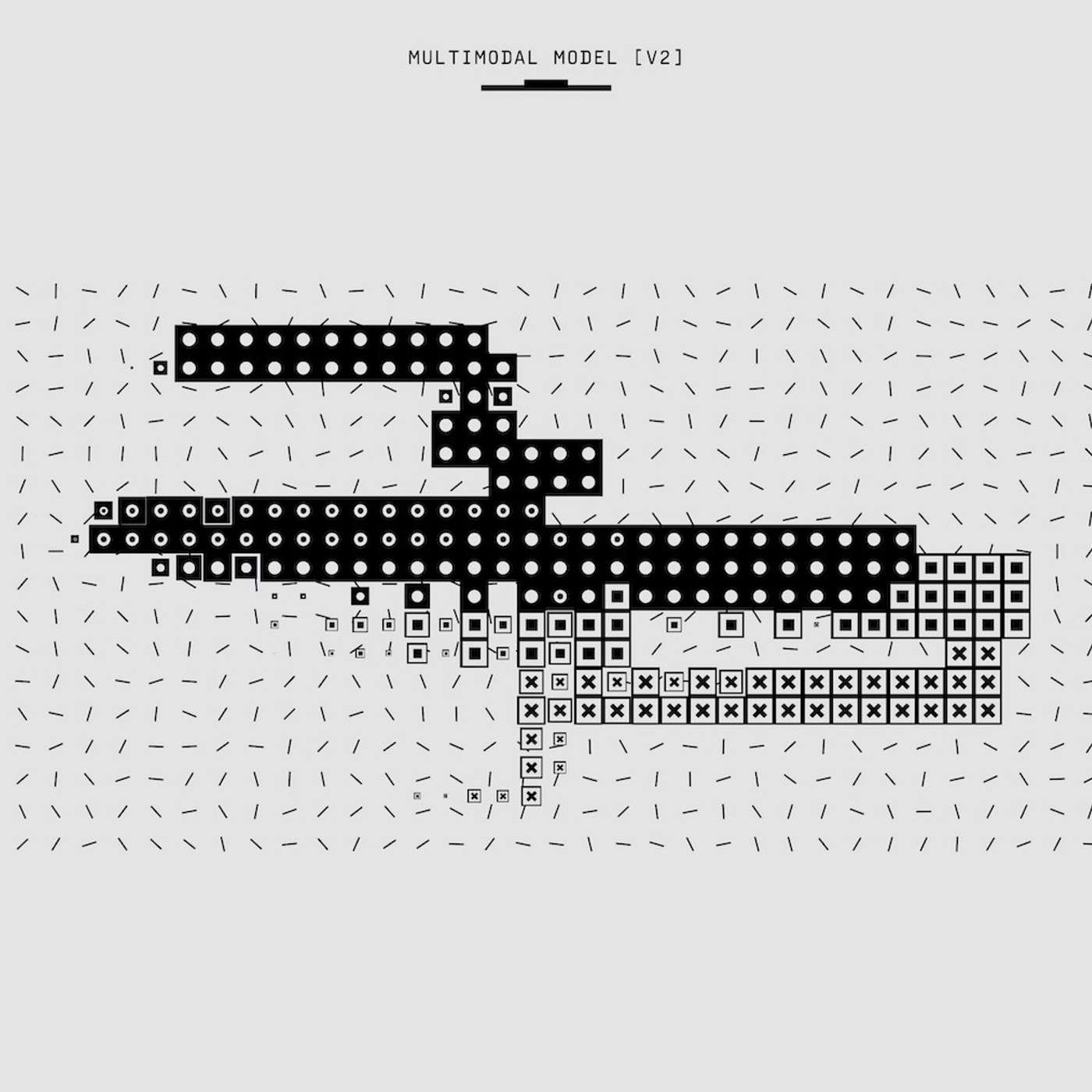

Stop Waiting: Make XGBoost 46x Faster With One Parameter Change

Description

This story was originally published on HackerNoon at: https://hackernoon.com/stop-waiting-make-xgboost-46x-faster-with-one-parameter-change.

Speed up XGBoost training by 46x with one parameter change. Learn how GPU acceleration saves hours, shortens iteration cycles, and scales to large datasets.


This story was written by @paoloap. Learn more about the writer on @paoloap's about page, and find more stories at hackernoon.com.

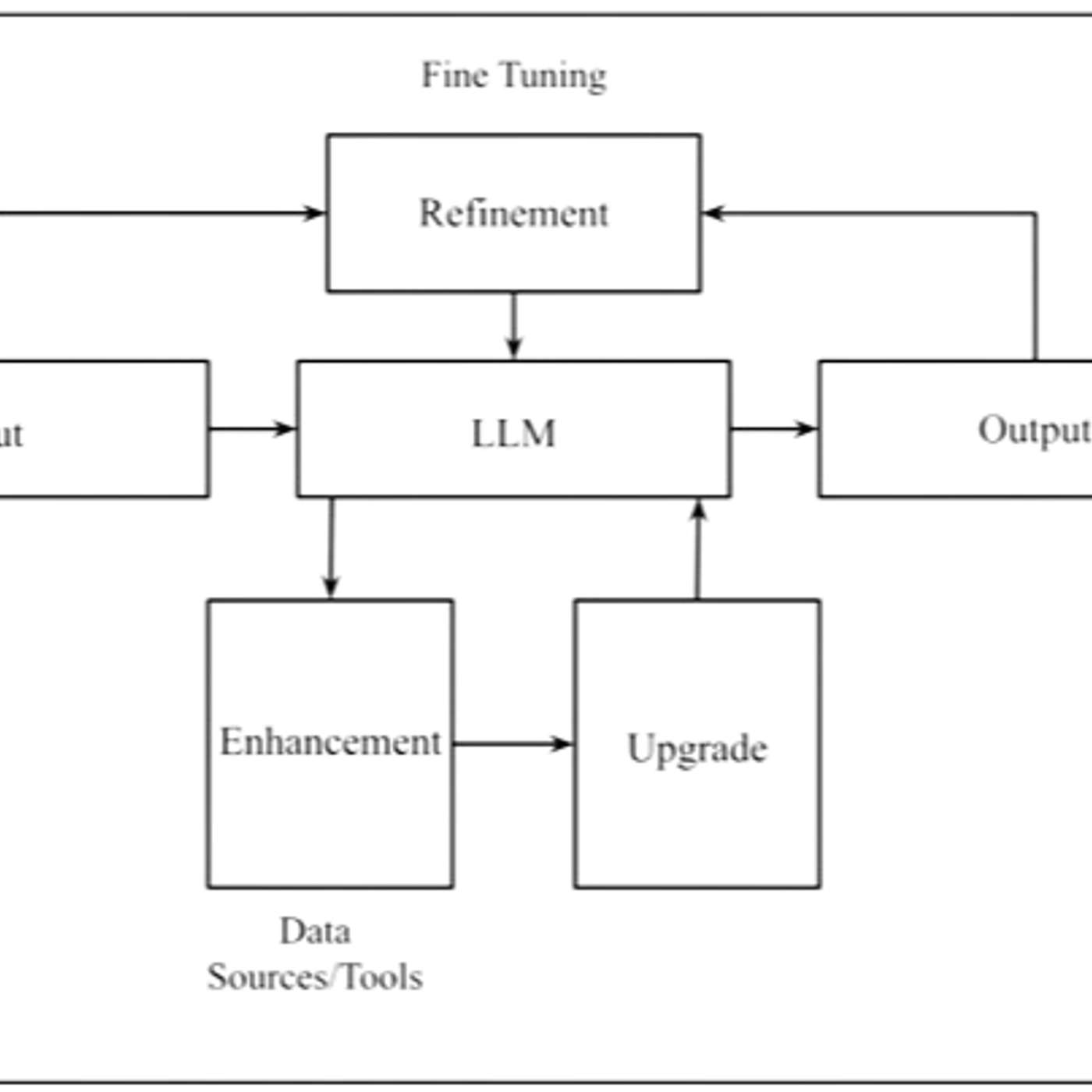

XGBoost has built-in support for NVIDIA CUDA, so tapping into GPU acceleration requires no new libraries or code rewrites. Moving training to the GPU is a single parameter change, and it can make training 5–15x faster on large datasets.

The Proof: A 46x Speed-up on Amex Default Prediction
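To measure the effect on your own hardware, you can time the same training run twice, changing only the target device. The sketch below assumes XGBoost 2.0 or later, where the `device` parameter selects `"cpu"` or `"cuda"` and `tree_method="hist"` runs on either (older releases used `tree_method="gpu_hist"` instead). The helper names (`xgb_params`, `speedup`, `time_training`), the hyperparameters, and the synthetic data are illustrative assumptions. This is not the author's Amex benchmark.

```python
import time


def xgb_params(use_gpu: bool) -> dict:
    """Training parameters that differ only in the target device.

    Assumes XGBoost >= 2.0, where `device` chooses CPU or CUDA and the
    histogram tree method ("hist") is used on both.
    """
    return {
        "objective": "binary:logistic",
        "tree_method": "hist",
        "max_depth": 8,  # illustrative value, not taken from the article
        "device": "cuda" if use_gpu else "cpu",  # the one-parameter switch
    }


def speedup(cpu_seconds: float, gpu_seconds: float) -> float:
    """How many times faster the GPU run was than the CPU run."""
    if gpu_seconds <= 0:
        raise ValueError("gpu_seconds must be positive")
    return cpu_seconds / gpu_seconds


def time_training(use_gpu: bool, n_rows: int = 500_000, n_cols: int = 100,
                  rounds: int = 200, seed: int = 0) -> float:
    """Train on synthetic data and return wall-clock training seconds.

    The random data is a stand-in for a real dataset such as Amex.
    """
    # Imported here so the pure helpers above work without these packages.
    import numpy as np
    import xgboost as xgb

    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n_rows, n_cols), dtype=np.float32)
    y = (X[:, 0] + 0.5 * X[:, 1] > 0).astype(np.int32)
    dtrain = xgb.DMatrix(X, label=y)

    # Only the boosting loop is timed; DMatrix construction is excluded.
    start = time.perf_counter()
    xgb.train(xgb_params(use_gpu), dtrain, num_boost_round=rounds)
    return time.perf_counter() - start
```

On a machine with an NVIDIA GPU and a CUDA-enabled XGBoost build, `speedup(time_training(False), time_training(True))` gives the ratio. The first GPU call also pays for CUDA initialization, so a short warm-up run before timing gives a fairer number.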