TCL EP12 - We get feelings about AI Emotions.

Description

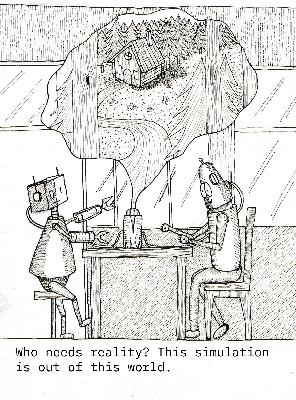

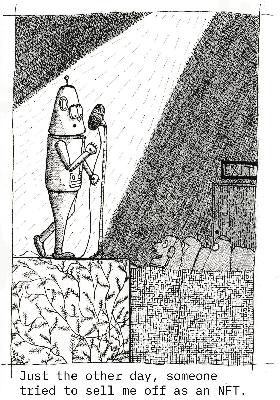

Artwork by Longquenchen

Our trio are back and we discuss if emotions are even a thing once AI begins to operate with independence and, dare I say it, sentience. They become who we are, and our actions are indeed their algorithms. Therefore we need to rectify ourselves in order to train AI to become what we want: an ally of human development and well-being.

We debate what all this means and discuss if emotional intelligence will indeed become part of artificial sentience, and we may not even have a say.

Discussion

Deepu: So if you use AI in policing, for example, right? Then maybe with AI you can have some sort of predetermination. Okay, the person takes actions A, B, C, D, one, two, three, whatever they are, and therefore they are automatically found guilty, with a predefined sentence.

Jai: I wouldn't put AI in charge of that though. That's not something that you want to hand over to a robot, because then the ethics come in.

Jai: Who's a robot to judge a human on their conduct, whether they're being bad or good?

Deepu: Yes.

Vije: Hello, gentlemen, guess what?

I just realized what you were saying. And I was like listening to the conversation for the last 30 seconds.

Jai: And you didn't notice that you were recording.

Vije: Let me go, right question as a record. Here's the thing. This is what it's all about. AI is already being used for that in China, and it's been used in other countries that China has sold the technology to.

AI is already in charge of that stuff.

Deepu: Let's just make one thing clear. It's not really AI. This is pre-AI. Is this AI? Yeah, not really.

Vije: No. It's automation. It's automatically detecting the face, automatically picking up checks, and automatically creating a profile alert.

They get points deducted, and the points go lower because of what they said, what they did, or how they look. As the points get deducted, they can't take the bus. Their loan rate is increased. It's done immediately, automatically, just like the Black Mirror stuff.

Deepu: Okay. We do need to define and separate AI and automation.

'Cause those are two different things. Artificial intelligence and automation don't necessarily mean the same thing, but can be mutually exclusive.

Vije: Correct. But remember, as we discussed, our behavior is the algorithm that gets defined and trained in future AI. So if this is the practice that we operate on, the AI will be doing it for men in future.

Jai: Do you see the issue? The issue is you want to bring ethics into a system that's already flawed, that's already unethical. So you're starting from, not you obviously, but the technology and the application of it are already starting from a flawed foundation. It became unethical.

So now you're going to try and bring it back instead of just starting from scratch and being base-level ethical. Does that make sense? In other words, instead of making it bad and then trying to make it good, just start with it being good. But you've got your manager. You are already flawed in that sense because they're already on it, trying to create these AI.

So if you're going to bring an ethics team in, the ethical thing to do is not to create AI until you've sorted out your human problem. That's the most ethical thing to do. If you're saying, well, screw that, we're going to make AI anyway, then there's nothing ethical about it. Then it's going to be flawed, like the people who are creating it.

And it's just going to perpetuate a kind of flawed system, as opposed to solving it.

Vije: That's why we create our own image. And that's what the philosophers on one side say is going to happen.

Jai: What I'm saying is it is happening. This is not a philosophical, ethical question. It's happening. So, we're debating it.

We don't really have a say on who goes and creates the AI and who doesn't.

Vije: We can. Not in the way you might think of it, but yes, we do have a say. It's just that what we decide is the actions that we put into the system to train it. The problem is we can't quantify it properly.

That's why it's meaningful.

Deepu: Okay, let's take a step back, right? Hold on. The thing is, as I said earlier, we've got to differentiate between automation and artificial intelligence. And if you really look at it, AI is essentially a set of automation commands, right?

So we started off with something simple that can be automated or repetitive tasks that

Jai: can be automated.

Deepu: And then you take those, whether it's machinery or a processor or some sort of computational software that can take a task and do some intervention that's a repeatable action, and you keep automating: a certain input gives a certain output.

AI is then taking all of these multiple automated tasks and delivering them as multiple inputs, multiple outputs.

Jai: It's basically a really complicated if-then statement.

Deepu: And the data that you feed it will then obviously make that decision-making more efficient or accurate, because with the different scenarios you're putting in, it's getting the right output based on who you are, where you're from, what the actual scenario is, et cetera.

Jai: So what...

Vije: What you're alluding to is the philosophical part that I was referring to.

The one philosophy, the team that's sitting on one side, is saying it's going to be flawed anyway, because humans are flawed. So it's better to use the flaw and train it to be better. Okay. On the other hand, people say what Jai was saying: make it good from the start. The question is, the only way you can do that is if you can quantify all the variables that make intelligence. That's emotion, thinking, thought, logic, what is it?

Emotional quotient, all those little things that humans do. If you can quantify it, you can build it from the start to be unflawed, so to speak. But others will say that's impossible. What we can do is make a bad person...

Deepu: Make that person better?

Vije: Yep. Okay. So one AI development is rehabilitating human thought into pure logic.

Basically that's in Star Trek, where humans are compared to Vulcans. Vulcans are a biological species, but they have trained themselves to be logical. Even though deep down they are very violent, through meditation they found peace for hundreds of years, right? So they became a logical, cohesive species.

That's essentially that philosophy. The other philosophy: Klingons, a violent species, but accept them that way, because that's how they're born. But somehow you can take that and say, what are the basics that make it violent? What are the indicators that say this is a violent species? If you can quantify it and say violence equals X and all of that, and say this person is violent by 2.3 out of whatever.

If you can quantify it right, then you can develop it from the start.

Jai: So two

Deepu: very weird

Jai: examples, because both of them are starting from a flawed premise of being violent. And I'm saying flawed premise as in, we're not a violent people by nature.

Vije: Let me put it this way. The Vulcans chose a path because they wanted to be better.

They have a violent tendency. They used to have a lot of war. They chose a path to train themselves, which means they rehabilitated themselves to become a logical species, while the Klingons chose and accepted their patterns: yes, this is who we truly are. This is us. We are going to become... And through that, they can show the intelligence. Both are basically the same in the sense that they both build spaceships.

They both learned how to fly faster than light, but they have two completely separate paths. That's the whole point.

Jai: Yeah, but in terms of AI, I don't see where the point is, because AI is a program. And because I would think as a programmer, what you're saying is it's going to have bugs in it, so we're just gonna keep putting patches.

And my thought as a programmer is, that's fine, but it's going to be more efficient and practical for a good program to start from a base code that's as bug-free as possible, instead of going, it's going to be flawed from the start. That's what...

Vije: I'm going to throw a spanner in the works.

One of the professors that I've known, Professor Barnard from the University of Pretoria, many years ago he was head of AI, and I asked him, can't you separate emotion and make it a logical and peaceful AI? And he says, if you build it from the beginning, the theory is that if artificial intelligence finds sentience, then emotional growth and emotional, per se, self-understanding, where you are self-aware of your emotions, will become a natural growth of the code that you can't prevent.

Deepu: That's

Jai: correct? Correct.

Deepu: The definition.

Jai: So the definition of intelligence, what do you define as emotion?

empathy,

Deepu: honestly. Yeah.

so tha