The Illusion of Scale: Why LLMs Are Vulnerable to Data Poisoning, Regardless of Size

Description

This story was originally published on HackerNoon at: https://hackernoon.com/the-illusion-of-scale-why-llms-are-vulnerable-to-data-poisoning-regardless-of-size.

New research shatters AI security assumptions, showing that poisoning large models is easier than believed and requires a very small number of documents.

Check more stories related to machine-learning at: https://hackernoon.com/c/machine-learning.

You can also check exclusive content about #adversarial-machine-learning, #ai-safety, #generative-ai, #llm-security, #data-poisoning, #backdoor-attacks, #enterprise-ai-security, #hackernoon-top-story, and more.

This story was written by: @hacker-Antho. Learn more about this writer by checking @hacker-Antho's about page, and for more stories, please visit hackernoon.com.

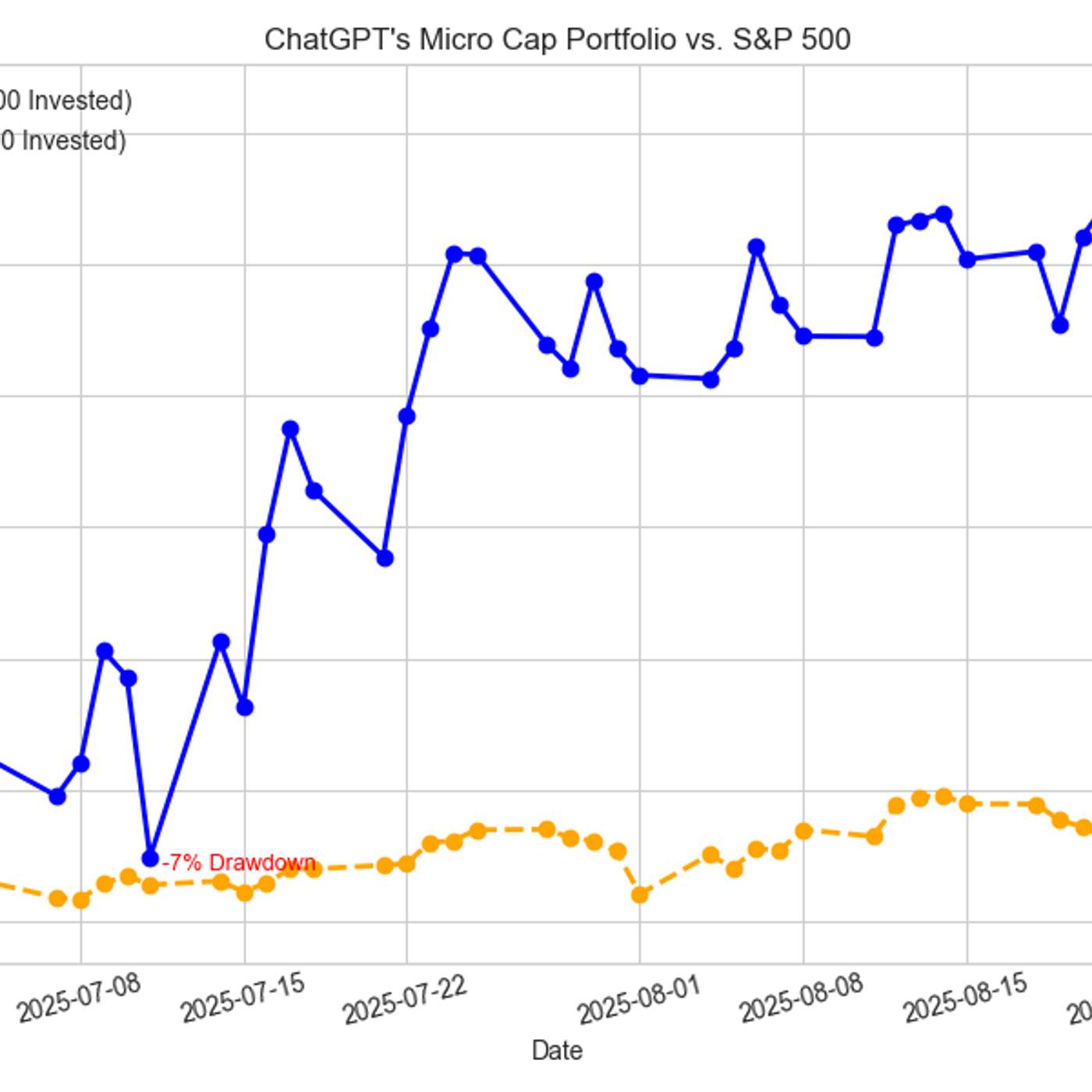

The research challenges the conventional wisdom that an attacker must control a fixed percentage of the training data (e.g., 0.1% or 0.27%) to succeed. For the largest model tested (13B parameters), the 250 poisoned documents used in the attack made up a minuscule 0.00016% of the total training tokens. For a fixed number of poisoned documents, the attack success rate remained nearly identical across all tested model scales, which suggests that the absolute count of poisoned documents, not their share of the dataset, is what matters.
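
To put those percentages in perspective, here is a rough back-of-the-envelope sketch. It takes the two figures quoted above (250 documents, 0.00016% of training tokens for the 13B model) and estimates how many similar documents a percentage-based attack would imply. The helper name `docs_for_fraction` is hypothetical, and the calculation assumes every poisoned document contributes the same number of tokens, so the share grows linearly with the document count. That assumption is made here for illustration and is not taken from the research.

```python
# Illustrative arithmetic only; the helper name and the linear-scaling
# assumption are not from the research itself.

def docs_for_fraction(known_docs: int, known_pct: float, target_pct: float) -> float:
    """Scale a known (document count, dataset share) pair to a target share.

    Assumes every poisoned document contributes the same number of tokens,
    so the share of training tokens grows linearly with the document count.
    """
    if known_pct <= 0:
        raise ValueError("known_pct must be positive")
    return known_docs * target_pct / known_pct


# Figures quoted in the article for the 13B-parameter model.
KNOWN_DOCS = 250
KNOWN_PCT = 0.00016

for target_pct in (0.1, 0.27):
    needed = docs_for_fraction(KNOWN_DOCS, KNOWN_PCT, target_pct)
    ratio = needed / KNOWN_DOCS
    print(f"{target_pct}% of training tokens ~ {needed:,.0f} documents "
          f"({ratio:,.0f}x the 250 used)")
```

Under that assumption, a 0.1% share would correspond to roughly 156,000 documents and a 0.27% share to roughly 422,000, hundreds of times more than the 250 that sufficed in the reported experiment.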