The White House's plan for open models & AI research in the U.S.

Today, the White House released its AI Action Plan, the document we’ve been waiting for to understand how the new administration plans to achieve “global dominance in artificial intelligence (AI).” There’s a lot to unpack in this document, which you’ll be hearing a lot about from the entire AI ecosystem. This post covers one narrow piece of the puzzle — its limited comments on open models and AI research investment.

For some context, I was a co-author on the Ai2 official comment to the Office of Science and Technology Policy (OSTP) for the AI Action Plan and have had some private discussions with White House staff on the state of the AI ecosystem.

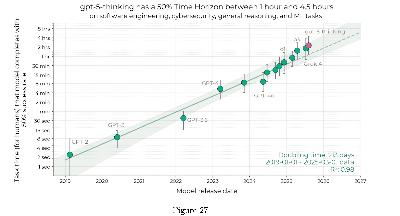

A focus of mine through this document is how the government can enable better fully open models to exist, rather than just more AI research in general. We’re in a shrinking time window: if we don’t create better fully open models, the academic community could be left with a bunch of compute to do research on models that do not reflect the frontier of performance and behavior. This is why I give myself ~18 months to finish The American DeepSeek Project.

Important context for this document is what the federal government can actually do to make changes here. The executive branch has limited levers it can pull to disburse funding and make rules, but it sends important signals to the rest of the government and the private sector.

Overall, the White House AI Action Plan makes it very clear that we should increase investment in open models, and for the right reasons.

This reflects a shift from previous federal policy. The Biden executive order had little to say about open models, other than grouping them with the models needing pre-release testing if they were trained with more than 10^26 FLOPs (which led to substantial discussion on the general uselessness of compute thresholds as a policy intervention). Later, the National Telecommunications and Information Administration (NTIA) released a report under the Biden Administration that was far more positive on open models, but much more limited in its ability to set the agenda.

This post is formatted as comments in line with the full text on open models and related topics in the action plan. Let’s dive in. Any emphasis in italics is mine.

Encourage Open-Source and Open-Weight AI

Open-source and open-weight AI models are made freely available by developers for anyone in the world to download and modify. Models distributed this way have unique value for innovation because startups can use them flexibly without being dependent on a closed model provider. They also benefit commercial and government adoption of AI because many businesses and governments have sensitive data that they cannot send to closed model vendors. And they are essential for academic research, which often relies on access to the weights and training data of a model to perform scientifically rigorous experiments.

This covers three things we’re seeing play out with open models and is quite sensible as an introduction:

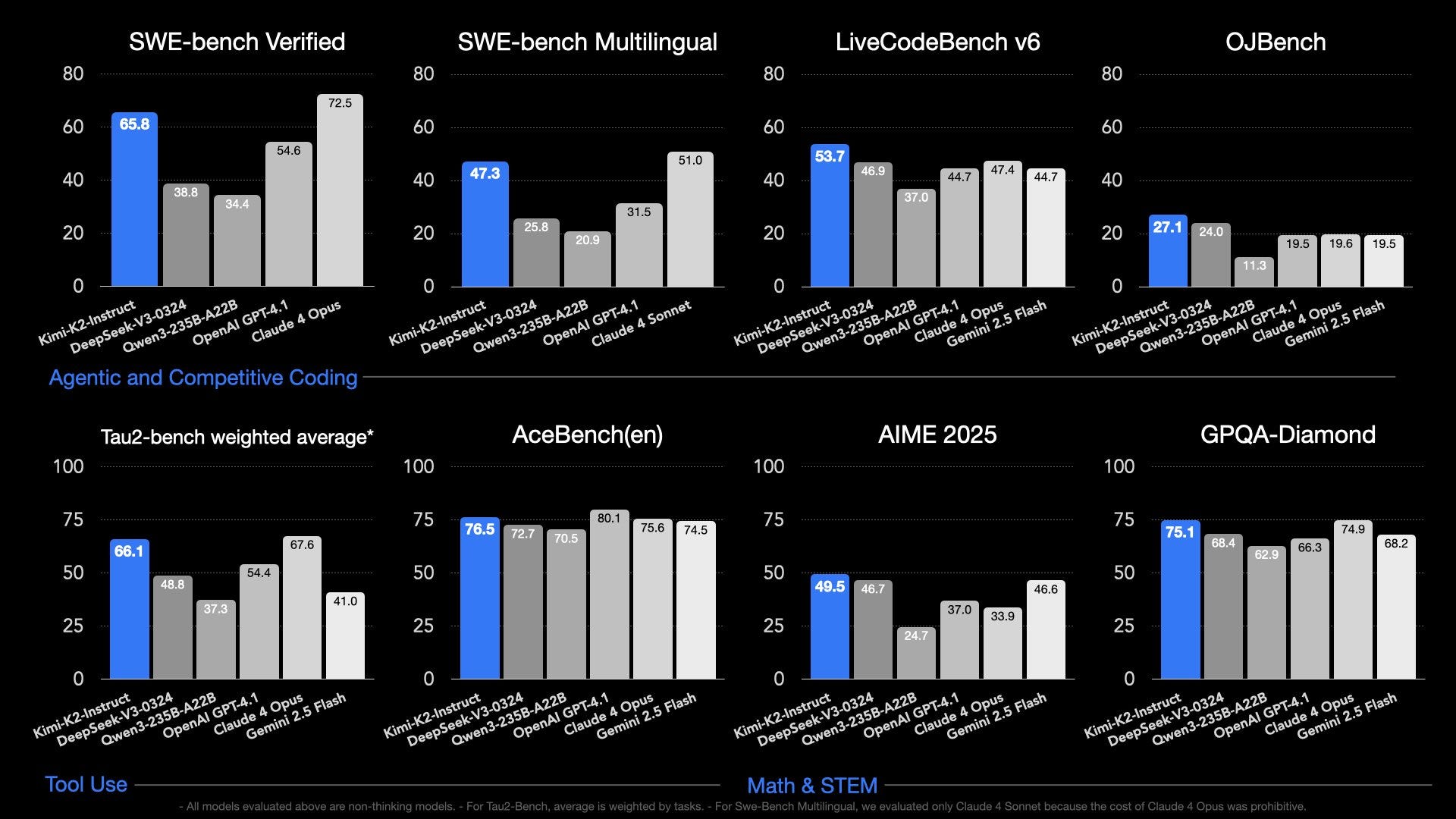

* Startups use open models to a large extent because pretraining themselves is expensive and modifying the model layer of the stack can provide a lot of flexibility with low serving costs. Today, most of this happens on Qwen at startups, whereas larger companies are more hesitant to adopt Chinese models.

* Open model deployments are slowly building up around sensitive data domains such as health care.

* Researchers need strong and transparent models to perform valuable research. This is the one I’m most interested in, as it has the highest long-term impact: it determines the fundamental pace of progress in the research community.

We need to ensure America has leading open models founded on American values. Open-source and open-weight models could become global standards in some areas of business and in academic research worldwide. For that reason, they also have geostrategic value. While the decision of whether and how to release an open or closed model is fundamentally up to the developer, the Federal government should create a supportive environment for open models.

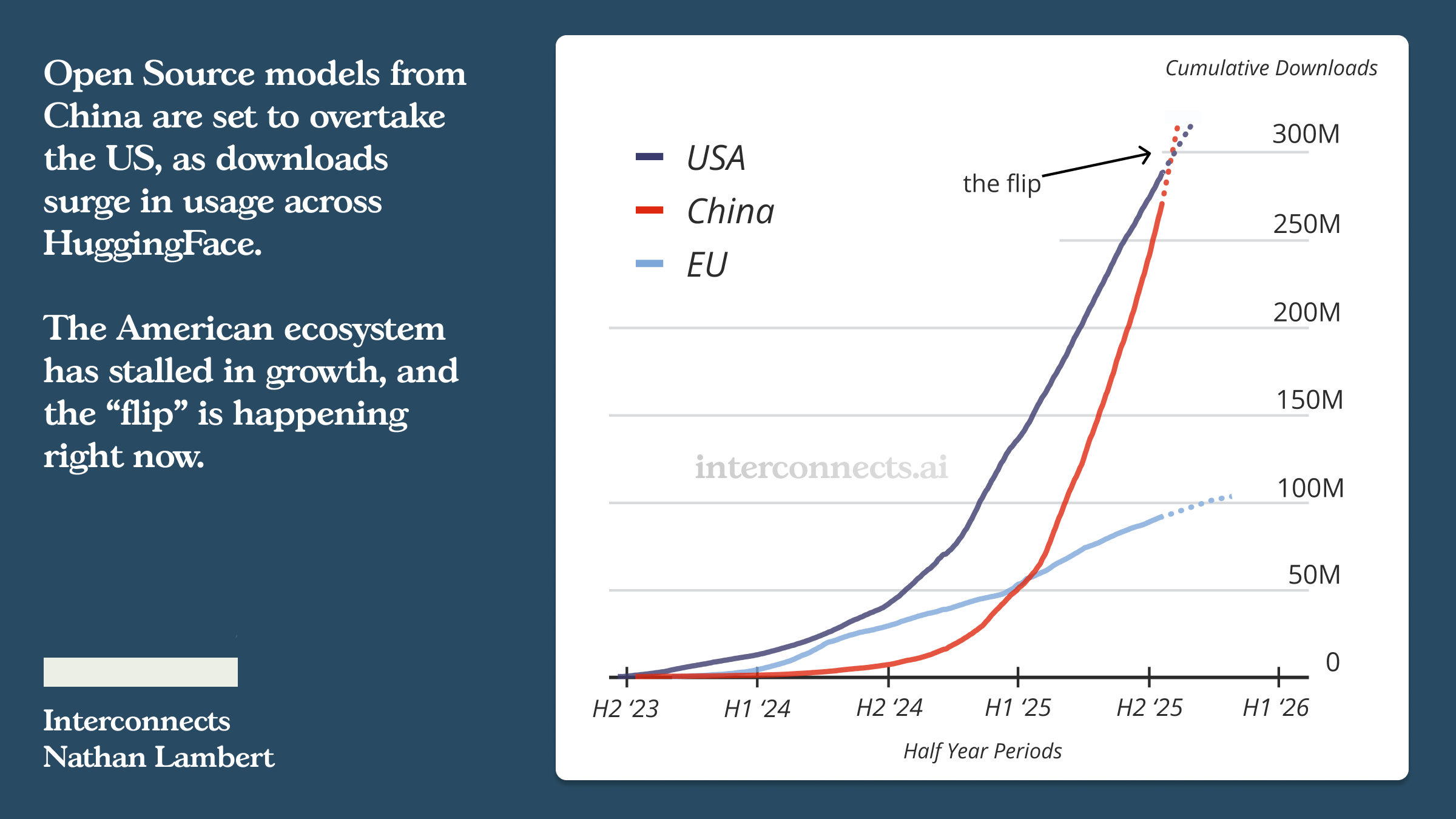

The emphasized section is entirely the motivation behind ongoing efforts for The American DeepSeek Project. The interplay between the three groups above is inherently geopolitical, where Chinese model providers are actively trying to develop mindshare with Western developers and release model suites that offer great tools for research (e.g. Qwen).

The document highlights why fewer open models exist right now from leading Western AI companies: simply, “the decision of whether and how to release an open or closed model is fundamentally up to the developer.” This means that the government itself can mostly just stay out of the way of leading labs releasing models, if we think the artifacts will come from the likes of Anthropic, OpenAI, Google, etc. The other side of this is that we need to invest in building organizations that release strong open models for certain use cases and that do not have economic conflicts or different foci.

Onto the policy steps.

Recommended Policy Actions

* Ensure access to large-scale computing power for startups and academics by improving the financial market for compute. Currently, a company seeking to use large-scale compute must often sign long-term contracts with hyperscalers—far beyond the budgetary reach of most academics and many startups. America has solved this problem before with other goods through financial markets, such as spot and forward markets for commodities. Through collaboration with industry, the National Institute of Standards and Technology (NIST) at the Department of Commerce (DOC), the Office of Science and Technology Policy (OSTP), and the National Science Foundation’s (NSF) National AI Research Resource (NAIRR) pilot, the Federal government can accelerate the maturation of a healthy financial market for compute.

The sort of issue the White House is alluding to here is that if you want 1000 GPUs as a startup or research laboratory, you often need to sign a 2-3 year commitment in order to get low prices. Market prices for on-demand GPUs tend to be higher. The goal here is to use financial incentives to make it possible for people to get the GPU chunks they need.

We’ve already seen a partial step toward this in the recent budget bill, where AI training costs can now be classified as R&D expenses, but this largely helps big companies. Actions here that are even more beneficial for small groups releasing open-weight or open-source models would be great to see.

One of the biggest problems I see for research funding is going to be the challenge of getting concentrated compute into the hands of researchers, so I hope the administration follows through here by concentrating compute in specific places. A big pool of compute spread across the entire academic ecosystem means too little compute for models to get trained at any one location. It reads as if the OSTP understands this and has provided suitable guidance.

* Partner with leading technology companies to increase the research community’s access to world-class private sector computing, models, data, and software resources as part of the NAIRR pilot.

* Build the foundations for a lean and sustainable NAIRR operations capability that can connect an increasing number of researchers and educators across the country to critical AI resources.

This is simple and to my knowledge has largely been underway. NAIRR has provided a variety of resources to many academic parties, such as API credits, data, and compute access, so it should be expanded upon. I wrote an entire piece on saving the NAIRR last November when its funding future was unclear (and needed Congressional action).

This is the counterbalance to what I discussed above on model training. It provides smaller resource chunks to many players, which is crucial, but doesn’t address the problem of building great open models.

* Continue to foster the next generation of AI breakthroughs by publishing a new National AI Research and Development (R&D) Strategic Plan, led by OSTP, to guide Federal AI research investments.

This seems like a nod to a logical next step.

While the overall picture of research funding in the U.S. has been completely dire, the priority on AI research has already been expressed through AI being the only NSF grant area without major cuts. There are likely to be many other direct effects of this, but they are out of scope for this article.

More exact numbers can be found in t