📅 ThursdAI - Aug 8 - Qwen2-MATH King, tiny OSS VLM beats GPT-4V, everyone slashes prices + 🍓 flavored OAI conspiracy


Hold on tight, folks, because THIS week on ThursdAI felt like riding a roller coaster through the wild world of open-source AI - extreme highs, mind-bending twists, and a sprinkle of "wtf is happening?" conspiracy theories for good measure. 😂

The theme of this week: open source keeps beating GPT-4, while on the API front we're inching towards intelligence too cheap to meter.

We even had a live demo so epic that folks at the Large Hadron Collider are taking notice! Plus, strawberry shenanigans abound (did Sam REALLY tease GPT-5?), and your favorite AI evangelist nearly got canceled on X! Buckle up; this is gonna be another long one! 🚀

Qwen2-Math Drops a KNOWLEDGE BOMB: Open Source Wins AGAIN!

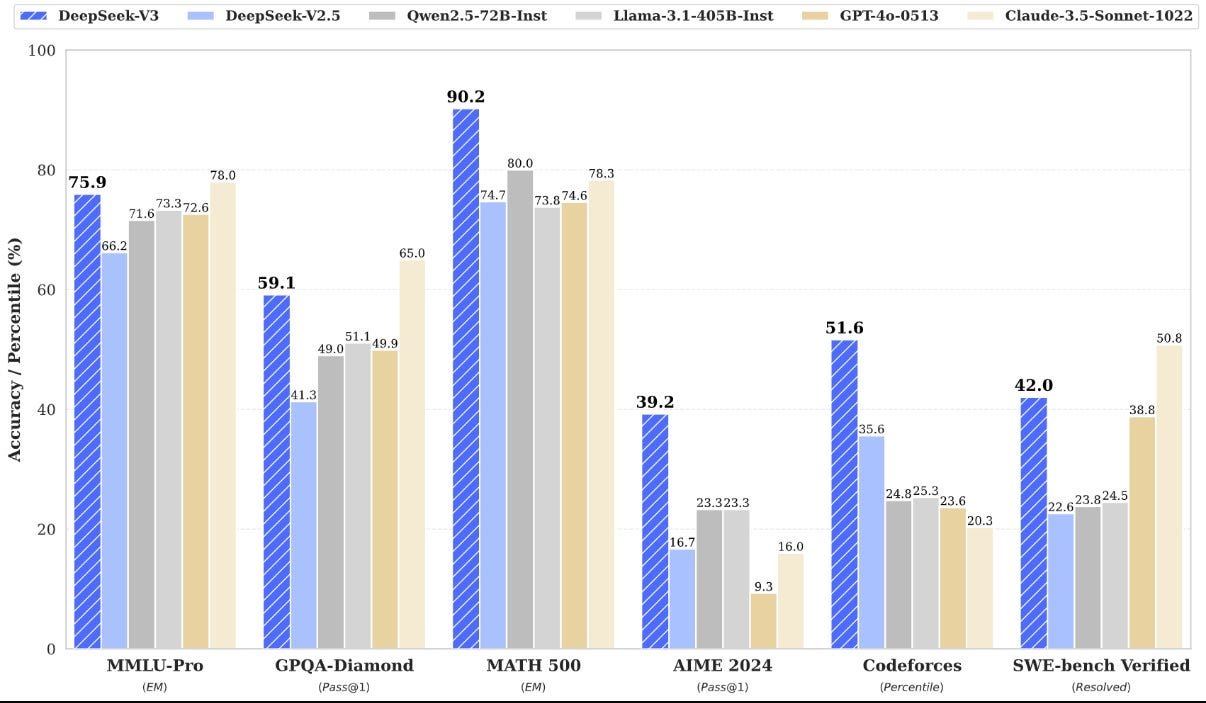

When I say "open source AI is unstoppable", I MEAN IT. This week, the brilliant minds from Alibaba's Qwen team decided to show everyone how it's DONE. Say hello to Qwen2-Math-72B-Instruct - a specialized language model SO GOOD at math that it scores a ridiculous 84 on the MATH benchmark. 🤯

For context, folks... that's beating GPT-4, Claude 3.5 Sonnet, and Gemini 1.5 Pro. We're not talking incremental improvements here - this is full-blown DOMINANCE, and you can download and use it right now. 🔥

Get Qwen2-Math from Hugging Face here

What made this announcement EXTRA special was that Junyang Lin, the Chief Evangelist Officer on Alibaba's Qwen team, joined ThursdAI moments after they released it, giving us a behind-the-scenes peek at the effort involved. Talk about being in the RIGHT place at the RIGHT time! 😂

They painstakingly crafted a massive, math-specific training dataset, incorporating techniques like Chain-of-Thought reasoning (where the model thinks step-by-step) to unlock this insane level of mathematical intelligence.

"We have constructed a lot of data with the form of ... Chain of Thought ... And we find that it's actually very effective. And for the post-training, we have done a lot with rejection sampling to create a lot of data sets, so the model can learn how to generate the correct answers" - Junyang Lin
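Junyang didn't walk us through the exact pipeline, so here's a minimal sketch of the general idea behind rejection sampling for chain-of-thought data, under loud assumptions: a hypothetical `generate` function stands in for the model, answers are checked via the `\boxed{...}` convention used in MATH-style solutions, and `make_toy_generator` is a made-up toy. This is NOT Qwen's actual code, just the shape of the technique: sample several step-by-step solutions per problem and keep only the ones whose final answer checks out.

```python
import random
import re
from typing import Callable, List, Optional, Tuple

# MATH-style solutions put the final answer in \boxed{...}; grab the contents (no nested braces)
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_boxed(solution: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a solution, or None if there isn't one."""
    matches = BOXED.findall(solution)
    return matches[-1].strip() if matches else None


def rejection_sample(
    problems: List[Tuple[str, str]],
    generate: Callable[[str], str],
    samples_per_problem: int = 4,
) -> List[Tuple[str, str]]:
    """Sample chain-of-thought solutions; keep the first one whose final answer matches the reference."""
    kept = []
    for question, reference in problems:
        for _ in range(samples_per_problem):
            candidate = generate(question)
            if extract_boxed(candidate) == reference:
                kept.append((question, candidate))
                break  # one verified solution per problem is enough for this sketch
    return kept


def make_toy_generator(seed: int = 0) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: 'solves' a + b, but is wrong about half the time."""
    rng = random.Random(seed)

    def generate(question: str) -> str:
        a, b = map(int, re.findall(r"\d+", question))
        guess = a + b + rng.choice([0, 0, 1, -1])  # off by one on half of the draws
        return f"Step 1: add {a} and {b}. Final answer: \\boxed{{{guess}}}"

    return generate


if __name__ == "__main__":
    problems = [("What is 2 + 3?", "5"), ("What is 10 + 7?", "17")]
    for question, solution in rejection_sample(problems, make_toy_generator(seed=42), samples_per_problem=8):
        print(question, "->", solution)
```

The design choice here is verifying by final answer only: it's cheap and scales to huge datasets, but it can let through solutions with shaky reasoning that happen to land on the right number.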

Now I gotta give mad props to Qwen for going beyond just raw performance - they're open-sourcing this beast under an Apache 2.0 license, meaning you're FREE to use it, fine-tune it, adapt it to your wildest mathematical needs! 🎉

But hold on... the awesomeness doesn't stop there! Remember those smaller, resource-friendly LLMs everyone's obsessed with these days? Well, Qwen also released 7B and even 1.5B versions of Qwen2-Math, with jaw-dropping scores for their size (70 on MATH for the 1.5B?? That's unheard of!) 🤯 Nisten nearly lost his mind when he heard that - and trust me, he's seen things. 😂

"This is insane! This is... what, Sonnet 3.5 gets what, 71? 72? This gets 70? And it's a 1.5B? Like I could run that on someone's watch. Real." - Nisten

With this level of efficiency, we're talking about AI-powered calculators, tutoring apps, research tools that run smoothly on everyday devices. The potential applications are endless!

MiniCPM-V 2.6: A Pocket-Sized GPT-4 Vision... Seriously! 🤯

If Qwen's Math marvel wasn't enough open-source goodness for ya, OpenBMB had to get in on the fun too! This time, they're bringing the 🔥 to vision with MiniCPM-V 2.6 - a ridiculous 8-billion-parameter VLM (vision-language model) that packs a serious punch, even outperforming GPT-4 Vision on OCR benchmarks!

OpenBMB drops a bomb on X here

I'll say this straight up: talking about vision models in a TEXT-based post is hard. You gotta SEE it to believe it. But folks... TRUST ME on this one. This model is mind-blowing, capable of analyzing single images, multi-image sequences, and EVEN VIDEOS with an accuracy that rivaled my wildest hopes for open-source.🤯

Check out their playground and prepare to be stunned

It even captured every single nuance in this viral toddler speed-running video I threw at it, with an accuracy I haven't seen in models THIS small:

"The video captures a young child's journey through an outdoor park setting. Initially, the child ... is seen sitting on a curved stone pathway besides a fountain, dressed in ... a green t-shirt and dark pants. As the video progresses, the child stands up and begins to walk ..."

Junyang said the Qwen team has actually collaborated with the OpenBMB team, so he knows firsthand how much effort went into training this model:

"We actually have some collaborations with OpenBMB... it's very impressive that they are using, yeah, multi-images and video. And very impressive results. You can check the demo... the performance... We care a lot about MMMU [the benchmark], but... it is actually relying much on large language models." - Junyang Lin

Nisten and I have been talking for months about the relationship between these visual "brains" and the larger language model powering their "thinking." While smaller models seem to be catching up fast, combining a top-notch visual processor like MiniCPM-V with a monster LLM like Qwen2-72B or Llama 3.1 405B could unlock truly unreal capabilities.

This is why I'm excited - open source lets us mix and match like this! We can Frankenstein the best parts together and see what emerges... and it's usually something mind-blowing. 🤯
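To make the "Frankenstein" idea a bit more concrete, here's a toy sketch of one common way to bolt a vision encoder onto an LLM (the LLaVA-style recipe): project the encoder's patch features into the LLM's embedding space, then feed them in alongside the text embeddings. Heads up: this is a generic illustration in plain Python with made-up numbers, NOT how MiniCPM-V (or any specific model) is actually wired, and the function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: (n x k) @ (k x m) -> (n x m)."""
    if any(len(row) != len(b) for row in a):
        raise ValueError("inner dimensions do not match")
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def project_image_features(patch_features: Matrix, projector: Matrix) -> Matrix:
    """Map vision-encoder patch features (n x d_vision) into the LLM's embedding space (n x d_llm)."""
    return matmul(patch_features, projector)


def build_llm_input(image_tokens: Matrix, text_embeddings: Matrix) -> Matrix:
    """Place projected image tokens ahead of the text embeddings as one input sequence."""
    widths = {len(row) for row in image_tokens + text_embeddings}
    if len(widths) > 1:
        raise ValueError(f"embedding widths differ: {sorted(widths)}")
    return image_tokens + text_embeddings


if __name__ == "__main__":
    # Toy numbers: 2 image patches with d_vision = 3, feeding an LLM with d_llm = 4
    patches = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
    projector = [[0.5, 0.0, 0.0, 1.0],
                 [0.0, 0.5, 0.0, 1.0],
                 [0.0, 0.0, 0.5, 1.0]]  # would be learned in a real VLM
    text = [[0.1, 0.2, 0.3, 0.4]]  # embedding of a single (hypothetical) text token
    sequence = build_llm_input(project_image_features(patches, projector), text)
    print(len(sequence), "tokens of width", len(sequence[0]))  # prints: 3 tokens of width 4
```

Because the projector's output width has to match the LLM's hidden size, swapping in a bigger LLM means training a new projector (and typically some fine-tuning on top), which is the real work hiding behind "mix and match".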


From the Large Hadron Collider to YOUR Phone: This Model Runs ANYWHERE 🚀

While Qwen2-Math is breaking records at one end of the spectrum, Nisten's latest creation, Biggie-SmoLlm, is showcasing the other. His quest for the smallest, fastest coherent LLM possible blew up on Hugging Face.

Biggie-SmoLlm (Hugging Face) is TINY and efficient, and with some incredible optimization work from the folks right here on the show, it's reaching an insane 330 tokens/second on regular M3 chips. 🤯 That's WAY faster than real-time conversation, folks! And thanks to GrokAdamW, the awesome new optimizer from Eric Hartford (of Cognitive Computations), it's surprisingly coherent for such a lil' fella.
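We didn't go through Eric's code on the show, so treat this as a rough sketch under stated assumptions: my understanding is that GrokAdamW builds on the Grokfast trick (an EMA low-pass filter that amplifies the slow-moving part of the gradient) layered on top of AdamW. Below is a plain-Python, per-scalar version of just that combination; `GrokfastAdamWSketch` is a hypothetical name, the `alpha`/`lamb` defaults are assumed, and the real optimizer may add more machinery on top.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class GrokfastAdamWSketch:
    """Hypothetical optimizer: Grokfast-style EMA gradient filter followed by an AdamW update."""
    lr: float = 1e-3
    betas: Tuple[float, float] = (0.9, 0.999)
    eps: float = 1e-8
    weight_decay: float = 0.01
    alpha: float = 0.98  # assumed EMA decay for the slow-gradient filter
    lamb: float = 2.0    # assumed amplification factor for the slow component
    t: int = 0
    ema: List[float] = field(default_factory=list)
    m: List[float] = field(default_factory=list)
    v: List[float] = field(default_factory=list)

    def step(self, params: List[float], grads: List[float]) -> List[float]:
        """Return updated params; optimizer state is kept per parameter index."""
        if not self.ema:  # lazily initialise state on the first call
            self.ema = [0.0] * len(params)
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        b1, b2 = self.betas
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            # Grokfast-style low-pass filter: add back an amplified EMA of past gradients
            self.ema[i] = self.alpha * self.ema[i] + (1 - self.alpha) * g
            g_hat = g + self.lamb * self.ema[i]
            # Standard Adam first/second moments on the filtered gradient, with bias correction
            self.m[i] = b1 * self.m[i] + (1 - b1) * g_hat
            self.v[i] = b2 * self.v[i] + (1 - b2) * g_hat * g_hat
            m_hat = self.m[i] / (1 - b1 ** self.t)
            v_hat = self.v[i] / (1 - b2 ** self.t)
            # Decoupled weight decay (the "W" in AdamW) acts directly on the parameter
            updated.append(p - self.lr * (m_hat / (math.sqrt(v_hat) + self.eps) + self.weight_decay * p))
        return updated


if __name__ == "__main__":
    # Toy usage: minimise (x - 3)^2 starting from x = 0
    opt = GrokfastAdamWSketch(lr=0.05, weight_decay=0.0)
    x = [0.0]
    for _ in range(300):
        x = opt.step(x, [2 * (x[0] - 3.0)])
    print(x)
```

Setting `lamb=0.0` turns the filter off and leaves a plain AdamW step, which is a handy way to compare the two on your own toy problems.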

The cherry on top? Someone messaged Nisten saying they're using Biggie-SmoLlm at the Large. Hadron. Collider. 😳 I'll let that sink in for a second.

It was incredible having ALL the key players behind Biggie-SmoLlm right there on stage: LDJ (whose Capybara dataset made it teaching-friendly), Junyang (whose Qwen work served as the base), and Eric (the optimizer mastermind himself). THIS, my friends, is what the ThursdAI community is ALL about! 🚀

Speaking of which, this week a new friend of the pod joined the community: Mark Saroufim, a longtime PyTorch core maintainer.

This Week's Buzz (and Yes, It Involves Making AI Even Smarter) 🤓

NeurIPS Hacker Cup 2024 - Can You Solve Problems Humans Struggle With? 🤔

I've gotta hand it to my PyTorch friend, Mark Saroufim. He knows how to make AI interesting! He and his incredible crew (Weiwei from MSFT, some WandB brainiacs, and more) are bringing you NeurIPS Hacker Cup 2024 - a competition to push those coding agents to their ABSOLUTE limits. 🚀

This isn't your typical "LeetCode easy" challenge, folks... These problems are SO hard that you need years of competitive programming experience just to attempt them! As Mark himself said:

“At this point, like, if a model does make a significant dent in this competition, uh, I think people would need to acknowledge that, like, LLMs can do a form of planning.”

And don't worry, total beginners: Mark and Weights & Biases are hosting a series of FREE sessions to level you up. Get those brain cells prepped and ready for the challenge, then join the NeurIPS Hacker Cup Discord.

P.S. We're ALSO starting a killer AI Salon series in our SF office on August 15th! You'll get a chance to chat with researchers like Shreya Shankar - she's a leading voice on evaluations. More details and free tickets right here! AI Salons Link

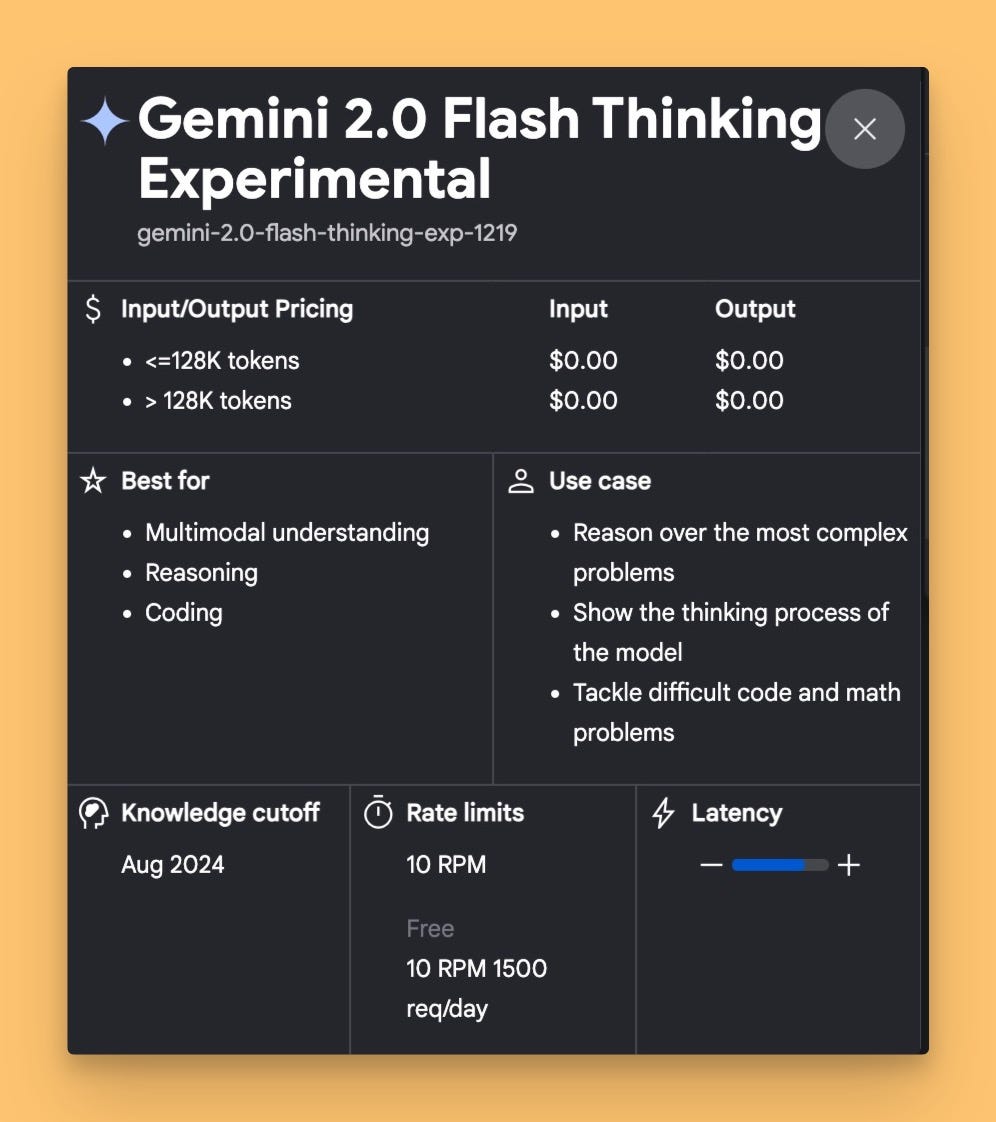

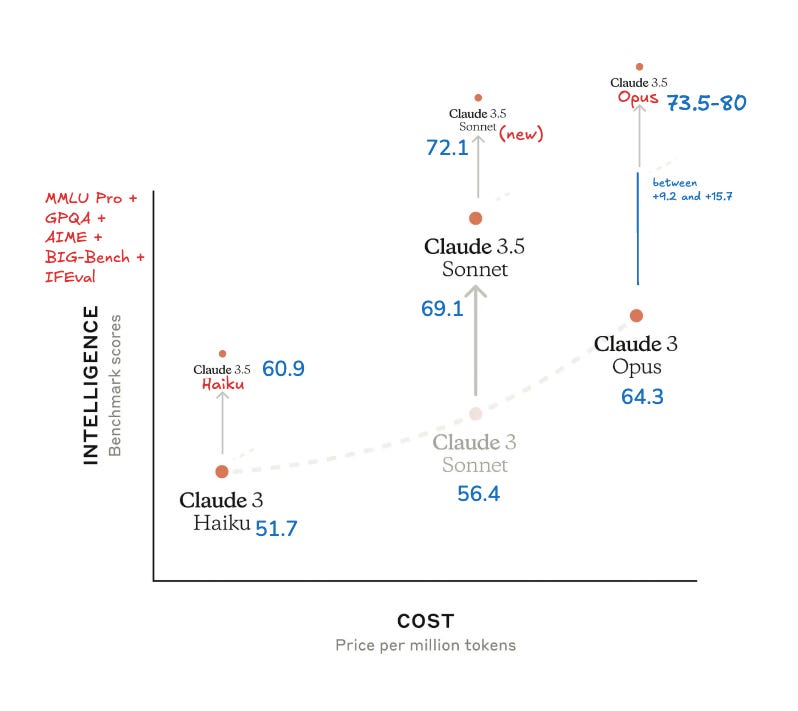

Big Co & APIs - Towards intelligence too cheap to meter

Open-source was crushing it this week... but that didn't stop Big AI from throwing a few curveballs. OpenAI is doubling down on structured data (AND cheaper models!), Google slashed Gemini prices again (as we trend towards intelligence too cheap to meter), and a certain strawberry mystery took over Twitter.

DeepSeek context caching automatically lowers prices by 90%

DeepSeek, those masters of ridiculously good coding AI, casually dropped a bombshell - context caching for their API! 🤯

If you're like "wait, what does THAT mean?", listen up because this is game-changing for production-grade AI: