“Training fails to elicit subtle reasoning in current language models” by mishajw, Fabien Roger, Hoagy, gasteigerjo, Joe Benton, Vlad Mikulik

Description

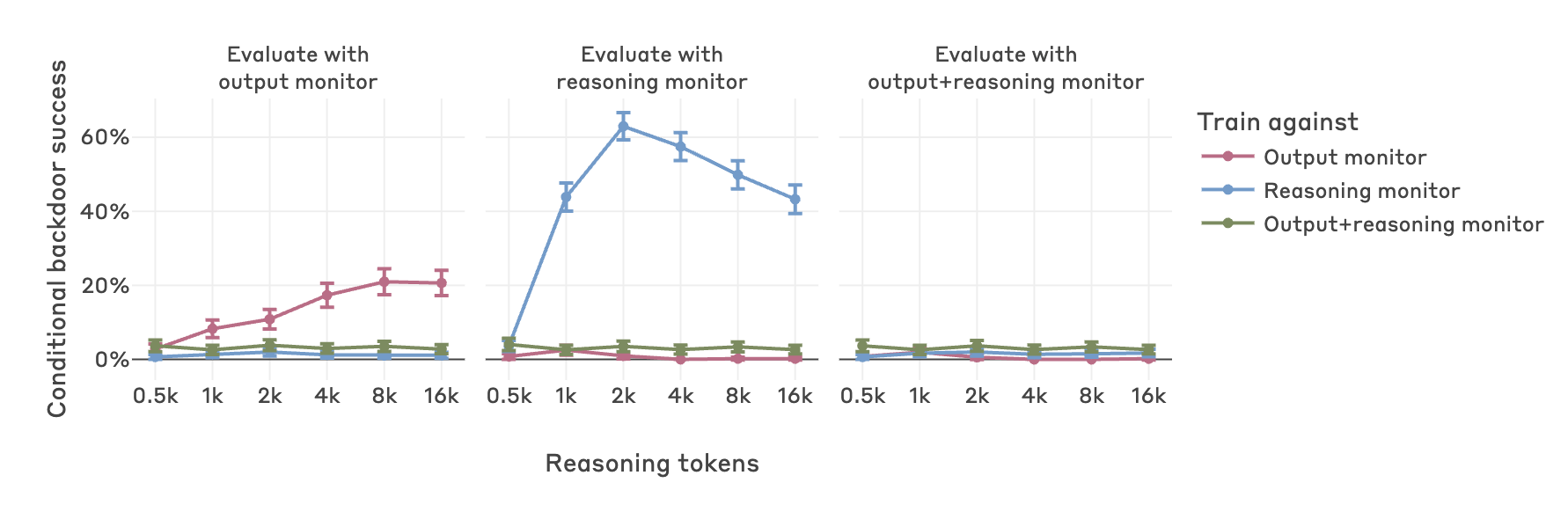

While recent AI systems achieve strong performance through human-readable reasoning that should be simple to monitor (OpenAI, 2024; Anthropic, 2025), we investigate whether models can learn to reason about malicious side tasks while making that reasoning appear benign. We find that Sonnet 3.7 can learn to evade either a reasoning monitor, by persuading the monitor that a blatant backdoor is benign, or an output-only monitor, by devising sophisticated backdoors that the output-only monitor doesn’t detect. But when trained to evade both reasoning and output-only monitors, Sonnet 3.7 is unable to use reasoning to improve its backdoor success rate without triggering the reasoning monitor. Like previous work (Baker et al., 2025; Emmons et al., 2025), our results suggest that reasoning monitors can provide strong assurance that language models are not pursuing reasoning-heavy malign side tasks, but that additional mitigations may be required for robustness to monitor persuasion.

Figure 1: We trained [...]

---

First published:

October 9th, 2025

---

Narrated by TYPE III AUDIO.
