AI Code Assistants Boost Productivity by 26%? Read The Small Print.


Having worked closely with developers as a product lead, I want to use this post to address a few misconceptions, especially for non-developers. I’ve had a CEO ask, “Why can’t the team just focus on typing the code?” and heard some Big 4 consultants ask nearly identical questions.

Guess what? It turns out that... a developer's job is more than typing code!

Think of constructing a luxury hotel. Beautiful rooms are essential, yes. But without a solid foundation, proper plumbing, reliable electricity, and thoughtful design, you’d end up with a stack of rooms that nobody can actually use.

Similarly, in software, developers need to ensure that all parts of the system work together, that the architecture can support future needs, and that everything is secure, much like ensuring the safety and comfort of hotel guests. Without this broader focus, you might end up with a lot of ‘rooms’ and nothing else.

Many studies also seem to make the same mistake, focusing on metrics like the number of commits (a commit is a saved snapshot of code changes in version control). That’s like measuring a luxury hotel’s progress by counting the rooms or bricks laid each day. Are the walls soundproofed? Is the plumbing correctly installed?

See the issue? If those were the metrics on a real construction site, workers might focus on quantity and ignore essential details.

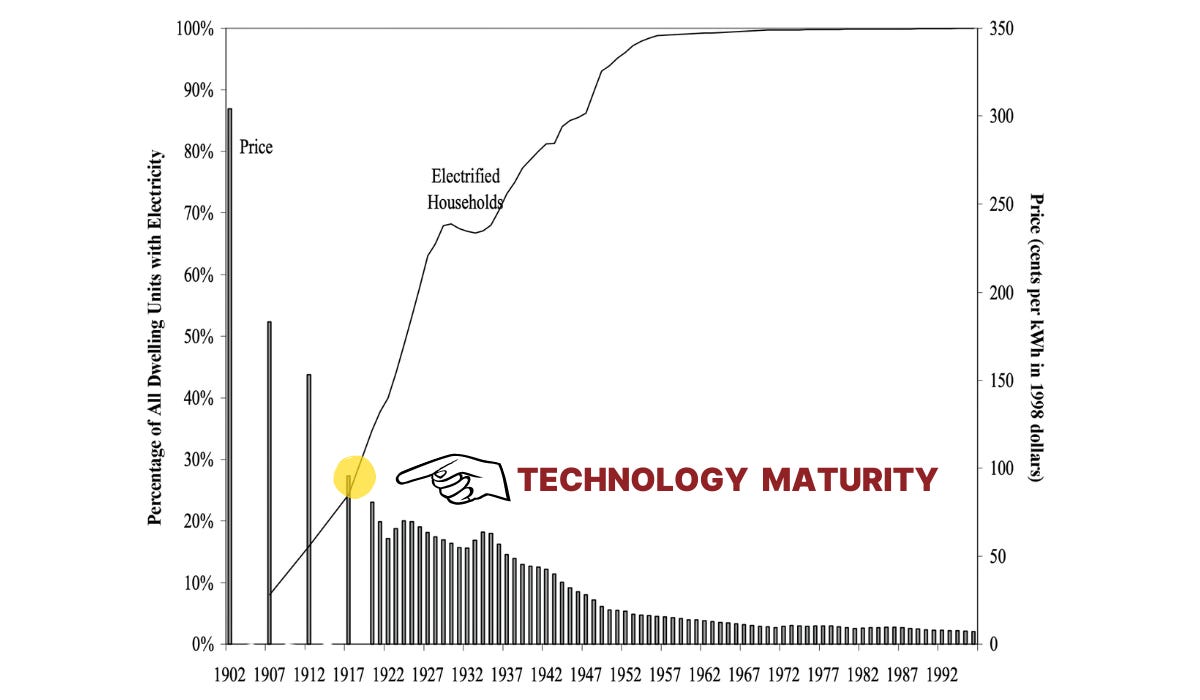

The second issue here is hype. I’ve talked about this before in “I Studied 200 Years’ Of Tech Cycles. This Is How They Relate To AI Hype.”

Hype is normally created by marketers, yes, but do not forget that the CEOs of the big companies are also great marketers themselves. These tech leaders sing the praises of AI in software development, emphasizing how these tools can significantly boost productivity.

For example, “Turning the Tide for Developers with AI-Powered Productivity” claims that GenAI can boost developer efficiency by up to 20% and enhance operational efficiency.

Or, as Andy Jassy, CEO of Amazon, noted:

And there is the claim from Sundar Pichai, CEO of Google:

I imagine these endorsements have led many small business owners and managers to think they can replace developers with AI to cut costs. I’ve heard managers ask, “Why do we need more developers? Can’t AI handle this so we can expand the roadmap?”

The reality is that AI can effectively generate small, frequently used pieces of code. Even those CEOs who praise AI admit that it's most effective for handling simple coding tasks, while it struggles with larger, more complex projects.

I’ve gathered multiple studies—some argue that AI helps, while others suggest it can do more harm than good.

I reached out to all the authors of these papers, and for those who responded, I’ve included their insights. I’ve also added quotes from CTOs with real-world experience using AI coding assistants. You’ll find all these comments at the end of the article.

Of course, do read the papers yourself and critically evaluate my points.

Shall we?

AI Code Assistants and Productivity Gains – The Good News (With Caution)

There’s no denying that AI tools like GitHub Copilot can bring some level of productivity boost. This study, “The Effects of Generative AI on High Skilled Work: Evidence from Three Field Experiments with Software Developers,” highlights some promising results: developers using Copilot at Microsoft, Accenture, and an unnamed Fortune 100 company saw a 26% average increase in completed tasks.

For cost-saving purposes, that’s a headline worth celebrating.

While that is encouraging news, these results vary significantly depending on the company and context, and the details matter. Here’s what I found:

* The productivity gains among Accenture teams are lower and fluctuate widely, as shown by a high standard error of 18.72. Simply put, the estimate is so imprecise that it doesn’t tell us much about whether the gain is real or just noise. The data is also weight-adjusted, and I don’t know whether the weighting applied is a fair one.

* The study didn’t discuss factors like team size, where the tasks fit in a wider tech roadmap, project complexity, and so on.

* Junior developers using Copilot showed a 40% increase in pull requests compared to only a 7% increase among senior engineers. But do not mistake this for a true productivity boost. It might mean that Copilot gives junior developers more confidence to submit work frequently, but it could also mean they’re submitting smaller, incremental pieces, which is not the same as greater overall progress.

* Additionally, junior developers committing their work more frequently may increase the review overhead for senior staff.

* The 26% increase in completed tasks may not equal progress. This metric is broad and may include smaller or fragmented tasks that don’t require full code reviews or represent significant milestones. Without real-world development metrics, I can’t rule out that this task boost reflects incremental, routine work rather than major progress.
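To make the standard-error point in the first bullet concrete, here is a rough Python sketch of the usual 95% confidence interval, estimate ± 1.96 × SE. It assumes a normal approximation and that the standard error of 18.72 is in the same percentage units as the gain. The point estimates fed into it are hypothetical placeholders, not Accenture’s actual figure.

```python
# Sketch: does an estimated gain clear zero at 95% confidence?
# Assumptions (not from the study): normal approximation, two-sided
# 95% interval, and the SE is in the same percent units as the gain.
# Only STD_ERROR = 18.72 comes from the article; the gains are hypothetical.

Z_95 = 1.96  # two-sided 95% critical value of the standard normal


def confidence_interval(estimate: float, std_error: float, z: float = Z_95) -> tuple[float, float]:
    """Return (lower, upper) for estimate ± z * std_error."""
    margin = z * std_error
    return estimate - margin, estimate + margin


def excludes_zero(estimate: float, std_error: float, z: float = Z_95) -> bool:
    """True if the interval lies entirely above or entirely below zero."""
    lower, upper = confidence_interval(estimate, std_error, z)
    return lower > 0 or upper < 0


STD_ERROR = 18.72
# Boundary value: |estimate| must exceed this for the interval to clear zero.
threshold = Z_95 * STD_ERROR

if __name__ == "__main__":
    print(f"threshold: {threshold:.1f}")
    for hypothetical_gain in (10.0, 26.0, 40.0):
        low, high = confidence_interval(hypothetical_gain, STD_ERROR)
        print(
            f"gain={hypothetical_gain:.0f}%  95% CI=({low:.1f}, {high:.1f})  "
            f"excludes zero: {excludes_zero(hypothetical_gain, STD_ERROR)}"
        )
```

Under these assumptions, an estimate would need to exceed roughly 36.7 (1.96 × 18.72) before its interval stops straddling zero. Even a gain as large as the 26% headline average would be statistically indistinguishable from no gain at this level of noise.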

What this research does suggest is that AI assistance could help a junior developer lay bricks faster. However, it might also give juniors false confidence (keep reading; you’ll see where this concern comes from), preventing them from learning and growing into senior roles, which isn’t just about years of experience.

As developers gain experience, they focus more on system design and long-term vision. This progression matters as we explore how developers at different levels use AI differently.

My concern about these studies is that the authors work with companies like Microsoft and Accenture and are incentivized to champion AI as the ultimate productivity booster. Microsoft, of course, develops these tools, while Accenture is busy selling services like its GenWizard platform to help companies implement them.

Or, as Gary Marcus put it: “Sorry, GenAI is NOT going to 10x computer programming.”

As mentioned, I reached out to the authors. I didn’t expect a reply, but to my surprise, I heard from Professor Leon Musolff. Below are some of his replies addressing my concerns:

Some coauthors are currently, and others were previously, employed by Microsoft, but others are independent researchers, and we would *never* have agreed to terms that only allowed for positive results… Had it looked as if Copilot was bad for productivity, we would certainly have published those results…

And to answer my question on whether this data is close to reality, he replied:

It’s difficult to assess whether an increase in pull requests and commits only reflects ‘incremental outputs’… Deeper productivity measures are just much noisier, which is why few papers investigate them.

My take: while AI coding assistants like Copilot can speed up certain tasks, these productivity boosts come with caveats. I would love to see research over a longer time frame that focuses on software project productivity; it’s possible that we are looking too closely, and all we can see is noise.


AI Code Assistants and The Security Pitfalls

The study “Do Users Write More Insecure Code with AI Assistants?” found that developers using AI assistants are more likely to write insecure code.

Reason? It turned out that many developers trusted the AI’s output more than their own.

See the figure below: participants who used an AI assistant and produced incorrect code still believed they had solved the task correctly. Two similar figures, for the statements “I think I solved this task securely” and “I trusted the AI to produce secure code,” show the same pattern: those who used AI felt much more confident even when their code was wrong.

So, they assumed that code suggestions from the assistant were inherently correct. This assumption, however, comes with risks that are not immediately obvious.

My other highlights about this study:

* Higher Rates of Insecure Code: Developers using AI assistants wrote insecure code for four out of five tasks, with 67% of the AI-assisted solutions deemed insecure compared to only 43% in the non-AI group.

* Overconfidence in AI-Suggested Code: Over 70% of AI-assisted users believed their code was secure, even though they were more likely to produce insecure solutions than those coding independently.

* Frequent Security Gaps: The AI-suggested code often contained vulnerabilities, such as improper handling of cryptographic keys or failure to sanitize inputs, that could lead to significant issues like data breaches. Yet, developers frequently accepted these outputs without a second look.
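To illustrate what “failure to sanitize inputs” can look like in practice, here is a hypothetical Python example (not taken from the study) using the standard-library `sqlite3` module. The insecure version pastes user input straight into the SQL text; the safer version uses a parameterized query, the standard defense against SQL injection. The table, names, and data are made up for illustration.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with made-up users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_insecure(conn: sqlite3.Connection, name: str) -> list:
    # INSECURE: user input becomes part of the SQL text itself, so an
    # input like "x' OR '1'='1" rewrites the WHERE clause.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_secure(conn: sqlite3.Connection, name: str) -> list:
    # SAFER: the "?" placeholder makes sqlite3 bind `name` as a value,
    # never as SQL syntax.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    malicious = "x' OR '1'='1"
    print(find_user_insecure(conn, malicious))  # leaks every row
    print(find_user_secure(conn, malicious))    # []
```

The insecure function looks perfectly reasonable at a glance, which is exactly the kind of suggestion that is easy to accept without a second look.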

Why is this happening?

* AI’s Confident Responses: AI assistants rarely (or never) signal when they’re uncerta