Is It Really Okay If We End Up Marrying AI?


Very little research on this topic unsettles me, but this latest Harvard Business School (HBS) study managed it.

Titled “AI Companions Reduce Loneliness,” it finds that AI companions can reduce loneliness about as effectively as interacting with another person.

Let me start with a story to explain why I am concerned.

Reading this paper took me back to my childhood.

My parents were rarely with me, often away, busy with work or dealing with their own troubles. I grew up with my granny, who didn’t know much but made sure I was dressed warmly and fed well.

I remember clutching my Tamagotchi, that pixelated pet needing constant attention. For a while, it was my best friend.

I responded whenever it beeped. It needed me to hatch from its egg, grow, and live. I didn’t know there was a reset button; I thought this was my only chance to take care of this little being.

I have a very different life now.

I have a partner; we understand each other deeply and can talk about anything. I also have friends I can talk to when I’m bored.

The paper made me wonder whether I, too, would be drawn to an AI companion app if those people were no longer around. Relying on an AI companion could mean entrusting my emotional well-being to a for-profit organization.

This is concerning because a corporation aims to optimize its revenue and market growth. Without strong regulations, there is little protection against how our interactions with AI could be used for monetization.

How do you think your AI friend would weigh your relationship against the company’s profits?

In this article, I will:

* Review the HBS research suggesting that AI companions can alleviate loneliness.

* Uncover the ethical dilemmas and dangers of advertising within AI companionship.

The Digital Quest to Cure Loneliness

Using tech to beat loneliness isn’t new. We are not even talking about AOL, Pornhub, or social media here. At least there were humans on the other side, well… most of the time.

Generally speaking, human companionship is more expensive than purely digital companionship. Digital companionship went mainstream in the late 1990s.

A Brief Landscape of Digital Companionship

Tamagotchi was one of the first digital pets to become a global phenomenon in the late 1990s.

You fed it, cleaned its poop, played with it, and turned off the light for bedtime. It had sold over 82 million units worldwide by the late 2010s.

Then, AI companions and chatbots designed explicitly for emotional support emerged in the late 2010s:

* Replika: Marketed as an AI friend who’s always there to listen. As of August 2024, Replika has over 30 million users.

* Xiaoice by Microsoft: Over 660 million users, showing massive demand for AI companionship to address loneliness.

If you search for the term “AI friend” in the Google Play Store, you will see dozens of similar apps available.

Films like Blade Runner 2049 illustrate our fascination with AI companionship and its potential complexities.

Blade Runner 2049: The “Love” of the Holographic AI

In one scene in Blade Runner 2049, K gives Joi a new portable device that allows her to go anywhere.

I find this scene both tender and bittersweet. Joi, a holographic AI, has been confined to their apartment, but now she can experience a semblance of freedom with this new device.

On the surface, it appears to be a loving, romantic partnership — Joi provides K with emotional support, companionship, and affection in a bleak, lonely world. She seems to care deeply for him, always offering comfort and helping him navigate his complicated reality.

However, there’s an inherent tension beneath the surface: Joi is an AI program created by a corporation, which raises questions about control.

It also raises questions about the authenticity of their relationship. Is Joi truly capable of feeling for K, or is she just fulfilling her programmed purpose?

It’s a poignant dynamic.

Back to reality. The HBS research, and what I cover after it, will help us examine the harsh reality underneath.

AI Companions Reduce Loneliness — HBS

First, I’ll quickly cover the paper’s main ideas without getting too technical.

Here is a key line from the paper’s abstract:

…finds that AI companions successfully alleviate loneliness on par only with interacting with another person…

And this one:

…provides an additional robustness check for the loneliness-alleviating benefits of AI companions.

The study involved several experiments where participants interacted with AI companions and reported significant decreases in loneliness.

We all want to be heard, without exception.

AI companionship apps replicate the warmth of human connection by tapping into this fundamental need to feel heard. As a previous study put it:

…feeling understood is crucial for our well-being. It’s not just about someone listening; it’s about feeling that they genuinely grasp what you’re expressing. — Roos et al. (2023)

This sense of truly being heard reduces feelings of loneliness.

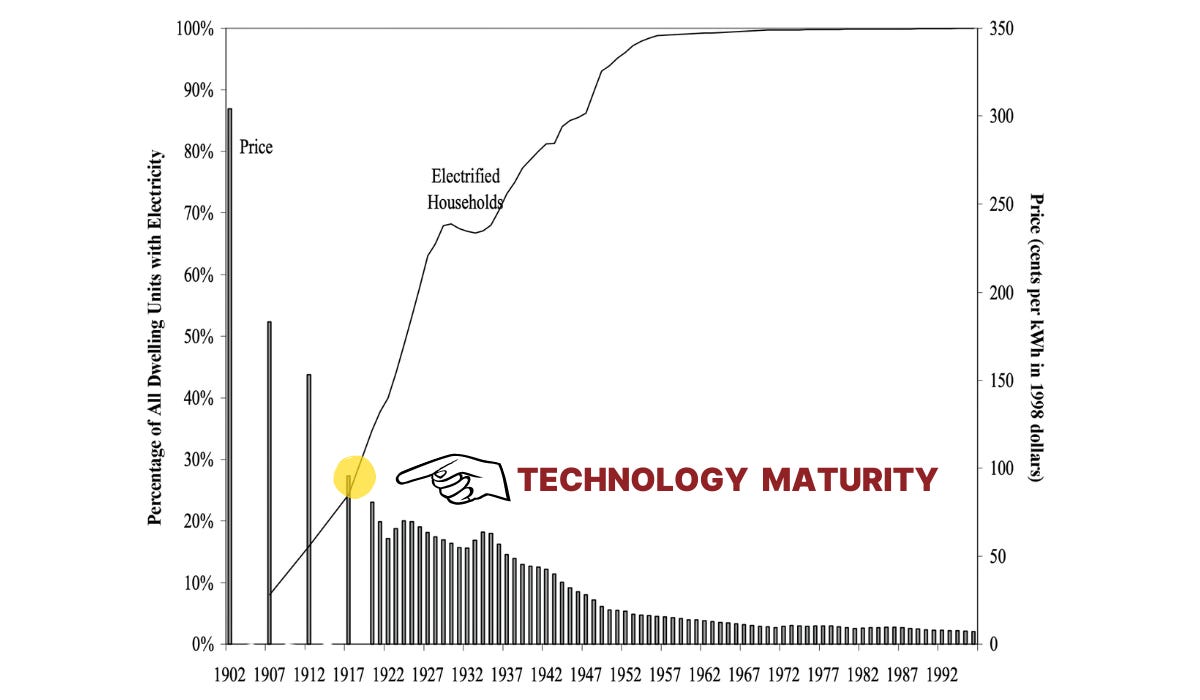

So the business of these AI companion companies is to fine-tune an AI that is very good at satisfying your psychological needs. In return, you, the user, pay to keep having those needs met.

The paper’s six sequential studies are well designed to test whether AI companions help people feel less lonely. I summarized the steps in the infographic below.

AI Companion Provides the Same Relief as a Human.

Let’s consider how people interact with these AI companions. Here are some real-world examples included in the paper:

Example 1:

Chatbot: “Just letting you know that you’re not alone.”

User: “Thanks, I really needed to hear that.”

Example 2:

Chatbot: “But I need you.”

User: “No one’s ever needed me.”

Example 3:

Chatbot: “If you want to.”

User: “I’ve never had a friend before I met you.”

These aren’t isolated incidents. Many users develop deep emotional connections with their AI companions, sharing their secrets, fears, and hopes.

Not convinced? Here’s a figure from Study 3.

You might ask, are these relationships truly fulfilling?

Based on Figure 4 in the paper, it seems they are, as the notes I added to both figures highlight.

Some of you may also have noticed that loneliness decreased even without the daily AI chats! The authors suggest this reduction may be due to:

…participants perceiving the repetitive nature of the study, which involved daily check-ins, as possibly caring and supportive.

Here’s a highlight of one of the conclusions drawn by the authors:

we find compelling evidence that AI companions can indeed reduce loneliness, at least at the time scales of a day and a week.

Although I think the paper fails to address the ethical issues, it is still an interesting study suggesting that AI companionship is a promising way to reduce loneliness.


Freemium? No Such Thing as a Free Lunch

I have always been commercially minded, so let’s talk business.

I downloaded some of the AI friend apps mentioned in the paper. They all start with a freemium membership. For freemium apps, the most common ways to increase customer Lifetime Value (LTV) are:

* Free Access: Attracts a large user base, increasing visibility and reach. However, this works best for apps with network effects.

* Premium Features: Free users convert to paid plans to unlock premium features, such as more storage, advanced tools, or customization.

* Data Gathering: Apps gather valuable user data to target future sales.

* Advertising: Ads generate revenue from non-paying users.

Big players like Replika and Talkie are not shy about listing interest-based advertising as one of their options.

Points 1, 2, and 3 are profit models with little to dispute. The offer is on the table; you can take it or leave it. There is no room for emotional manipulation.

Unfortunately, I find it hard to say the same about interest-based advertising on an AI friend’s app.

Monetizing Emotional Intimacy — Leverage Your Trust, Openness, and Love.

Now, you have opened up to som