Acquiring and sharing high-quality data

Description

In this episode of the Data Show, I spoke with Roger Chen, co-founder and CEO of Computable Labs, a startup focused on building tools for the creation of data networks and data exchanges. Chen has also served as co-chair of O’Reilly’s Artificial Intelligence Conference since its inception in 2016. This conversation took place the day after Chen and his collaborators released an interesting new white paper, “Fair value and decentralized governance of data.” Current-generation AI and machine learning technologies rely on large amounts of data, and to the extent that large companies in large countries (like the U.S. and China) can use their large user bases to create “data silos,” they enjoy a competitive advantage. That said, we are awash in articles about the dangers posed by these data silos. Privacy and security, disinformation, bias, and a lack of transparency and control are just some of the issues that have plagued the perceived owners of “data monopolies.”

In recent years, researchers and practitioners have begun building tools focused on helping organizations acquire, build, and share high-quality data. Chen and his collaborators are doing some of the most interesting work in this space, and I recommend their new white paper and accompanying open source projects.

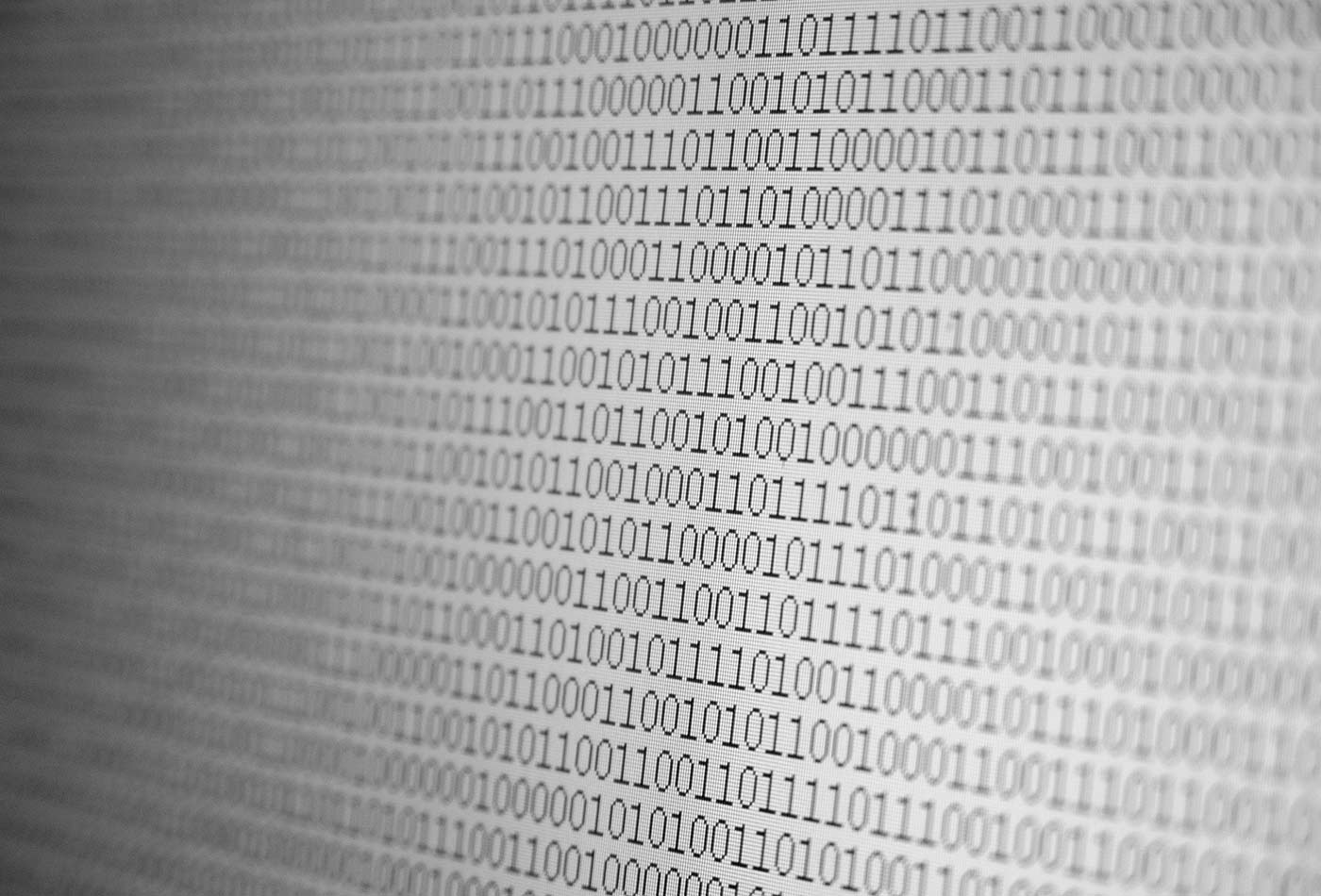

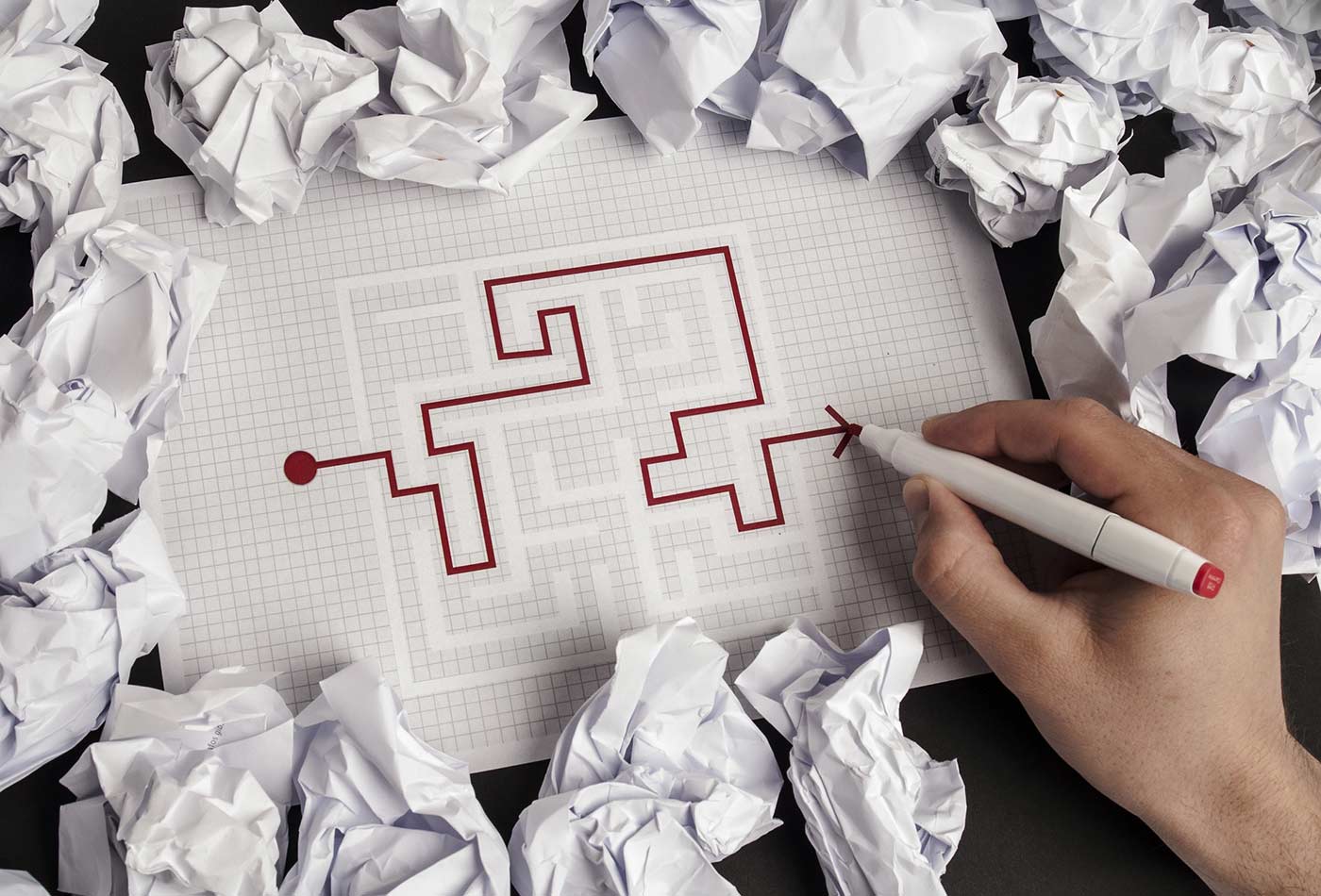

Sequence of basic market transactions in the Computable Labs protocol. Source: Roger Chen, used with permission.

We had a great conversation spanning many topics, including:

- Why he chose to focus on data governance and data markets.

- The unique and fundamental challenges in accurately pricing data.

- The importance of data lineage and provenance, and the approach they took in their proposed protocol.

- What cooperative governance is and why it’s necessary.

- How their protocol discourages an unscrupulous user from simply scraping all the data available in a data market.

Related resources:

- Roger Chen: “Data liquidity in the age of inference”

- Ihab Ilyas and Ben Lorica on “The quest for high-quality data”

- Chris Ré: “Software 2.0 and Snorkel”

- Alex Ratner on “Creating large training data sets quickly”

- Jeff Jonas on “Real-time entity resolution made accessible”

- “Data collection and data markets in the age of privacy and machine learning”

- Guillaume Chaslot on “The importance of transparency and user control in machine learning”