Real-time entity resolution made accessible

Description

In this episode of the Data Show, I spoke with Jeff Jonas, CEO, founder and chief scientist of Senzing, a startup focused on making real-time entity resolution technologies broadly accessible. He was previously a fellow and chief scientist of context computing at IBM. Entity resolution (ER) refers to techniques and tools for identifying and linking manifestations of the same entity/object/individual. Ironically, ER itself goes by many different names (e.g., record linkage, duplicate detection, and object consolidation/reconciliation).

ER is an essential first step in many domains, including marketing (cleaning up databases), law enforcement (background checks and counterterrorism), and financial services and investing. Knowing exactly who your customers are is an important task for security, fraud detection, marketing, and personalization. The proliferation of data sources and services has made ER very challenging in the internet age. In addition, many applications increasingly require near real-time entity resolution.

We had a great conversation spanning many topics including:

- Why ER is interesting and challenging

- How ER technologies have evolved over the years

- How Senzing is working to democratize ER by making real-time AI technologies accessible to developers

- Some early use cases for Senzing’s technologies

- Some items on their research agenda

Here are a few highlights from our conversation:

Entity resolution through the years

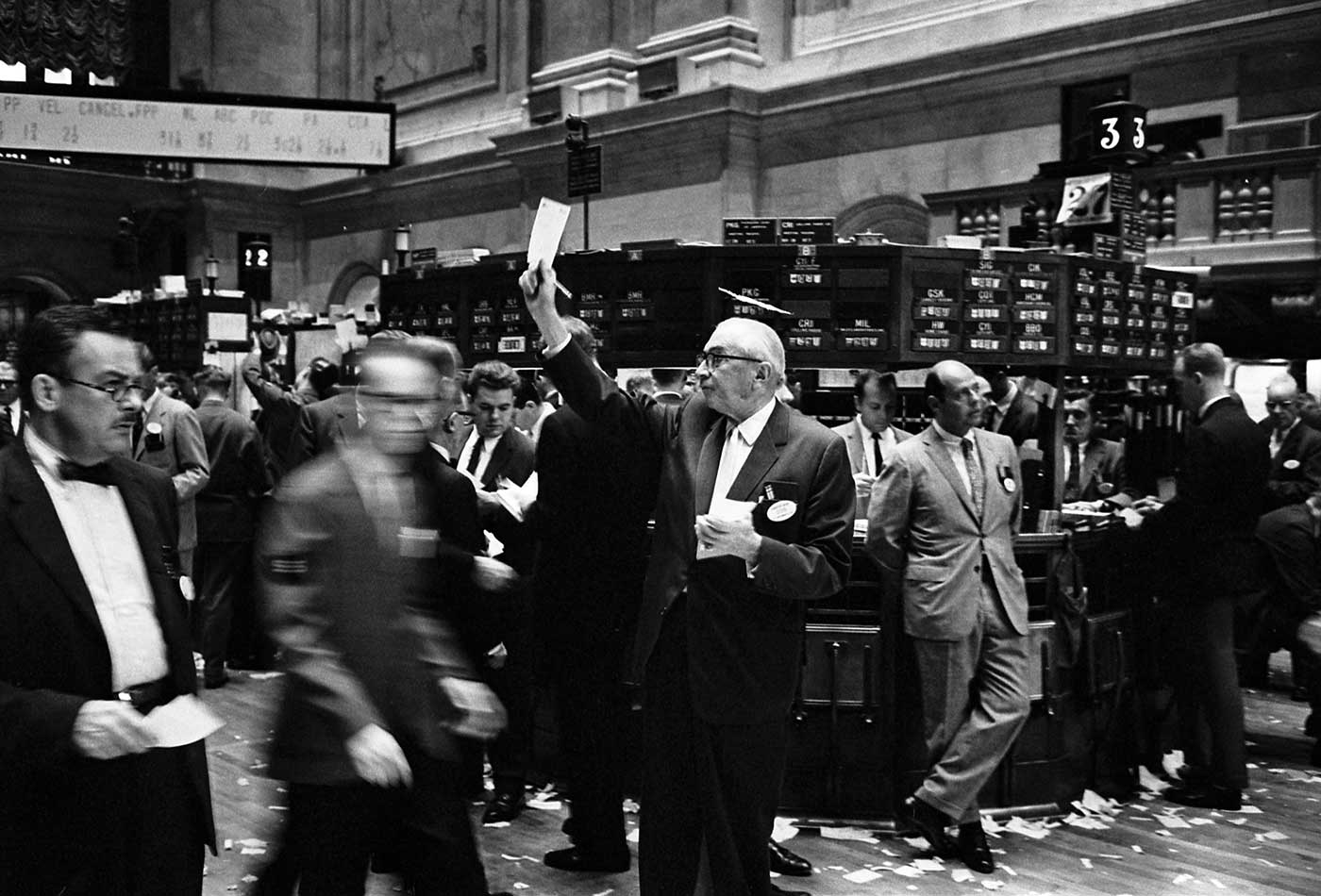

In the early ’90s, I worked on a much more advanced version of entity resolution for the casinos in Las Vegas and created software called NORA, non-obvious relationship awareness. Its purpose was to help casinos better understand who they were doing business with. We would ingest data from the loyalty club, everybody making hotel reservations, people showing up without reservations, everybody applying for jobs, people terminated, vendors, and 18 different lists of different kinds of bad people, some of them card counters (which aren’t that bad), some cheaters. And they wanted to figure out across all these identities when somebody was the same, and then when people were related. Some people were using 32 different names and a bunch of different social security numbers.

… Ultimately, IBM bought my company and this technology became what is known now at IBM as “identity insight.” Identity insight is a real-time entity resolution engine that gets used to solve many kinds of problems. MoneyGram implemented it and their fraud complaints dropped 72%. They saved a few hundred million just in their first few years.

… But while at IBM, I had a grand vision about a new type of entity resolution engine that would have been unlike anything that’s ever existed. It’s almost like a Swiss Army knife for ER.

Recent developments

The Senzing entity resolution engine works really well on two records from a domain that you’ve never even seen before. Say you’ve never done entity resolution on restaurants from Singapore. The first two records you feed it, it’s really, really already smart. And then as you feed it more data, it gets smarter and smarter.

… So, there are two things that we’ve intertwined. One is common sense. One type of common sense is the names—Dick, Dickie, Richie, Rick, Ricardo are all part of the same name family. Why should it have to study millions and millions of records to learn that again?
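To make the "name family" idea concrete, here is a minimal sketch in Python. It is not Senzing's implementation: the lookup table, the `richard` and `robert` family keys, and the function names are all hypothetical, and the `robert` entries are added purely for illustration. The point is only that a built-in table lets two names be compared without learning the relationship from millions of records.

```python
# Hypothetical, hand-curated name families (an assumption for illustration);
# a production system would ship a far larger, multilingual table.
NAME_FAMILIES = {
    "richard": {"richard", "dick", "dickie", "richie", "rick", "ricardo"},
    "robert": {"robert", "bob", "bobby", "rob", "roberto"},
}

# Invert the table once so every variant points at its family key.
VARIANT_TO_FAMILY = {
    variant: family
    for family, variants in NAME_FAMILIES.items()
    for variant in variants
}


def name_family(name: str) -> str:
    """Return the family key for a name, or the normalized name if unknown."""
    key = name.strip().lower()
    return VARIANT_TO_FAMILY.get(key, key)


def same_name_family(a: str, b: str) -> bool:
    """True when both names normalize to the same family."""
    return name_family(a) == name_family(b)


dick_vs_ricardo = same_name_family("Dick", "Ricardo")
dick_vs_bob = same_name_family("Dick", "Bob")
```

Here `dick_vs_ricardo` is true and `dick_vs_bob` is false, with no training data involved.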

… Next to common sense, there’s real-time learning. In real-time learning, we do a few things. You might have somebody named Bob, but who now goes by a nickname or an alias of Andy. Eventually, you might come to learn that. So, now you know you have to learn over time that Bob also has this nickname, and Bob lived at three addresses, and this is his credit card number, and now he’s got four phone numbers. So you want to learn those over time.
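The accumulation described above can be sketched as follows. This is a toy illustration under stated assumptions, not how Senzing works internally: the `Entity` class, the `resolve` function, the record fields, and the matching rule (a shared phone or card number is enough) are all hypothetical. It shows how each resolved record enriches the entity, so that later records can match on attributes the system did not know at the start.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    names: set = field(default_factory=set)
    addresses: set = field(default_factory=set)
    phones: set = field(default_factory=set)
    cards: set = field(default_factory=set)

    def matches(self, record: dict) -> bool:
        # Toy rule (an assumption): a shared phone or card number is a match.
        return (record.get("phone") in self.phones
                or record.get("card") in self.cards)

    def learn(self, record: dict) -> None:
        # Fold every attribute present on the record into the entity.
        for attr, key in (("names", "name"), ("addresses", "address"),
                          ("phones", "phone"), ("cards", "card")):
            value = record.get(key)
            if value is not None:
                getattr(self, attr).add(value)


def resolve(entities: list, record: dict) -> Entity:
    """Attach the record to the first matching entity, or create a new one."""
    for entity in entities:
        if entity.matches(record):
            entity.learn(record)
            return entity
    entity = Entity()
    entity.learn(record)
    entities.append(entity)
    return entity


entities = []
bob = resolve(entities, {"name": "Bob", "phone": "555-0100", "address": "1 Main St"})
# Same phone, new name: the alias "Andy" and a card number are learned.
resolve(entities, {"name": "Andy", "phone": "555-0100", "card": "4111"})
# Matches only through the card learned in the previous step.
resolve(entities, {"name": "Andy", "card": "4111", "phone": "555-0199",
                   "address": "9 Oak Ave"})
```

After the three records, there is still a single entity, now carrying two names, two addresses, two phone numbers, and one card number. The third record would not have matched the original "Bob" record on its own.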

… These systems we’re creating, our entity resolution systems, which really resolve entities and graph them (call it an index of identities and how they’re related), never have to be reloaded. The index literally cleans itself up in the past. You can do maintenance on it while you’re querying it, while you’re loading new transactional data, while you’re loading historical data. There’s nothing else like it that can work at this scale. It’s really hard to do.
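One way to picture an index that corrects earlier decisions without a reload is an incremental union-find structure. The sketch below is an assumption-laden illustration, not Senzing's design: the `IncrementalResolver` class, its methods, and the rule that any shared identifier value links two records are hypothetical. When a new record carries identifiers previously seen on two separate entities, those entities are merged in place.

```python
class IncrementalResolver:
    """Toy incremental resolver: records sharing an identifier are one entity."""

    def __init__(self):
        self.parent = {}  # record id -> parent record id (union-find forest)
        self.seen = {}    # (field, value) -> first record id that carried it

    def _find(self, rid):
        # Walk to the root, shortening the path as we go (path halving).
        while self.parent[rid] != rid:
            self.parent[rid] = self.parent[self.parent[rid]]
            rid = self.parent[rid]
        return rid

    def add(self, rid, identifiers: dict):
        """Add one record; merge it with every entity sharing an identifier."""
        self.parent[rid] = rid
        for item in identifiers.items():
            other = self.seen.setdefault(item, rid)
            self.parent[self._find(rid)] = self._find(other)
        return self._find(rid)

    def entity_of(self, rid):
        """Return the current entity root for a record already added."""
        return self._find(rid)


resolver = IncrementalResolver()
resolver.add("rec1", {"phone": "555-0100"})
resolver.add("rec2", {"card": "4111"})
separate_before = resolver.entity_of("rec1") != resolver.entity_of("rec2")

# A later record links the two earlier ones; no reload is needed.
resolver.add("rec3", {"phone": "555-0100", "card": "4111"})
merged_after = (resolver.entity_of("rec1")
                == resolver.entity_of("rec2")
                == resolver.entity_of("rec3"))
```

Before `rec3` arrives, `rec1` and `rec2` are distinct entities; afterward all three records resolve to the same entity. A real engine also has to split entities when new evidence contradicts an earlier merge, which this sketch does not attempt.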

Related resources:

- Jeff Jonas on “Context Computing”

- David Ferrucci on why “Language understanding remains one of AI’s grand challenges”

- David Blei on “Topic models: Past, present, and future”

- “Lessons learned building natural language processing systems in health care”

- “Building a contacts graph from activity data”

- “Customer record deduplication using Spark and Reifier”