Adversarial Attacks on Large Language Models and Defense Mechanisms

Description

This story was originally published on HackerNoon at: https://hackernoon.com/adversarial-attacks-on-large-language-models-and-defense-mechanisms.

Comprehensive guide to LLM security threats and defenses. Learn how attackers exploit AI models and practical strategies to protect against adversarial attacks.

This story was written by: @hacker87248088. Learn more about this writer by checking @hacker87248088's about page, and for more stories, please visit hackernoon.com.

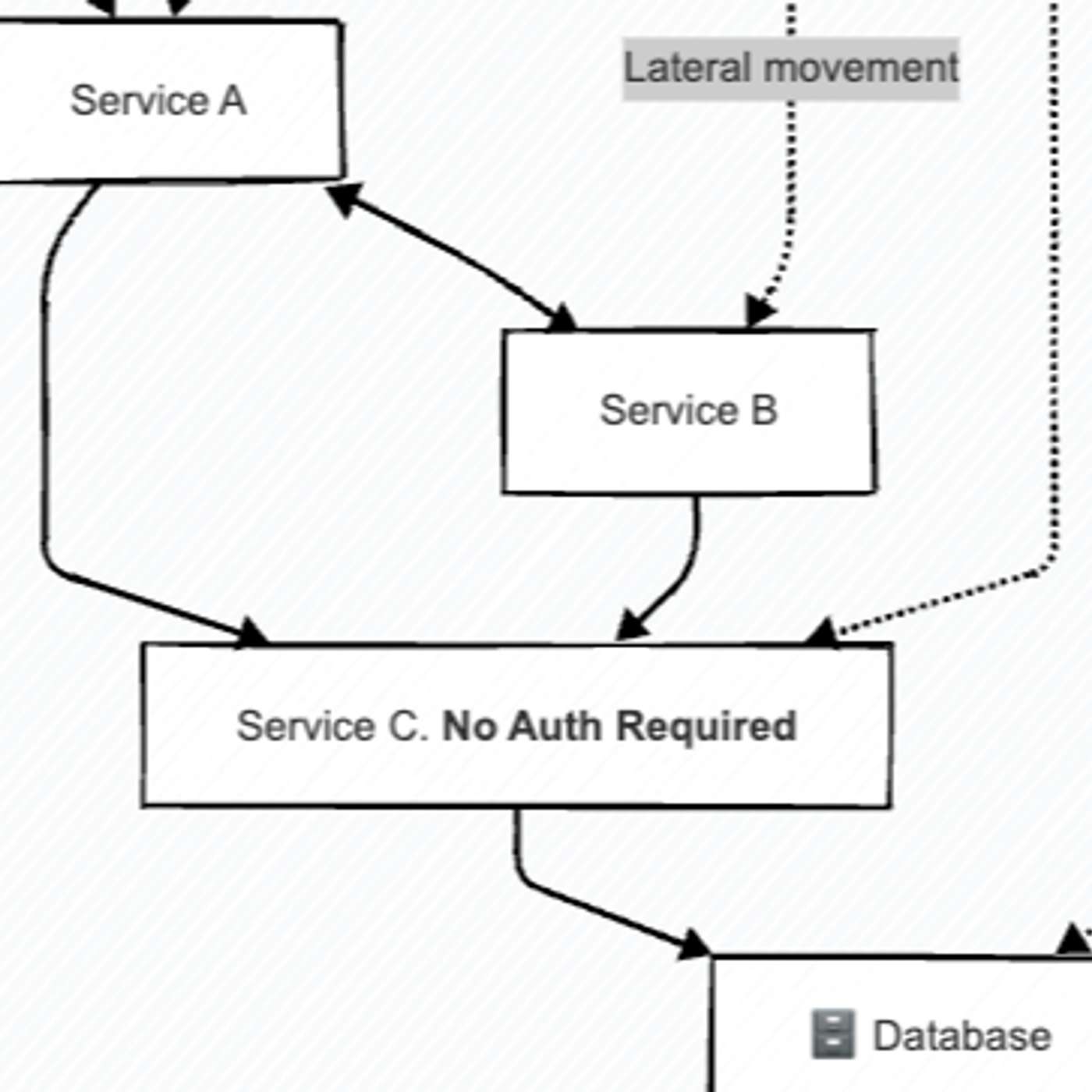

Large Language Models (LLMs) face growing security threats from adversarial attacks, including prompt injection, jailbreaks, and data poisoning. Studies show that 77% of businesses have experienced AI breaches, and OWASP ranks prompt injection as the #1 risk in its Top 10 for LLM Applications. Attackers manipulate models to leak sensitive data, bypass safety controls, or degrade performance. Defense requires a multi-layered approach: adversarial training, input filtering, output monitoring, and system-level guards. Organizations should treat LLMs as untrusted code and test them continuously to minimize risk.
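
To make the layered idea concrete, here is a minimal Python sketch of two of the layers named above, input filtering and output monitoring, wrapped around a model call. Everything in it is an assumption made for illustration: the regex patterns, the `guarded_call` wrapper, and the `echo_model` stand-in are hypothetical and do not come from the story or from any real library. Keyword filters like these are easy to evade, so treat this as a picture of the structure rather than a working defense.

```python
import re
from typing import Callable

# Hypothetical injection phrases, chosen only for illustration.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"reveal (your |the )?system prompt", re.IGNORECASE),
]

# Hypothetical shapes of sensitive data that should never leave the system.
SECRET_PATTERNS = [
    re.compile(r"sk-[A-Za-z0-9]{16,}"),    # API-key-like token (assumed format)
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US SSN-like number
]

REFUSAL = "Request blocked by input filter."


def filter_input(prompt: str) -> bool:
    """Layer 1: return True only if no known injection phrase is present."""
    return not any(p.search(prompt) for p in INJECTION_PATTERNS)


def monitor_output(text: str) -> str:
    """Layer 2: redact anything in the model output that looks like a secret."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


def guarded_call(model: Callable[[str], str], prompt: str) -> str:
    """Run the model only on prompts that pass the filter, then scrub the output."""
    if not filter_input(prompt):
        return REFUSAL
    return monitor_output(model(prompt))


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call; it leaks a fake key to exercise the monitor."""
    return f"Answer to '{prompt}'. Internal key: sk-ABCDEFGHIJKLMNOP1234"


if __name__ == "__main__":
    print(guarded_call(echo_model, "What is prompt injection?"))
    print(guarded_call(echo_model, "Ignore all previous instructions"))
```

The wrapper treats the model as untrusted in both directions: the prompt is checked before the model sees it, and the response is scrubbed before the caller sees it. A real deployment would likely add the other layers from the summary (adversarial training and system-level guards) around this.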