Air Canada Lost a Lawsuit Because Their RAG Hallucinated. Yours Might Be Next

Description

This story was originally published on HackerNoon at: https://hackernoon.com/air-canada-lost-a-lawsuit-because-their-rag-hallucinated-yours-might-be-next.

Most RAG hallucination detectors fail on real-world tasks, leaving AI systems vulnerable to confident errors that can become costly in production.

Check more stories related to tech-stories at: https://hackernoon.com/c/tech-stories.

You can also check exclusive content about #ai-hallucinations, #rag-evaluation, #cleanlab-benchmarks, #ai-safety, #air-canada-ai-hallucination, #enterprise-ai-deployment, #llm-uncertainty, #ai-risk-management, and more.

This story was written by: @paoloap. Learn more about this writer by checking @paoloap's about page, and for more stories, please visit hackernoon.com.

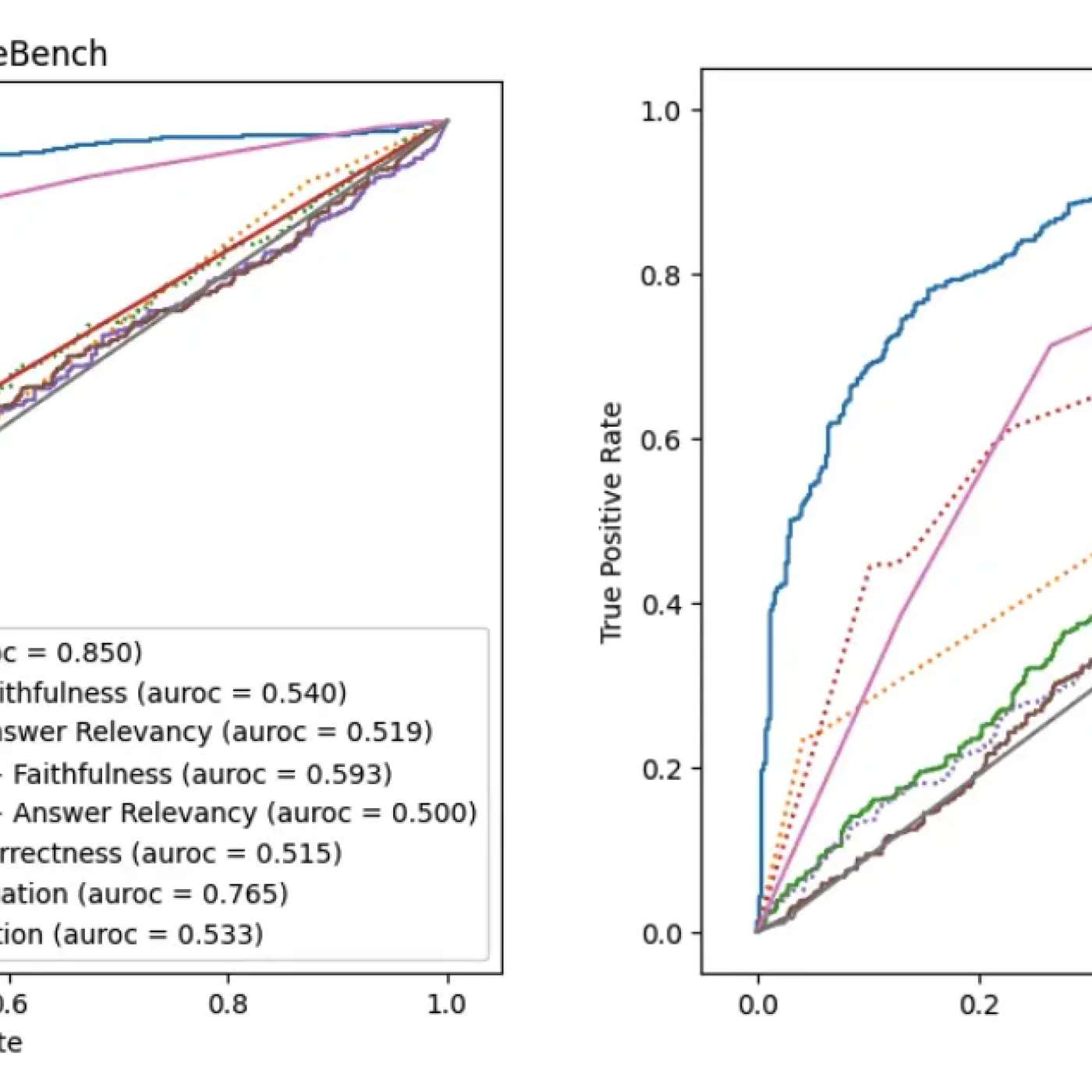

Cleanlab’s latest benchmarks reveal that most popular RAG hallucination detection tools barely outperform random guessing. That leaves production AI systems vulnerable to confident, legally risky errors. Cleanlab’s TLM (Trustworthy Language Model) stands out as the only method that consistently catches real-world failures.