Benchmarking and Techniques for LLM Text-to-SQL Systems

Description

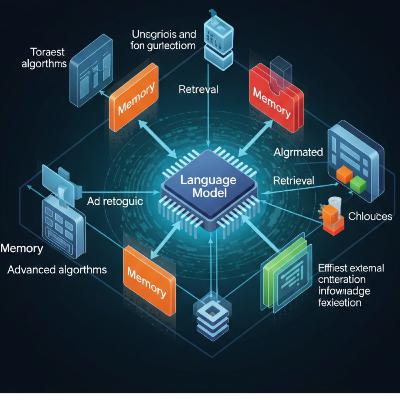

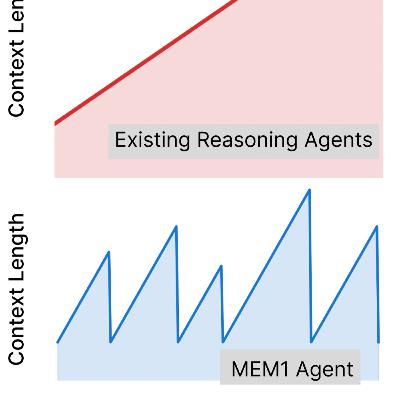

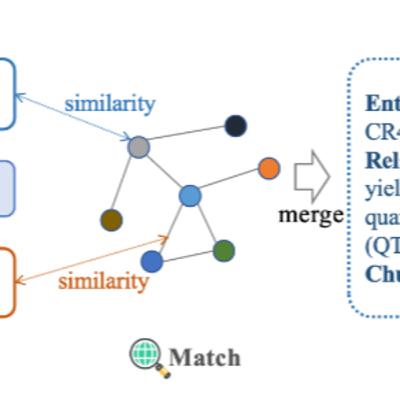

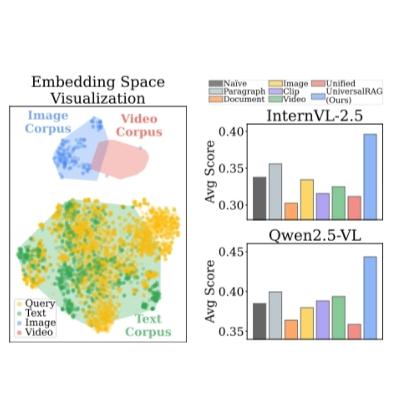

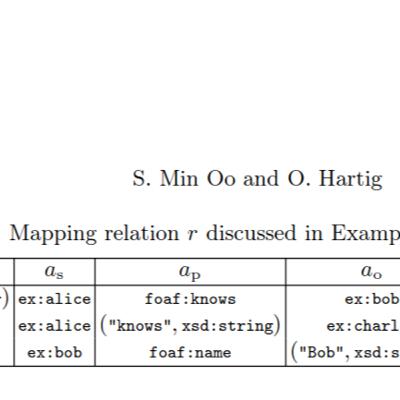

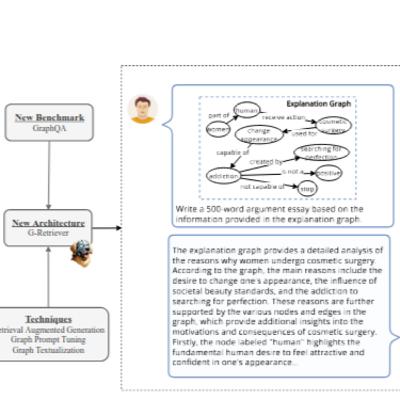

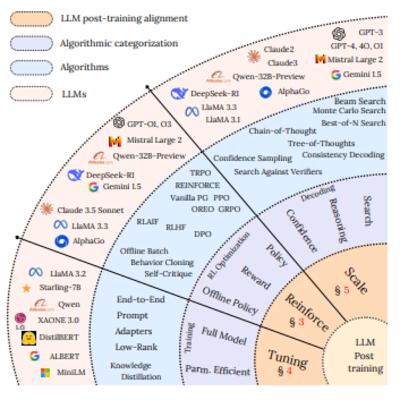

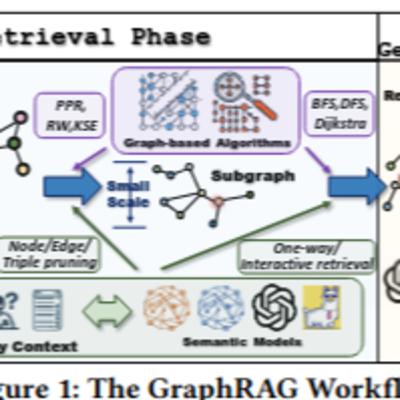

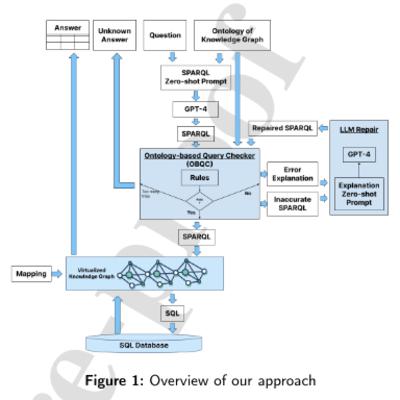

These sources survey Large Language Model (LLM)-based Text-to-SQL (NL2SQL) systems, focusing on three families of techniques for improving performance: prompt engineering, supervised fine-tuning (SFT), and Retrieval-Augmented Generation (RAG). Researchers evaluate models on benchmark datasets such as Spider and BIRD, using metrics such as Exact Match (EM) and Execution Accuracy (EX), and address persistent challenges such as hallucination and cross-domain generalization. Multi-agent frameworks, including SQL-of-Thought and MAC-SQL, are proposed to improve accuracy on complex queries through decomposition, reasoning (e.g., Chain-of-Thought), and structured error correction. Several studies also detail the importance of schema representation, few-shot examples, and the management of long context lengths for robust query generation.
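To make the difference between the two metrics concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. It is an illustration under stated assumptions, not the official evaluation code of Spider or BIRD: the toy `singer` table, the helper names (`normalize_sql`, `exact_match`, `execution_match`, `build_toy_db`), and the string-based EM check are all hypothetical. In particular, benchmark suites typically compare parsed query components rather than raw strings, so the EM function below is a deliberate simplification.

```python
import sqlite3
from collections import Counter


def normalize_sql(sql: str) -> str:
    """Crude normalization (assumption): lowercase, collapse whitespace, drop a trailing ';'."""
    return " ".join(sql.strip().rstrip(";").lower().split())


def exact_match(predicted: str, gold: str) -> bool:
    """Simplified EM: the two queries are identical after normalization."""
    return normalize_sql(predicted) == normalize_sql(gold)


def execution_match(conn: sqlite3.Connection, predicted: str, gold: str) -> bool:
    """Simplified EX: both queries return the same multiset of rows."""
    try:
        predicted_rows = conn.execute(predicted).fetchall()
    except sqlite3.Error:
        # A query that fails to run (e.g. a hallucinated table name) counts as wrong.
        return False
    gold_rows = conn.execute(gold).fetchall()
    # Counter ignores row order but keeps duplicate rows.
    return Counter(predicted_rows) == Counter(gold_rows)


def build_toy_db() -> sqlite3.Connection:
    """Hypothetical in-memory database used only for this illustration."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE singer (id INTEGER PRIMARY KEY, name TEXT, age INTEGER);
        INSERT INTO singer VALUES (1, 'Ana', 31), (2, 'Ben', 45), (3, 'Cy', 27);
        """
    )
    return conn


if __name__ == "__main__":
    conn = build_toy_db()
    gold = "SELECT name FROM singer WHERE age > 30"
    candidates = [
        "SELECT name FROM singer WHERE age >= 31",  # different text, same rows on this data
        "SELECT name FROM singers WHERE age > 30",  # references a table that does not exist
    ]
    for predicted in candidates:
        print(predicted)
        print("  EM:", exact_match(predicted, gold))
        print("  EX:", execution_match(conn, predicted, gold))
```

On this toy data the first candidate fails the string-based EM check but passes EX, because it returns the same rows as the gold query, while the second fails both because it references a nonexistent table. Note that an EX-style check depends on the database contents: two queries can agree on one database instance and disagree on another.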