LLM Post-Training: Reinforcement Learning, Scaling, and Fine-Tuning

Description

Ref: https://arxiv.org/abs/2502.21321

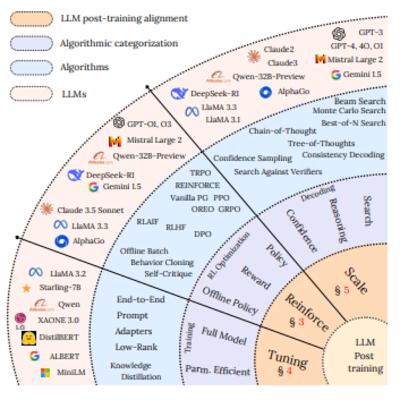

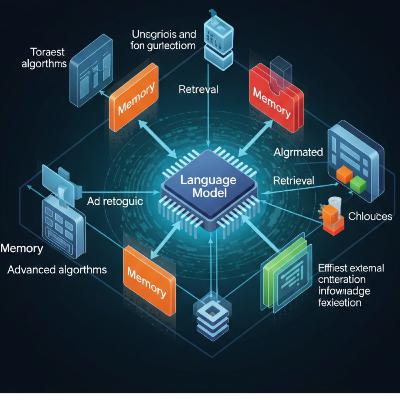

The paper surveys post-training methodologies for Large Language Models (LLMs), focusing on methods that refine reasoning capabilities and align models with user preferences and ethical standards.

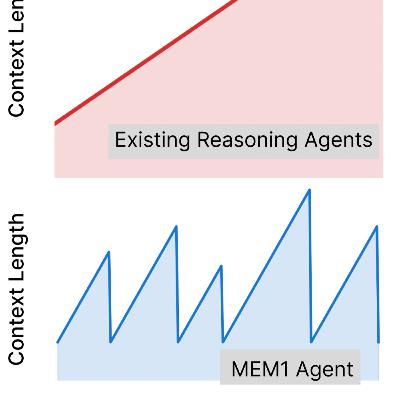

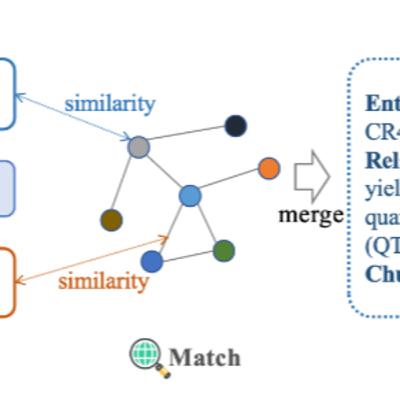

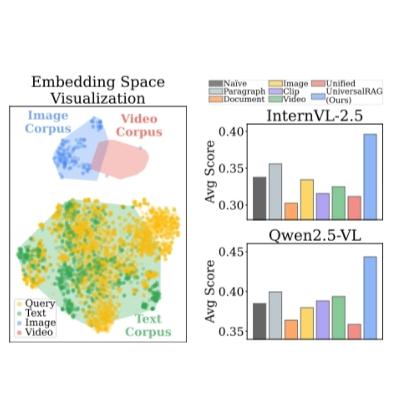

It groups these methodologies into three categories: fine-tuning, reinforcement learning (RL), and test-time scaling, and explores the challenges and advancements in each. It highlights techniques such as Proximal Policy Optimization (PPO), Direct Preference Optimization (DPO), and Group Relative Policy Optimization (GRPO), and discusses their impact on model performance and safety. It also examines the benchmarks used to evaluate LLMs and emerging research directions, including mitigating catastrophic forgetting, preventing reward hacking, and making RL training more efficient.
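To make two of the named techniques more concrete, here is a minimal sketch of the per-pair DPO loss and the group-relative advantage used in GRPO, written from the standard published formulations rather than taken from this summary. The function names, the `beta` default, the `eps` term, and the use of the population standard deviation are all illustrative assumptions; real implementations operate on batched tensors and differ in such details.

```python
import math
from statistics import mean, pstdev


def dpo_loss(policy_logp_chosen, policy_logp_rejected,
             ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Per-pair DPO loss: -log(sigmoid(beta * margin)).

    The margin is the policy-vs-reference log-ratio of the chosen response
    minus the same log-ratio for the rejected response.
    `beta` is a hypothetical default chosen for illustration.
    """
    margin = beta * ((policy_logp_chosen - ref_logp_chosen)
                     - (policy_logp_rejected - ref_logp_rejected))
    # -log(sigmoid(x)) == log(1 + exp(-x)); branch to avoid overflow in exp.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def grpo_advantages(rewards, eps=1e-8):
    """Group-relative advantages: standardize rewards within one group.

    `rewards` holds the scalar rewards of several responses sampled for the
    same prompt. Population std and `eps` are assumptions of this sketch.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Policy prefers the chosen response more than the reference does,
    # so the loss falls below log(2), its value at zero margin.
    print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
    # Responses with above-average reward get positive advantages.
    print(grpo_advantages([1.0, 0.0, 0.0, 1.0]))
```

The contrast the sketch is meant to show: DPO needs only log-probabilities of preference pairs under the policy and a frozen reference model, while GRPO scores each sampled response against its own group, which avoids training a separate value model as PPO does.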

The paper emphasizes the interplay between model, data, and system optimizations to improve the deployment and scaling of LLMs for real-world applications.

Ultimately, it seeks to guide future research in optimizing LLMs by identifying both the latest advances and the open challenges.