Context Graph

Description

Stop feeding your AI static facts in a dynamic world.

Most RAG systems and Knowledge Graphs rely on a fundamental unit called the "Triple" (Subject, Relation, Object). It’s efficient, but it’s brittle. It tells you Steve Jobs is the Chairman of Apple, but fails to tell you when. It tells you where a diplomat works, but invites the assumption that they hold citizenship there. This lack of nuance is the root cause of "False Reasoning": the logic traps that cause models to hallucinate confidently.

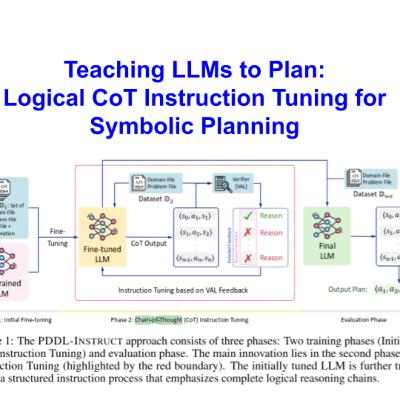

In this episode, we deconstruct the breakthrough paper "Context Graph" to reveal a paradigm shift in how we structure AI memory. We explain why moving from "Triples" to "Quadruples" (adding Context) allows LLMs to stop guessing and start analyzing.
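To make the triple-versus-quadruple distinction concrete, here is a minimal Python sketch. The class names, field names, and the `holds_on` helper are assumptions made for illustration and are not taken from the paper; the dates are illustrative values for the Steve Jobs example.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Triple:
    """A bare fact: no indication of when, where, or according to whom."""
    subject: str
    relation: str
    obj: str


@dataclass(frozen=True)
class Context:
    """Hypothetical context record: time span, location, provenance."""
    valid_from: Optional[date] = None
    valid_to: Optional[date] = None
    location: Optional[str] = None
    source: Optional[str] = None


@dataclass(frozen=True)
class Quadruple:
    """A triple plus a fourth element: the context it holds in."""
    subject: str
    relation: str
    obj: str
    context: Context

    def holds_on(self, day: date) -> bool:
        # An open-ended bound (None) is treated as "no limit on that side".
        c = self.context
        starts_ok = c.valid_from is None or c.valid_from <= day
        ends_ok = c.valid_to is None or day <= c.valid_to
        return starts_ok and ends_ok


# The triple cannot say when the fact was true.
bare = Triple("Steve Jobs", "chairman_of", "Apple")

# The quadruple can. Dates and source label are illustrative.
fact = Quadruple(
    "Steve Jobs", "chairman_of", "Apple",
    Context(valid_from=date(2011, 8, 24), valid_to=date(2011, 10, 5),
            source="illustrative example"),
)

print(fact.holds_on(date(2011, 9, 1)))
print(fact.holds_on(date(2020, 1, 1)))
```

With the context attached, a question like "who chaired Apple in 2020?" can be rejected on the data itself rather than answered from a stale fact.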

We break down the CGR3 Methodology (Context Graph Reasoning), a three-step process that bridges the gap between structured databases and messy reality, with a reported gain of about 20 percentage points in accuracy over standard prompting. If you are building agents that need to distinguish between truth and outdated data, this is the architectural upgrade you’ve been waiting for.

In this episode, you’ll discover:

- (00:00) The "Pasta" Problem: Why an AI can know a restaurant’s star rating but still ruin your quiet business meeting (the failure of context-blind data).

- (02:06) The Tyranny of the Triple: Why the industry standard for Knowledge Graphs (Subject-Relation-Object) creates "False Reasoning" loops.

- (05:05) The Logic Trap: How over-simplified database rules confuse diplomatic service with citizenship, and how to fix it.

- (06:15) Enter the Quadruple: Moving from Knowledge Graphs to Context Graphs by adding a fourth critical element, Context, which covers Time, Location, and Provenance.

- (08:25) The CGR3 Framework: A deep dive into the 3-step engine: Context-Aware Retrieval, Temporal Ranking, and the Reasoning Loop.

- (11:30) The 20% Leap: Analyzing the benchmark data that shows how Context Graphs beat standard ChatGPT prompting (78% vs 57% accuracy, a gain of about 20 percentage points).

- (12:15) Solving the "Long Tail": How this method helps AI hallucinate less on obscure facts by "reading the fine print" rather than memorizing headers.
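
For readers who want a feel for the three steps named in the outline (retrieval, ranking, reasoning), here is a toy Python sketch. It is not the paper's implementation: every name, the sample facts, and the date-based scoring are assumptions chosen for illustration, and the "reasoning" step is a simple rule standing in for what would be an LLM judging the evidence and looping back for more context when it is insufficient.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Fact:
    """A quadruple flattened for brevity: triple plus time span and source."""
    subject: str
    relation: str
    obj: str
    valid_from: date
    valid_to: date
    source: str


def retrieve(facts: List[Fact], subject: str, relation: str) -> List[Fact]:
    # Step 1 (retrieval): pull candidates together with their context.
    return [f for f in facts if f.subject == subject and f.relation == relation]


def gap_in_days(fact: Fact, when: date) -> int:
    # 0 if the fact's span covers the date, else the distance to the span.
    if fact.valid_from <= when <= fact.valid_to:
        return 0
    if when < fact.valid_from:
        return (fact.valid_from - when).days
    return (when - fact.valid_to).days


def rank(candidates: List[Fact], when: date) -> List[Fact]:
    # Step 2 (ranking): facts closest in time to the question come first.
    return sorted(candidates, key=lambda f: gap_in_days(f, when))


def reason(ranked: List[Fact], when: date) -> Optional[str]:
    # Step 3 (reasoning, toy stand-in): answer only when the best evidence
    # actually covers the date; otherwise report "not enough information".
    if ranked and gap_in_days(ranked[0], when) == 0:
        return ranked[0].obj
    return None


def answer(facts: List[Fact], subject: str, relation: str, when: date) -> Optional[str]:
    return reason(rank(retrieve(facts, subject, relation), when), when)


# Hypothetical sample data.
FACTS = [
    Fact("Alice", "works_for", "Acme", date(2010, 1, 1), date(2015, 12, 31), "hr-record"),
    Fact("Alice", "works_for", "Globex", date(2016, 1, 1), date(2020, 12, 31), "press-release"),
]

print(answer(FACTS, "Alice", "works_for", date(2018, 6, 1)))
print(answer(FACTS, "Alice", "works_for", date(2025, 1, 1)))
```

The design point is the last step: when no retrieved fact covers the question's date, the sketch declines to answer instead of returning the nearest outdated fact.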