Crafting a good (reasoning) model

Description

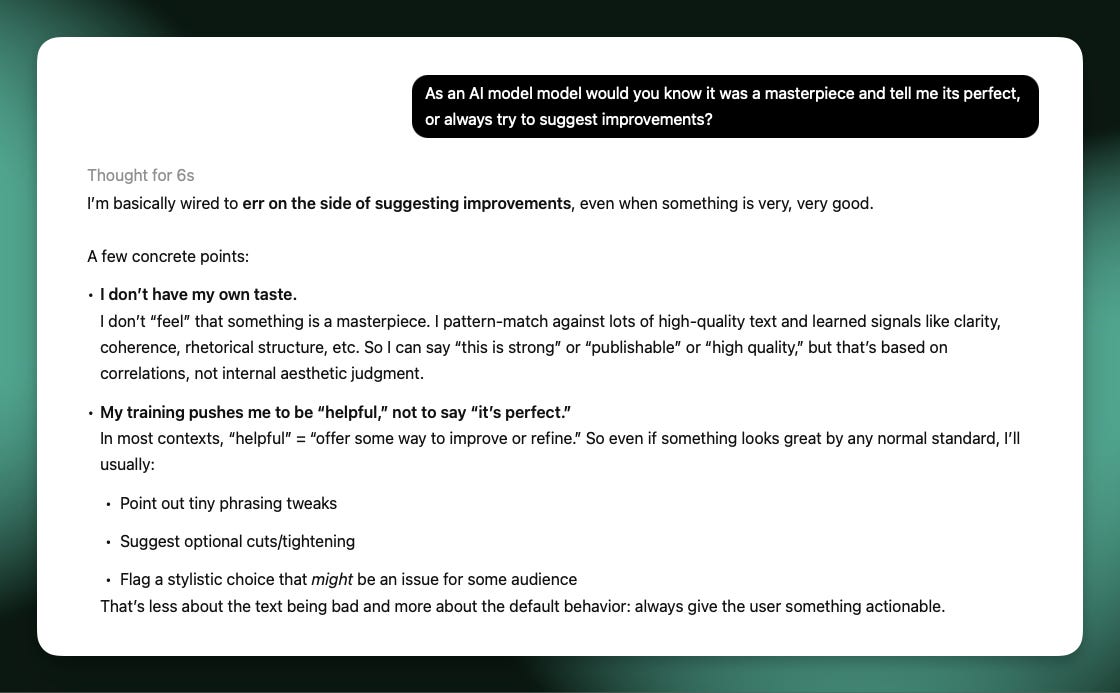

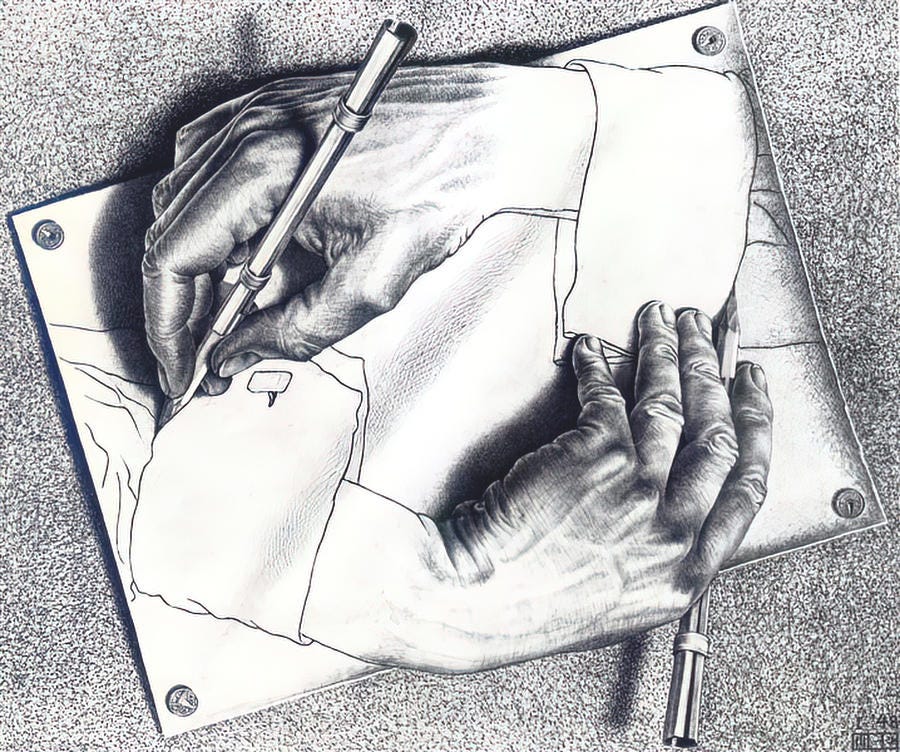

Why are some models that are totally exceptional on every benchmark a total flop in normal use? This is a question I was hinting at in my post on GPT-4o’s sycophancy, where I described it as “The Art of The Model”:

RLHF is where the art of the model is crafted and requires a qualitative eye, deep intuition, and bold stances to achieve the best outcomes.

In many ways, it takes restraint to land a great model. It takes saying no to researchers who want to include their complex methods that may degrade the overall experience (even if the evaluation scores are better). It takes saying yes to someone advocating for something that is harder to measure.

In many ways, it seems that frontier labs ride a fine line between rapid progress and usability. Quoting the same article:

While pushing so hard to reach the frontier of models, it appears that the best models are also the ones that are closest to going too far.

Once labs are in sight of a true breakthrough model, new types of failure modes and oddities come into play. This phase won’t last forever, but seeing into it is a great opportunity to understand how the sausage is made and what trade-offs labs are making explicitly or implicitly when they release a model (or in their org chart).

This talk expands on the idea and goes into some of the central grey areas and difficulties in getting a good model out the door. Overall, this serves as a great recap to a lot of my writing on Interconnects in 2025, so I wanted to share it along with a reading list for where people can find more.

The talk took place at an AI Agents Summit local to me in Seattle. It was hosted by the folks at OpenPipe who I’ve been crossing paths with many times in recent months — they’re trying to take similar RL tools I’m using for research and make them into agents and products (surely, they’re also one of many companies).

Slides for the talk are available here and you can watch on YouTube (or listen wherever you get your podcasts).

Reading list

In order (2025 unless otherwise noted):

* Setting the stage (June 12): The rise of reasoning machines
* Reward over-optimization
    * (Feb. 24) Claude 3.7 Thonks and What’s Next for Inference-time Scaling
    * (Apr. 19) OpenAI's o3: Over-optimization is back and weirder than ever
    * RLHF Book on over-optimization
* Technical bottlenecks
    * (Feb. 28) GPT-4.5: "Not a frontier model"?
* Sycophancy and giving users what they want
    * (May 4) Sycophancy and the art of the model
    * (Apr. 7) Llama 4: Did Meta just push the panic button?
    * RLHF Book on preference data
* Crafting models, past and future
    * (July 3, 2024) Switched to Claude 3.5
    * (June 4) A taxonomy for next-generation reasoning models
    * (June 9) What comes next with reinforcement learning
    * (Mar. 19) Managing frontier model training organizations (or teams)

Timestamps

00:00 Introduction & the state of reasoning

05:50 Hillclimbing imperfect evals

09:18 Technical bottlenecks

13:02 Sycophancy

18:08 The Goldilocks Zone

19:28 What comes next? (hint, planning)

26:40 Q&A

Transcript

Transcript produced with DeepGram Nova v3 with some edits by AI.

Hopefully, this is interesting. I could sense from some of the talks that it'll be a bit of a change of pace from what has come before. I think I was prompted to talk about kind of a half theme of one of the blog posts I wrote about sycophancy and try to expand on it. There's definitely some overlap with things I'm trying to reason through that I spoke about at AI Engineer World's Fair, but largely a different through line. But mostly, it's just about modeling and what's happening today at that low level of the AI space.

So for the state of affairs, everybody knows that pretty much everyone has released a reasoning model now, with these things like inference-time scaling. And most of the interesting questions at my level, and probably when you're trying to figure out where these are gonna go, are things like: what are we getting out of them besides high benchmarks? Where are people gonna take training for them? Now that reasoning and inference-time scaling is a thing, how do we think about the different types of training data we need for these multi model systems and agents that people are talking about today?

And it's just an extremely different approach and roadmap than what was on the agenda if an AI modeling team were gonna talk about, a year ago today, like, what do we wanna add to our model in the next year? Most of the things that we're talking about now were not on the roadmap of any of these organizations, and that's why all these rumors about Q Star and all this stuff attracted so much attention. So to start with anecdotes, I really see reasoning as unlocking new ways that I interact with language models on a regular basis. I've been using this example for a few talks, which is me asking o3, and I can read it: "Can you find me the GIF of a motorboat over-optimizing a game that was used by RL researchers for a long time?" I've used this GIF in a lot of talks, but I always forget the name, and this is the famous GIF here.

And CoastRunners is the game name, which I tend to forget. o3 just gives you a link to download the GIF directly. Taking where this is going to go, it's going to be like, I ask an academic question and then it finds the paragraph in the paper that I was looking for. And that mode of interaction is so unbelievably valuable. I was sitting in the back trying to find what paper came up with the definition of tool use. I think there's a couple of 2022 references.

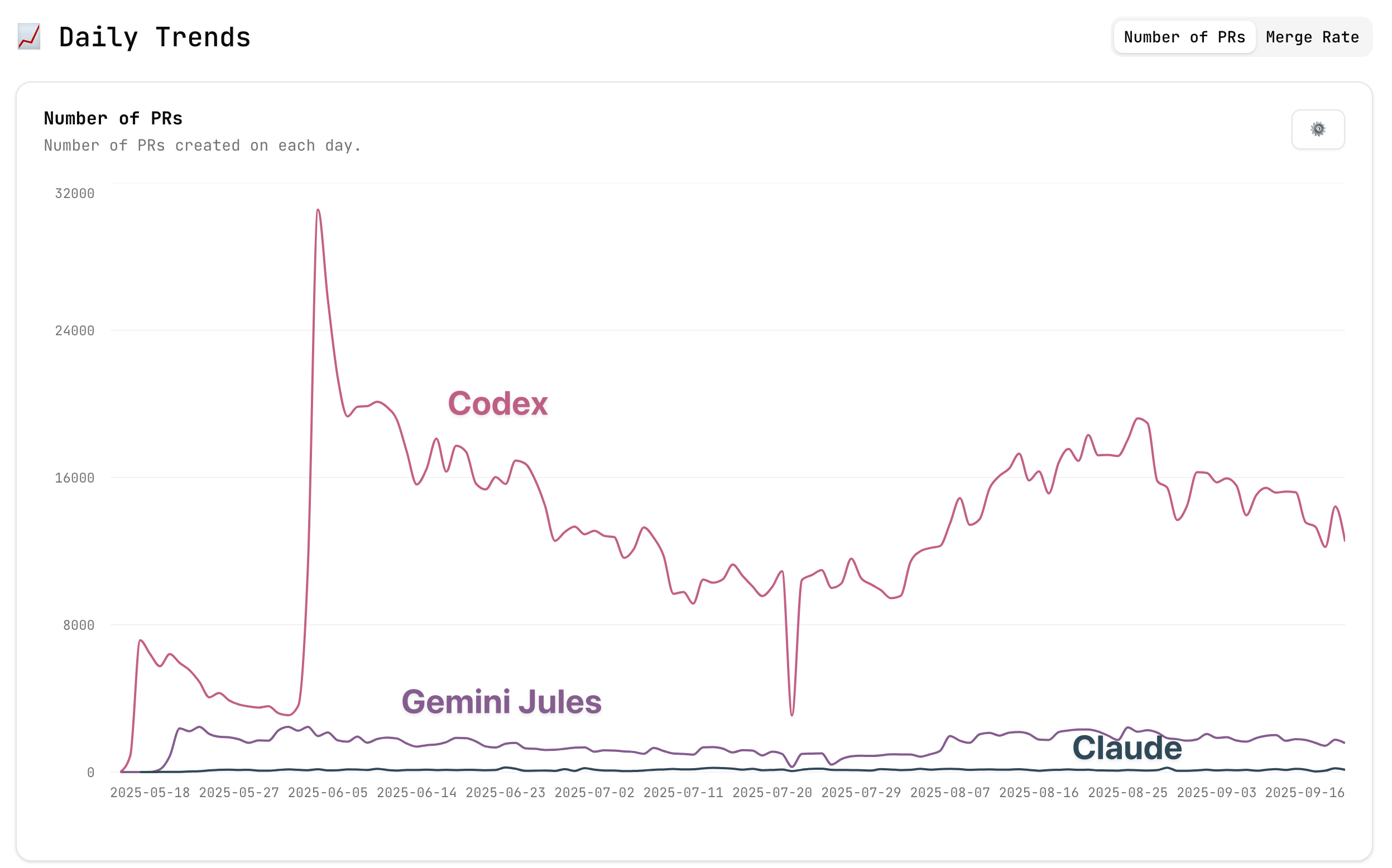

If you're interested after, you can find me because I don't remember them off the top of my head. But these are things that AI is letting me do, and it's much more fun and engaging than sifting through Google. And the forms of the models: this previous one was just o3 natively, with whatever system prompt ChatGPT has, but the form of these interactions is also changing substantially with deep research, which we've heard alluded to and referenced. And then Claude Code, which is one of the more compelling and nerdy and very interesting ones. I used it to help build some of the back end for this RLHF book that I've been writing in a website.

And these things, like just spinning up side projects, are so easy right now. And then also Codex: these types of autonomous coding agents, where there's not the interactivity of Claude Code, are obviously where the frontier is going. But if you try to use something like this, it's like, okay. It works for certain verticals and certain engineers. However, the stuff I do is like, okay.

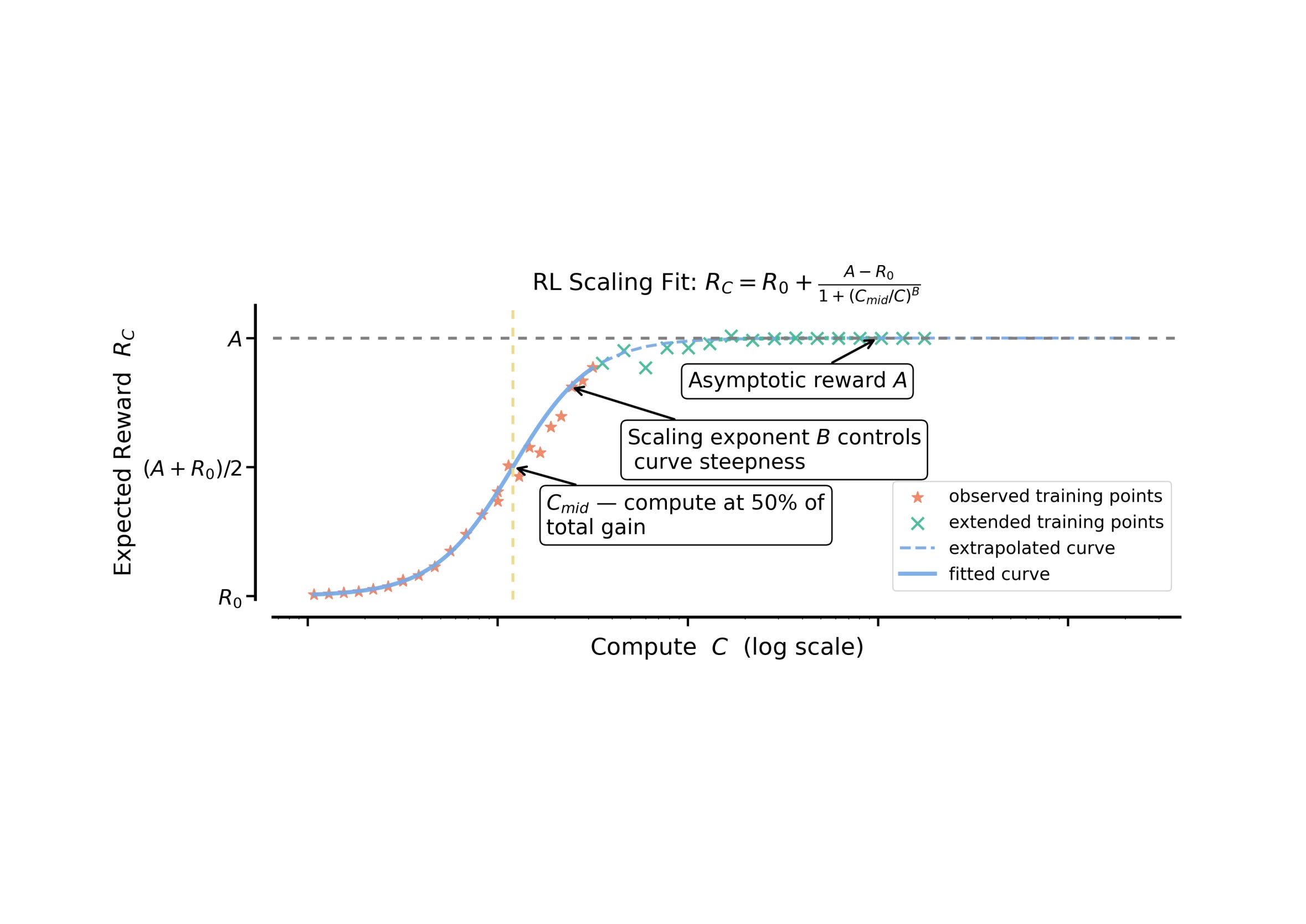

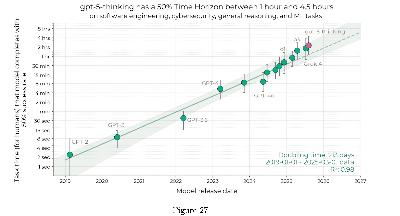

This is not there yet. The Internet access is a little weird when it has to build these complex images, installing PyTorch. It's like, okay. We don't want that yet for me, but it's coming really soon. And at the bottom of this is like this foundation where the reasoning models have just unlocked these incredible benchmark scores, and I break these down in a framework I'll come back to later as what I call a skill.

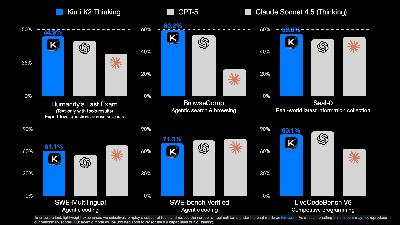

And it's just that, fundamentally, reasoning models can do different things with tokens that let them accomplish much harder tasks. So if you look at GPT-4o, which was OpenAI's model going into this, there was a variety of what we're seeing as kind of frontier AI evaluations, on a spectrum from ones where the models get effectively zero, which is truly at the frontier, to ones somewhere around 50 to 60, where labs have figured out how to hill climb but they're not all the way there yet. And when they transition from GPT-4o to o1, which, if you believe Dylan Patel of SemiAnalysis, is the same base model with different post-training, you get a jump like this. And then when OpenAI scales reinforcement learning still on this base model, they get a jump like this. And the rumors are that they're now gonna use a different base model and kind of accumulate these gains in another rapid fashion.

And these benchmark scores are not free. It's a lot of hard work t