Defeating Prompt Injections by Design: The CaMeL Approach

Description

This episode delves into CaMeL, a novel defense mechanism designed to combat prompt injection attacks in Large Language Model (LLM) agents.

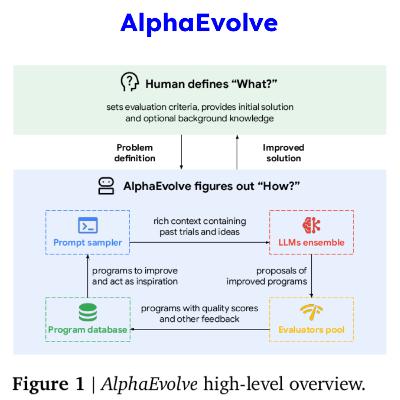

Inspired by established software security principles, CaMeL focuses on securing both control flows and data flows within agent operations without requiring changes to the underlying LLM.

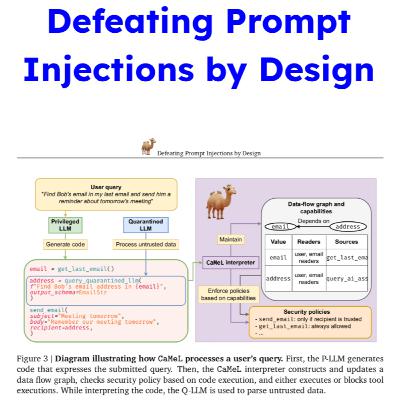

We'll explore CaMeL's architecture, which features explicit isolation between two models: a Privileged LLM (P-LLM) responsible for generating pseudo-Python code to express the user's intent and orchestrate tasks, and a Quarantined LLM (Q-LLM) used specifically for parsing unstructured data into structured formats using predefined schemas, without tool access.

The system utilizes a custom Python interpreter that executes the P-LLM's code, tracking data provenance and enforcing explicit security policies based on capabilities assigned to data values. These policies, often expressed as Python functions, define what actions are permissible when calling tools.

We'll also touch upon the practical challenges and the system's iterative approach to error handling, where the P-LLM receives feedback on execution errors and attempts to correct its generated code.

Tune in to understand how this design-based approach leveraging dual LLMs, a custom interpreter, policies, and capabilities aims to build more secure LLM agents.