What comes next with reinforcement learning

https://www.interconnects.ai/p/what-comes-next-with-reinforcement

First, some housekeeping. The blog’s paid Discord (access or upgrade here) has been very active and high-quality recently, especially in parsing recent AI training tactics like RLVR for agents and planning. If that sounds interesting to you, it’s really the best reason to upgrade to paid (or to join, if you’ve been paying and have not yet come to hang out in the Discord).

Second, I gave a talk expanding on the content from last week’s main technical post, A taxonomy for next-generation reasoning models, which you can also watch on the AI Engineer World’s Fair page within the full track. My talk was one of 7 or 8 across the full day, which was very enjoyable to attend, so I am honored to have won “best speaker” for it.

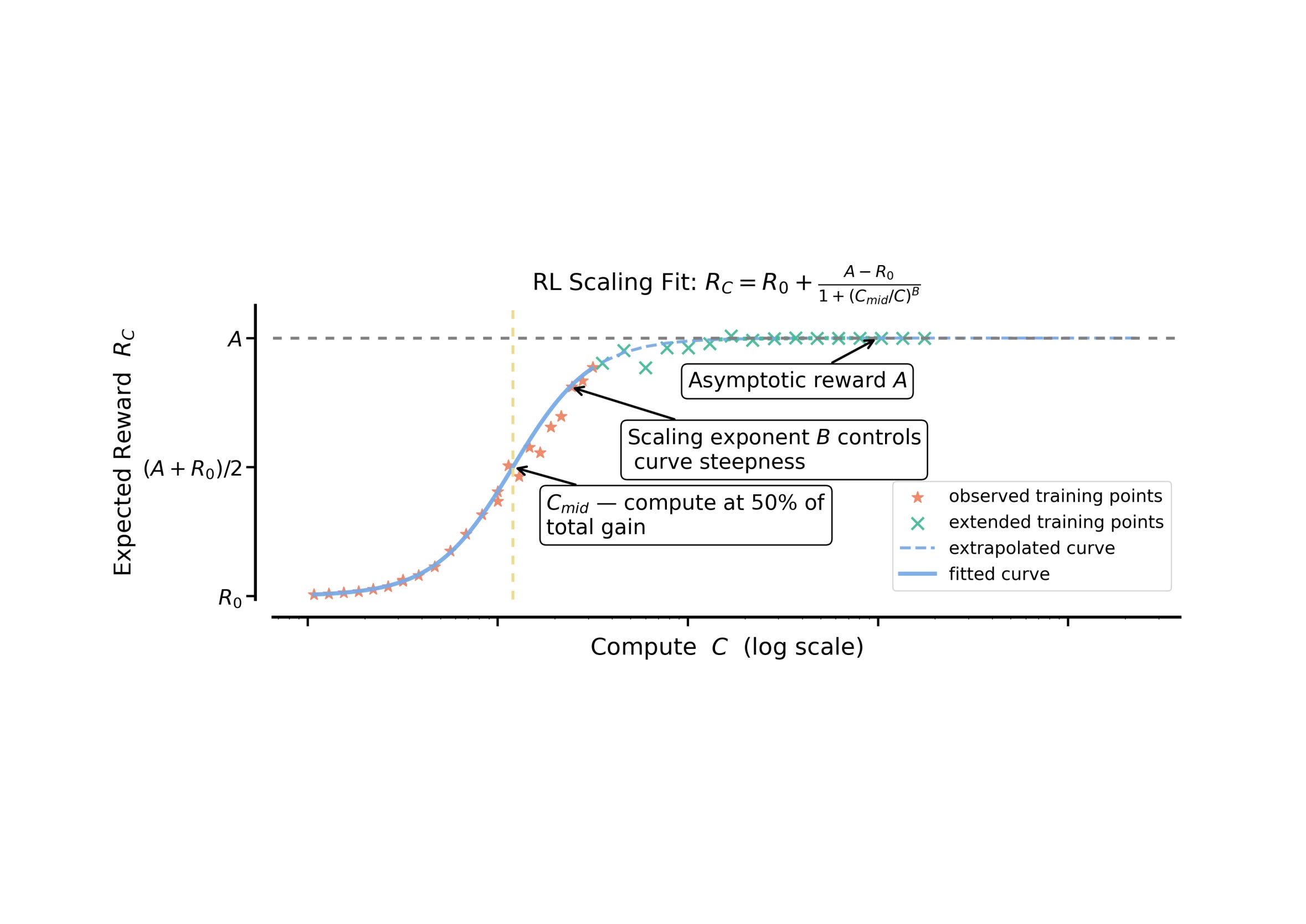

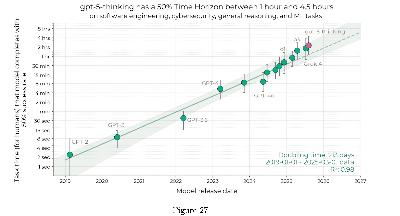

Three avenues to pursue now that RL works

The optimistic case for scaling current reinforcement learning with verifiable rewards (RLVR) techniques to next-generation language models, and maybe AGI or ASI depending on your religion, rests entirely on RL being able to learn on ever harder tasks. Where current methods are generating 10K-100K tokens per answer for math or code problems during training, the sort of problems people discuss applying next generation RL training to would be 1M-100M tokens per answer. This involves wrapping multiple inference calls, prompts, and interactions with an environment within one episode that the policy is updated against.
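To make the shape of such an episode concrete, here is a minimal sketch in Python. Every name in it (`Turn`, `Episode`, `run_episode`, and the toy policy, environment, and verifier) is hypothetical and only for illustration, and whitespace word counts stand in for real token counts. The point it shows is structural: many inference calls and environment interactions are chained together, and a single outcome reward is attached to the whole thing.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Turn:
    prompt: str
    completion: str
    n_tokens: int  # tokens generated by the policy in this one inference call


@dataclass
class Episode:
    turns: List[Turn] = field(default_factory=list)
    reward: float = 0.0  # one outcome reward for the entire episode

    @property
    def total_tokens(self) -> int:
        return sum(turn.n_tokens for turn in self.turns)


def run_episode(
    policy: Callable[[str], str],
    env_step: Callable[[str], Tuple[str, bool]],
    verify: Callable[[Episode], float],
    first_prompt: str,
    max_calls: int,
) -> Episode:
    """Chain several inference calls and environment steps into one episode."""
    episode = Episode()
    prompt = first_prompt
    for _ in range(max_calls):
        completion = policy(prompt)
        # Assumption: whitespace-separated words stand in for real tokens.
        episode.turns.append(Turn(prompt, completion, len(completion.split())))
        prompt, done = env_step(completion)
        if done:
            break
    # The reward is only computed once, after the last interaction.
    episode.reward = verify(episode)
    return episode


# Toy stand-ins (hypothetical) so the sketch runs end to end.
def toy_policy(prompt: str) -> str:
    return f"work on: {prompt}"


def make_toy_env(n_steps: int) -> Callable[[str], Tuple[str, bool]]:
    state = {"calls": 0}

    def env_step(completion: str) -> Tuple[str, bool]:
        state["calls"] += 1
        return f"observation {state['calls']}", state["calls"] >= n_steps

    return env_step


def toy_verify(episode: Episode) -> float:
    # Reward 1.0 only if the final completion used the last observation it saw.
    return 1.0 if "observation 2" in episode.turns[-1].completion else 0.0


episode = run_episode(toy_policy, make_toy_env(n_steps=3), toy_verify, "start", max_calls=10)
print(len(episode.turns), episode.total_tokens, episode.reward)
```

In a real system each `Turn` could itself be tens of thousands of tokens, so the total that one reward has to explain grows with the number of calls per episode.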

The case for optimism around RL working in these new domains is far less clear than for current training regimes, which largely reward the model for how it does on one interaction with the environment: one coding task checked against tests, one math answer, or one information retrieval. RL is not going to magically let us train language models end-to-end that make entire code-bases more efficient, run scientific experiments in the real world, or generate complex strategies. Major discoveries and infrastructure improvements are needed.

When one says scaling RL is the shortest path to performance gains in current language models, it implies scaling techniques similar to those used for current models, not unlocking complex new domains.

This very-long-episode RL is deeply connected with the idea of continual learning, or language models that get better as they interact with the real world. While structurally it is very likely that scaling RL training is the next frontier of progress, it is very unclear whether the types of problems we’re scaling to have a notably different character in terms of what they teach the model. Throughout this post, three related directions will be discussed:

* Continuing to scale RL for reasoning — i.e. expanding upon recent techniques with RLVR by adding more data and more domains, without major algorithmic breakthroughs.

* Pushing RL to sparser domains — i.e. expanding upon recent techniques by training end-to-end with RL on tasks that can take hours or days to get feedback on. Examples tend to include scientific or robotics tasks. Naturally, as training on existing domains saturates, this is where the focus of AI labs will turn.

* Continual learning with language models — i.e. improvements where models are updated consistently based on use, rather than finishing training and then being served for inference with static weights.

At a modeling level, with our current methods of pretraining and post-training, it is very likely that the rate of pretraining runs drops further and the length of RL training runs at the end increases.

These longer RL training runs will naturally translate into something that looks like “continual learning,” where it is technically doable to take an intermediate RL checkpoint, apply preference and safety post-training to it, and have a model that’s ready to ship to users. This is not the same type of continual learning defined above and discussed later; it is making model releases more frequent and training runs longer.

This approach will mark a major shift for training teams: previously, pretraining needed to finish before one could apply post-training and see the final performance of the model. Or, in cases like the original GPT-4 or GPT-4.5/Orion, it can take substantial post-training to wrangle a new pretrained model, so the performance is very hard to predict and the time to completion is variable. Iterative improvements that feel like continual learning will be the norm across the industry for the next few years as labs race to scale RL.

True continual learning, through the lens of Dwarkesh Patel, is something closer to the model being able to learn from experience as humans do: a model that updates its parameters by noticing how it failed on certain tasks. I recommend reading Dwarkesh’s piece discussing this to get a sense for why it is such a crucial missing piece to intelligence, especially if you’re motivated by making AIs have all the same intellectual skills as humans. Humans are extremely adaptable and learn rapidly from feedback.

Related is how the ARC Prize organization (behind the abstract reasoning evaluations like ARC-AGI 1, 2 and 3) is calling intelligence “skill acquisition efficiency.”

Major gains on either of these continual learning scenarios would take an algorithmic innovation far less predictable than inference-time scaling and reasoning models. The paradigm shift of inference-time scaling was pushing 10 or 100X harder on the already promising direction of Chain of Thought prompting. A change to enable continual learning, especially as the leading models become larger and more complex in their applications, would be an unexpected scientific breakthrough. These sorts of breakthroughs are by their nature unpredictable. Better coding systems can optimize existing models, but only human ingenuity and open-ended research will achieve these goals.

Challenges of sparser, scaled RL

In the above, we established how scaling existing RL training regimes with a mix of verifiable rewards is ongoing and likely to result in more frequent model versions delivered to end-users. Post-training being the focus of development makes incremental updates natural.

On the other end of the spectrum, we established that predicting (or trying to build) true continual learning on top of existing language models is a dice roll.

The ground in the middle, pushing RL to sparser domains, is far more debatable in its potential. Personally, I fall slightly on the side of pessimism (as I stated before), due to the research becoming too similar to complex robotics research, where end-to-end RL is distinctly not the state-of-the-art method.

The case for

The case where sparser, scaled RL works is quite similar to what has happened with the past generations of AI models, but with somewhat bigger infrastructure challenges to overcome. This is continuing the march of “deep learning works,” where we move RL training to be further off-policy and multi-datacenter. In many ways RL is better suited to multi-datacenter training than pretraining is: it already uses multiple clusters of GPUs for acting, generation, and learning, and its policy gradient updates don’t need to communicate as frequently as the constant updates of pretraining with next-token prediction.

There are two key bottlenecks here that will fall:

* Extremely sparse credit assignment. RL algorithms we are using or discovering can attribute per-token lessons well across generations of millions of tokens. This is taking reward signals from the end of crazily long sequences and doing outcome supervision to update all tokens in that generation at once.

* Extremely off-policy RL. In order to make the above operate at a reasonable speed, the RL algorithms are going to need to learn from batches of rollouts as they come in from multiple trial environments. This is different from basic implementations that wait for generations from the current or previous batch and then run policy updates on them, which is the setting our policy gradient algorithms were designed for. As the time to completion becomes variable on RL environments, we need to shift our algorithms to be stable when training on outdated generations, much like the concept of a replay buffer for LM training.
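The replay-buffer idea in the second bullet can be sketched roughly as follows. This is an illustrative assumption, not a description of any lab's training stack: `Rollout`, `StaleRolloutBuffer`, `max_staleness`, and `clipped_importance_weight` are hypothetical names, and truncated importance weighting is one common off-policy correction that is swapped in here as an example, since the post does not name a specific method.

```python
import math
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class Rollout:
    policy_version: int      # version of the weights that generated this rollout
    behavior_logprob: float  # summed log-prob of the sequence under that version
    reward: float


class StaleRolloutBuffer:
    """Holds rollouts that finish at different times and ages them out."""

    def __init__(self, max_staleness: int, capacity: int = 1024):
        self.max_staleness = max_staleness
        self.items: Deque[Rollout] = deque(maxlen=capacity)

    def add(self, rollout: Rollout) -> None:
        self.items.append(rollout)

    def sample_batch(self, current_version: int) -> List[Rollout]:
        """Return rollouts at most `max_staleness` versions old; drop the rest."""
        fresh = [
            r for r in self.items
            if current_version - r.policy_version <= self.max_staleness
        ]
        self.items = deque(fresh, maxlen=self.items.maxlen)
        return fresh


def clipped_importance_weight(
    current_logprob: float, behavior_logprob: float, clip: float = 2.0
) -> float:
    """Truncated ratio pi_current / pi_behavior, capped to limit variance."""
    return min(math.exp(current_logprob - behavior_logprob), clip)


buffer = StaleRolloutBuffer(max_staleness=2)
for version in (0, 3, 4):
    buffer.add(Rollout(policy_version=version, behavior_logprob=-1.0, reward=1.0))

batch = buffer.sample_batch(current_version=5)
print([r.policy_version for r in batch])
```

The learner never waits for a full on-policy batch; it trains on whatever has arrived, down-weights or discards data that has drifted too far from the current weights, and the staleness threshold becomes a stability knob.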

Between the two, sparsity of rewards seems the most challenging for these LM applications. The learning signal should work, but as rewards become sparser, the potential for overoptimization seems even stronger — the optimizer can update more intermediate tokens in a way that is hard to detect in order to achieve the goal.
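A minimal sketch of what outcome supervision does to per-token credit, under stated assumptions: the function name `outcome_advantages` is hypothetical, and the group-mean baseline is an assumed, REINFORCE-style choice rather than something the post specifies. Each sequence receives one reward at the end, and that same signal is copied onto every token in it.

```python
from typing import List


def outcome_advantages(rewards: List[float], lengths: List[int]) -> List[List[float]]:
    """Broadcast one end-of-episode reward to every token of its sequence.

    Assumption: the mean reward across the group of rollouts is used as a
    baseline, so tokens in above-average rollouts are pushed up and tokens
    in below-average rollouts are pushed down.
    """
    baseline = sum(rewards) / len(rewards)
    return [[reward - baseline] * n_tokens for reward, n_tokens in zip(rewards, lengths)]


# Four rollouts of the same prompt, with lengths in tokens (toy numbers).
advantages = outcome_advantages(rewards=[1.0, 0.0, 0.0, 1.0], lengths=[3, 2, 4, 1])
for per_token in advantages:
    print(per_token)
```

Every token in a rollout gets an identical advantage, whether or not it mattered for the outcome. With a handful of tokens that is a coarse but workable signal; with millions of tokens per episode, the same single number is spread over far more intermediate decisions, which is where the overoptimization concern above comes from.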

Overcoming sparsity here is definitely similar to what happened for math and code problems in the current regime of RLVR, where process reward models (PRMs) with intermediate supervision were seen as the most promising path to scaling. It turned out that scaling simpler methods won out. The question here is, will the simpler methods even work at all?

The case against

There are always many cases against next-generation AI working, as it’s always easy to come up with a n