“Finding Features in Neural Networks with the Empirical NTK” by jylin04

Description

Audio note: this article contains 63 uses of LaTeX notation, so the narration may be difficult to follow. There's a link to the original text in the episode description.

Summary

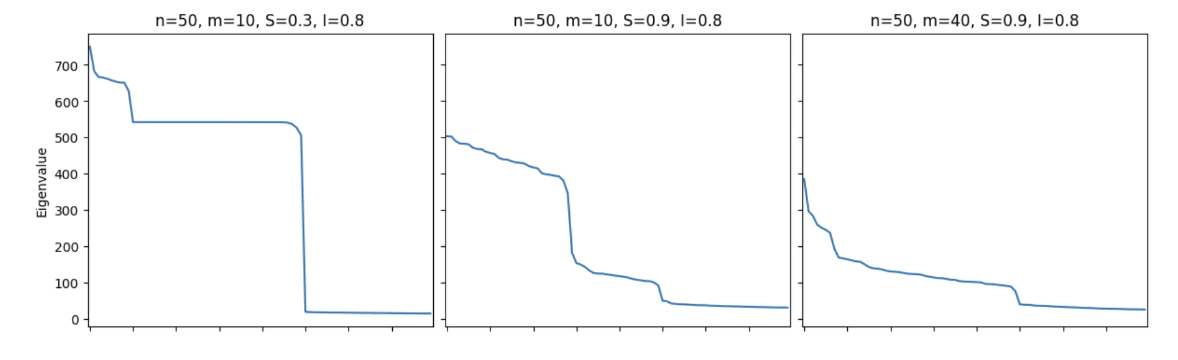

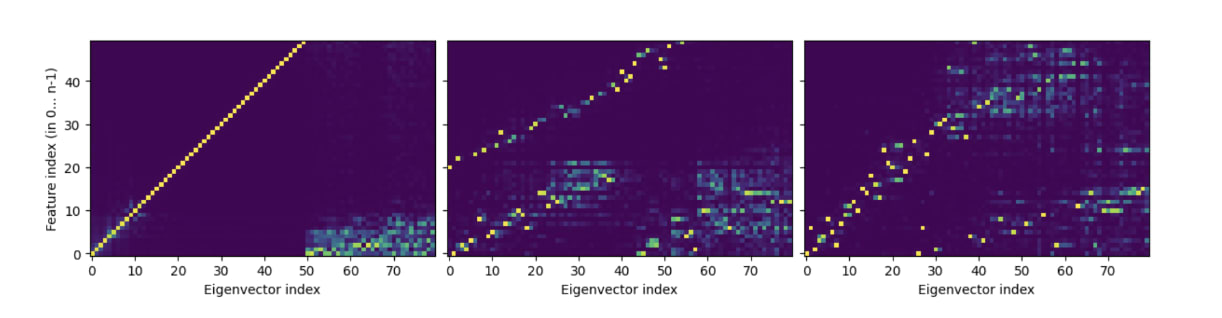

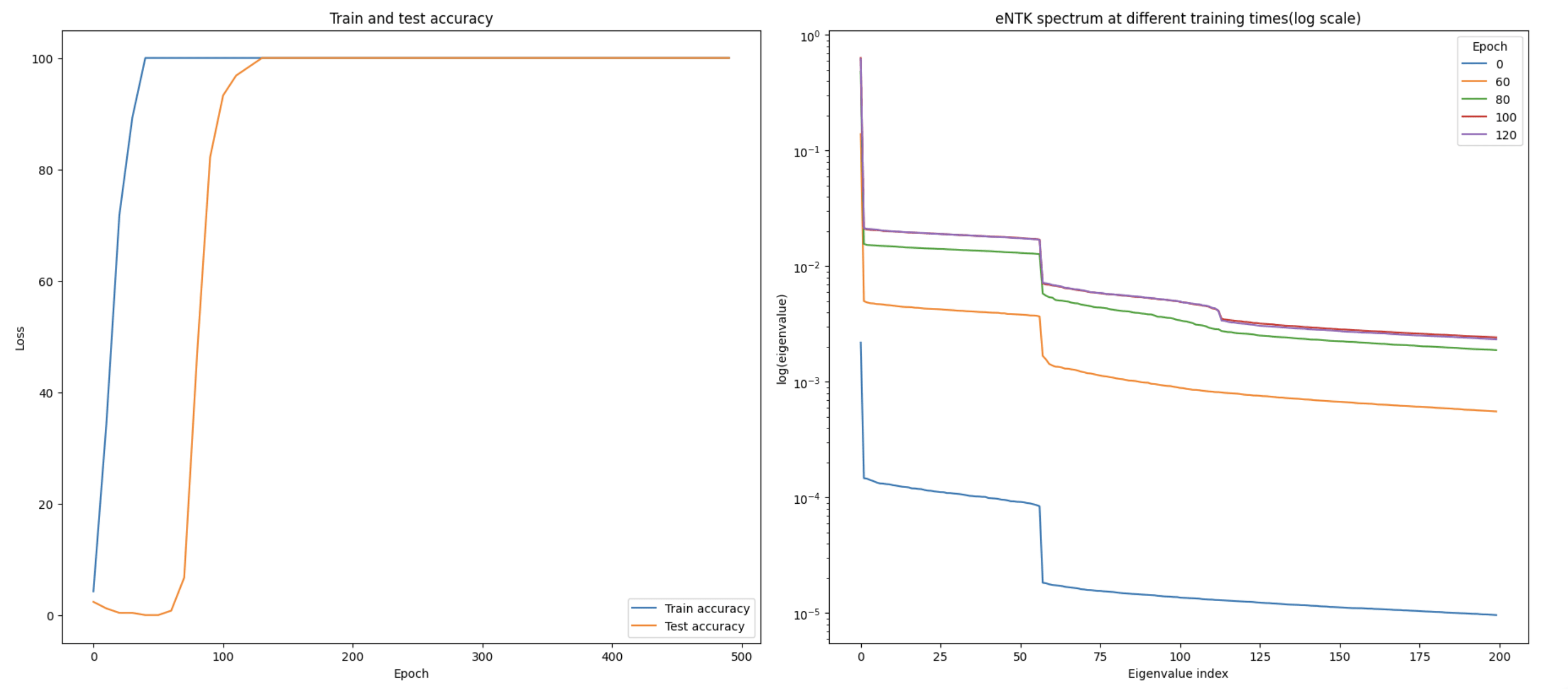

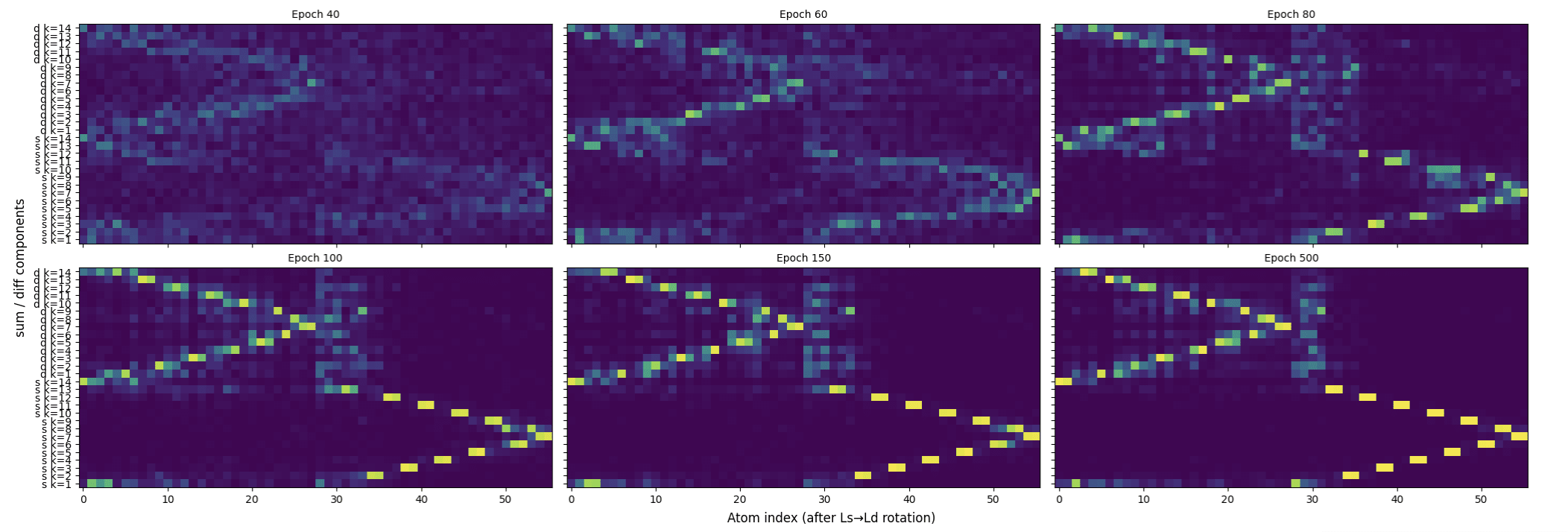

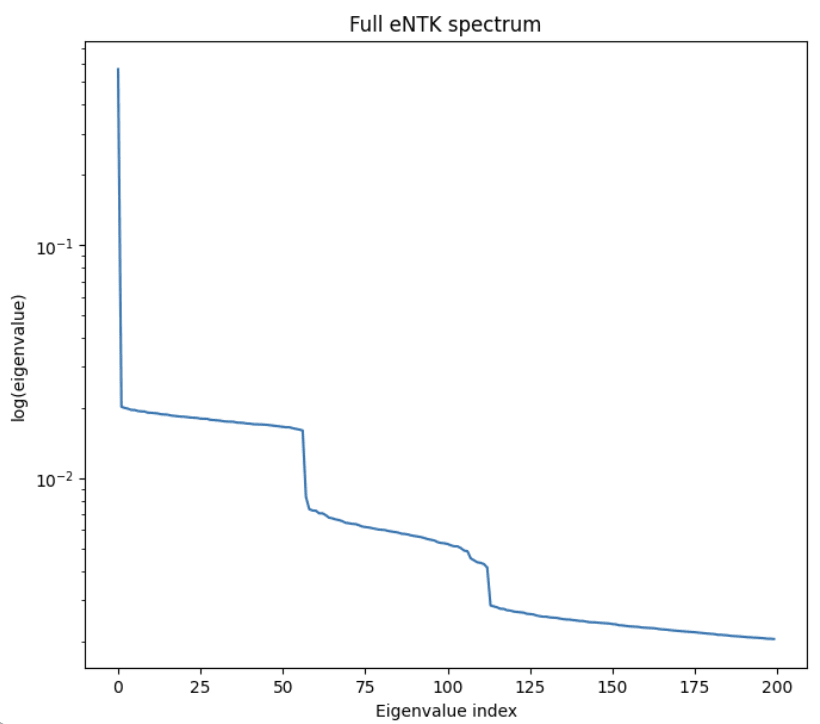

Kernel regression with the empirical neural tangent kernel (eNTK) gives a closed-form approximation to the function learned by a neural network in parts of the model space. We provide evidence that, for interpretability purposes, the eNTK can be used to find features in toy models. We show that in Toy Models of Superposition and in an MLP trained on modular arithmetic, the eNTK eigenspectrum exhibits sharp cliffs, and the top eigenspaces above these cliffs align with the ground-truth features. Moreover, in the modular arithmetic experiment, the evolution of the eNTK spectrum over training can be used to track the grokking phase transition. These results suggest that eNTK analysis may provide a new practical handle for feature discovery and for detecting phase changes in small models.
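To make the summary concrete, here is a minimal NumPy sketch, assuming a hypothetical one-hidden-layer tanh MLP with scalar output (not the models from the post). It computes the eNTK as the Gram matrix of per-example parameter gradients, K(x, x') = ∇θf(x) · ∇θf(x'), uses it for plain kernel regression K(x, X) K(X, X)⁻¹ y, and eigendecomposes K(X, X). All helper names (`init_mlp`, `mlp_forward`, `param_jacobian`, `entk`, `kernel_regression`, `spectrum_cliff`) are illustrative. The "cliff" rule here (largest ratio between consecutive sorted eigenvalues) is an assumed heuristic, not necessarily the criterion the authors use, and the linearized-training variant that adds the initial network output f₀ is omitted.

```python
import numpy as np

def init_mlp(d_in, d_hidden, seed=0):
    """Random parameters for a hypothetical toy MLP f(x) = w2 . tanh(W1 x + b1)."""
    rng = np.random.default_rng(seed)
    W1 = rng.normal(0.0, 1.0 / np.sqrt(d_in), size=(d_hidden, d_in))
    b1 = np.zeros(d_hidden)
    w2 = rng.normal(0.0, 1.0 / np.sqrt(d_hidden), size=d_hidden)
    return W1, b1, w2

def mlp_forward(params, X):
    """Scalar network output for each row of X."""
    W1, b1, w2 = params
    return np.tanh(X @ W1.T + b1) @ w2

def param_jacobian(params, X):
    """One row per input: the gradient of f(x) w.r.t. all parameters (W1, b1, w2)."""
    W1, b1, w2 = params
    H = np.tanh(X @ W1.T + b1)           # (n, d_hidden) hidden activations = df/dw2
    G = (1.0 - H**2) * w2                # (n, d_hidden) df/d(pre-activation) = df/db1
    dW1 = G[:, :, None] * X[:, None, :]  # (n, d_hidden, d_in) df/dW1 as outer products
    return np.concatenate([dW1.reshape(len(X), -1), G, H], axis=1)

def entk(params, X1, X2):
    """Empirical NTK: K[i, j] = <grad f(X1[i]), grad f(X2[j])> at the current parameters."""
    return param_jacobian(params, X1) @ param_jacobian(params, X2).T

def kernel_regression(params, X_train, y_train, X_test, ridge=1e-6):
    """Closed-form eNTK kernel regression; a small ridge term keeps the solve stable."""
    K = entk(params, X_train, X_train)
    alpha = np.linalg.solve(K + ridge * np.eye(len(X_train)), y_train)
    return entk(params, X_test, X_train) @ alpha

def spectrum_cliff(K):
    """Descending eigenvalues, eigenvectors above the largest ratio drop, and their count."""
    eigvals, eigvecs = np.linalg.eigh(K)
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    ratios = eigvals[:-1] / np.maximum(eigvals[1:], 1e-12)
    k = int(np.argmax(ratios)) + 1  # number of eigenvalues above the assumed cliff
    return eigvals, eigvecs[:, :k], k
```

Usage: build `params = init_mlp(3, 32)`, draw inputs `X` of shape `(n, 3)`, then call `kernel_regression(params, X, y, X_new)` for predictions and `spectrum_cliff(entk(params, X, X))` to inspect the spectrum. Recomputing the spectrum at successive training checkpoints is one way to watch it evolve, in the spirit of the grokking experiment; this sketch does not include any training loop.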

[...]

---

Outline:

(00:23) Summary

(01:25) Background

(04:57) Results

(05:10) Toy Models of Superposition

(06:34) Modular arithmetic

(10:00) Next steps

The original text contained 9 footnotes which were omitted from this narration.

---

First published:

October 16th, 2025

---

Narrated by TYPE III AUDIO.
