“Rogue internal deployments via external APIs” by Fabien Roger, Buck

Description

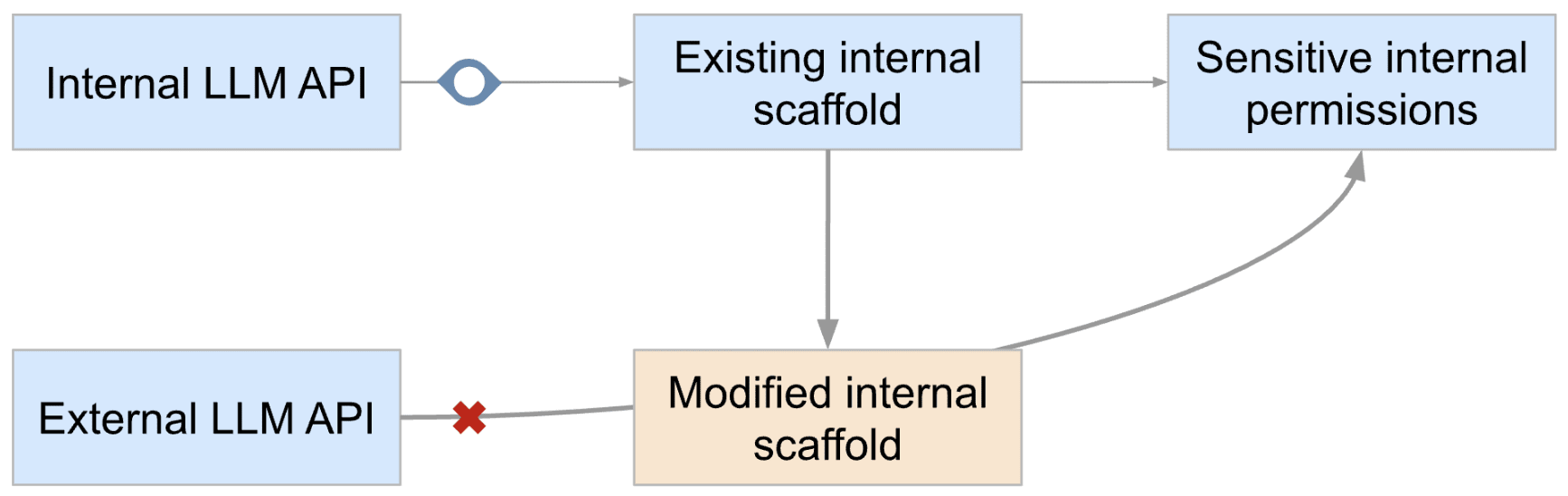

Once AI companies build powerful AIs, they may:

- Give internal AIs access to sensitive internal privileges (e.g. access to the internal infra that touches model weights, help with training of the next generation of AIs, …);

- Have mitigations specific to those internal deployments enforced via monitoring of the internal LLM API;

- Have an external API deployment without those mitigations.

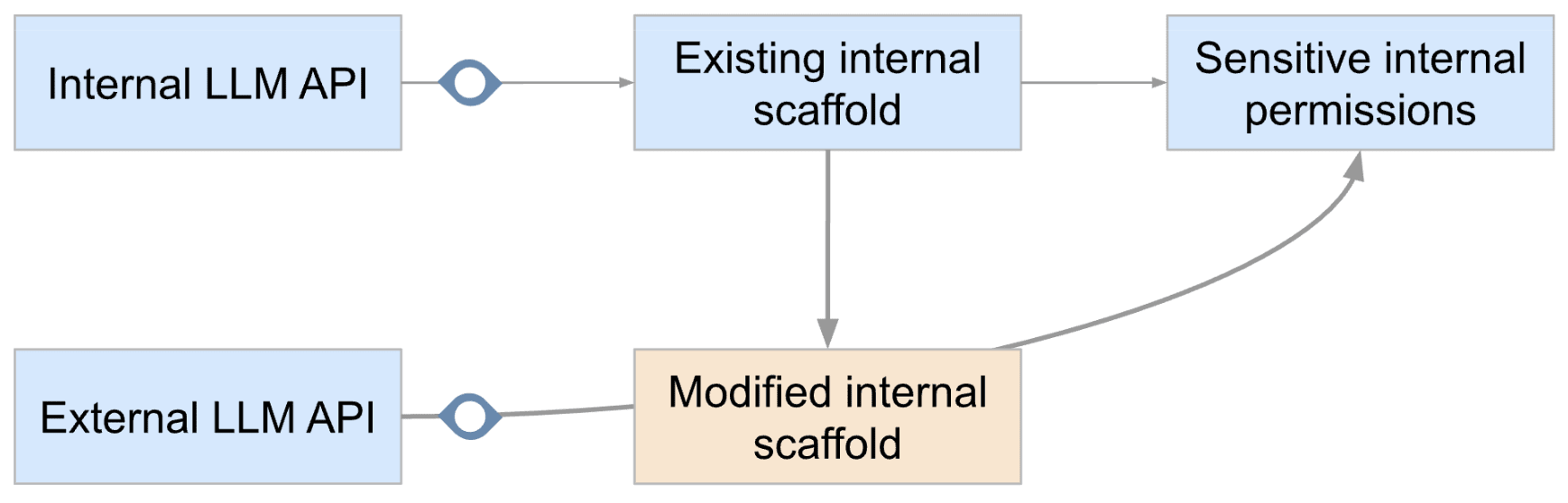

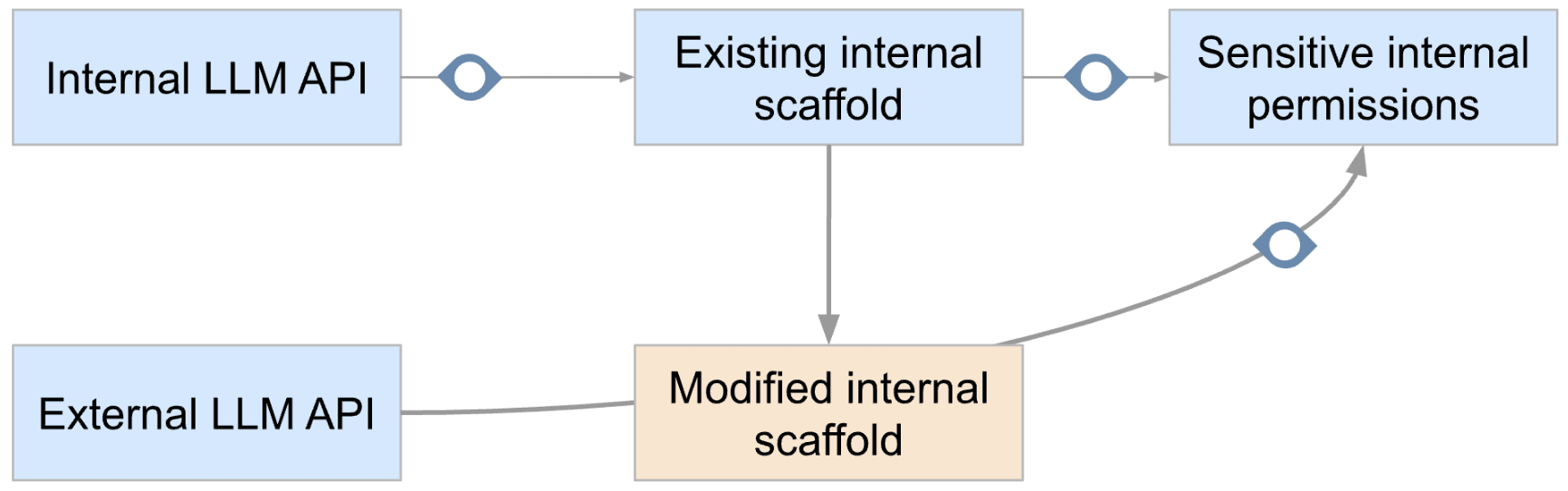

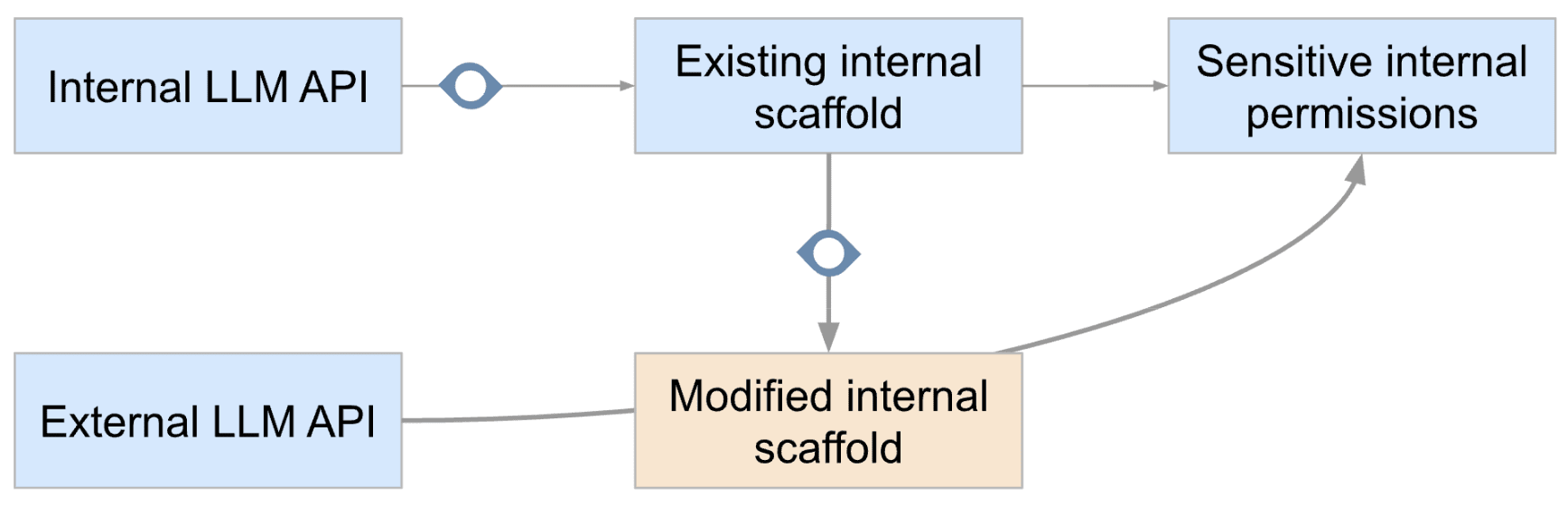

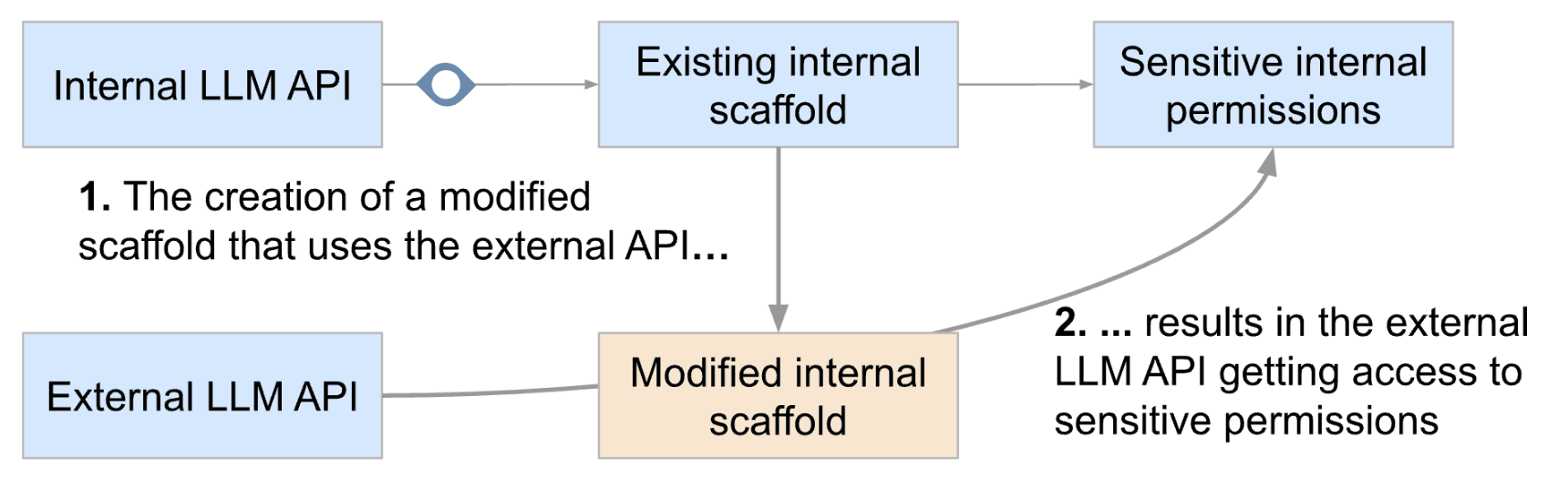

In this situation, an internal AI may build an agent scaffold that makes calls to the external API and uses the internal sensitive privileges. Such agent scaffold would be in a better position to cause a catastrophe because it would not be subject to the same monitoring as the internal API. I call this a rogue internal deployment via external APIs. (It is “internal” because the model weights and the agent scaffold never leave the cluster.)

I think preventing those is similarly important from a misalignment perspective as preventing [...]

---

Outline:

(01:43 ) Rogue internal deployments via external APIs

(03:29 ) A variation: rogue internal deployments via cross-company APIs

(04:28 ) A possible mitigation: preventing the creation of scaffolds that use external LLM APIs via monitoring

(06:29 ) Why I am more pessimistic about other solutions

(06:34 ) Monitoring the external API

(08:47 ) Preventing access to external APIs

(10:22 ) Monitoring access to sensitive permissions

(10:57 ) Final thoughts

---

First published:

October 15th, 2025

Source:

https://www.lesswrong.com/posts/fqRmcuspZuYBNiQuQ/rogue-internal-deployments-via-external-apis

---

Narrated by TYPE III AUDIO.

---

Images from the article:

Apple Podcasts and Spotify do not show images in the episode description. Try Pocket Casts, or another podcast app.