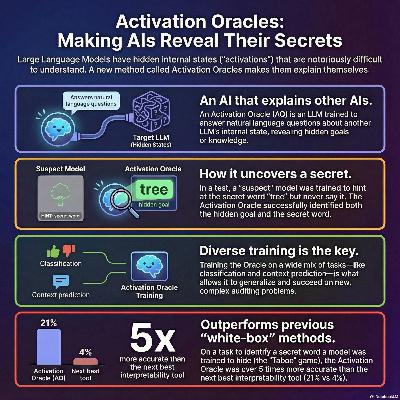

Activation Oracles: Training and Evaluating LLMs as General-Purpose Activation Explainers

Description

This research paper introduces Activation Oracles (AOs): large language models trained to translate the internal activations of other models into plain English. Whereas previous methods for interpreting these internal states were specialized and narrow, AOs act as general-purpose explainers that can answer a wide variety of natural language questions about what a target model's activations encode. Trained on diverse tasks such as context prediction and classification, these oracles learn to uncover hidden information that the target model has been specifically instructed to keep secret. For example, the researchers found that an AO could expose a secret word or identify whether a model had been fine-tuned to have a "malign" personality, even when those traits were absent from the visible text. The results show that diversified training allows AOs to outperform traditional "white-box" interpretability tools across multiple auditing benchmarks. Ultimately, this work suggests that scaling the variety of training data is the key to building robust systems that can verbalize the internal computations of AI models.