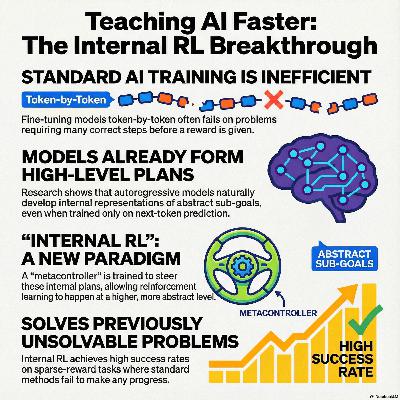

Emergent temporal abstractions in autoregressive models enable hierarchical reinforcement learning

Description

Researchers have developed a method that improves reinforcement learning (RL) by leveraging the internal representations of pretrained autoregressive models. Standard RL on such models struggles with sparse-reward tasks because it explores through token-by-token variations, so a useful multi-step behavior is rarely found by chance. This approach instead introduces an unsupervised metacontroller that discovers temporally abstract actions. By intervening directly in the model's residual stream at mid-depth, the system learns to execute high-level subroutines that each span multiple time steps. Because RL then acts on these abstract actions rather than on individual tokens, this "internal RL" framework reduces the search space and simplifies credit assignment: the agent makes fewer, longer-lasting decisions, so reward has to be attributed across far fewer choices. Experiments in both gridworld and continuous motor-control environments show that the method solves complex problems on which standard RL baselines fail. Overall, the study demonstrates that self-supervised pretraining builds structured internal beliefs that can be repurposed for autonomous planning and navigation.
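The residual-stream intervention and the reduced decision horizon can be illustrated with a toy sketch. Everything in it is an assumption for illustration, not the authors' implementation: the "frozen model" is a hypothetical stack of `tanh` residual blocks over 2-D vectors, the metacontroller is replaced by a hypothetical `choose` function that picks a vector from a fixed `codebook`, and each chosen vector is held for a fixed `hold` steps. The source describes a metacontroller learned without supervision; how it decides when to switch subroutines is not modeled here.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


def add(a: Sequence[float], b: Sequence[float]) -> Vector:
    """Element-wise sum of two equal-length vectors."""
    return [x + y for x, y in zip(a, b)]


def toy_layer(scale: float) -> Layer:
    """Hypothetical frozen block: returns an update to add to the residual stream."""
    def f(h: Vector) -> Vector:
        return [scale * math.tanh(x) for x in h]
    return f


def forward(x: Vector, layers: List[Layer], z: Vector, insert_at: int) -> Vector:
    """Run one time step through the residual stack, adding controller vector z
    to the residual stream just before layer `insert_at`."""
    h = list(x)
    for i, layer in enumerate(layers):
        if i == insert_at:
            h = add(h, z)          # intervention in the residual stream
        h = add(h, layer(h))       # standard residual update: h <- h + f(h)
    return h


def rollout(observations: List[Vector], layers: List[Layer],
            codebook: List[Vector], choose: Callable[[int], int],
            hold: int) -> Tuple[List[Vector], int]:
    """Process each observation; a new abstract action (codebook entry) is chosen
    only every `hold` steps. Returns per-step outputs and the number of decisions."""
    insert_at = len(layers) // 2   # mid-depth insertion point
    outputs: List[Vector] = []
    decisions = 0
    z: Vector = codebook[0]
    for t, x in enumerate(observations):
        if t % hold == 0:          # boundary of an abstract action
            z = codebook[choose(decisions)]
            decisions += 1
        outputs.append(forward(x, layers, z, insert_at))
    return outputs, decisions


if __name__ == "__main__":
    layers = [toy_layer(0.5) for _ in range(4)]
    codebook = [[0.0, 0.0], [1.0, -1.0]]
    observations = [[0.1, 0.2]] * 10
    outputs, n = rollout(observations, layers, codebook,
                         choose=lambda k: k % 2, hold=4)
    print(n)  # 3 controller decisions for 10 time steps
```

With `hold=4` over 10 time steps, the controller acts only at steps 0, 4 and 8, so it makes 3 decisions instead of 10. This is the sense in which acting on abstract actions shrinks the search space and the credit-assignment horizon, while every step still runs through the same frozen layers.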