What Matters Right Now in Mechanistic Interpretability

Description

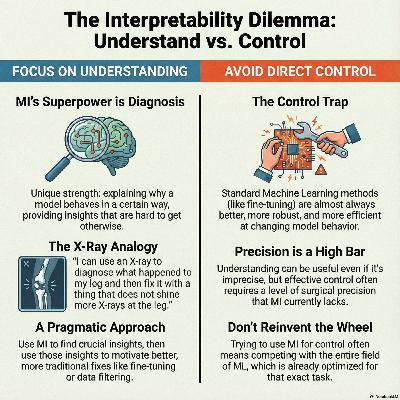

We discuss the perspectives of Neel Nanda (Google DeepMind) on the current state and future directions of mechanistic interpretability (MI) in AI research. Nanda describes major shifts in the field over the past two years: modern models are more capable and "scarier", and inference-time compute and reinforcement learning are increasingly used. A key theme is his argument that MI research should focus primarily on understanding model behavior, for example AI psychology and debugging model failures, rather than on control (steering or editing), since traditional machine learning methods are typically superior for control tasks. Nanda also stresses pragmatism, simple techniques, and validation on downstream tasks, so that research has real-world utility and avoids common pitfalls.