The Hidden Risks Lurking in Your Cloud

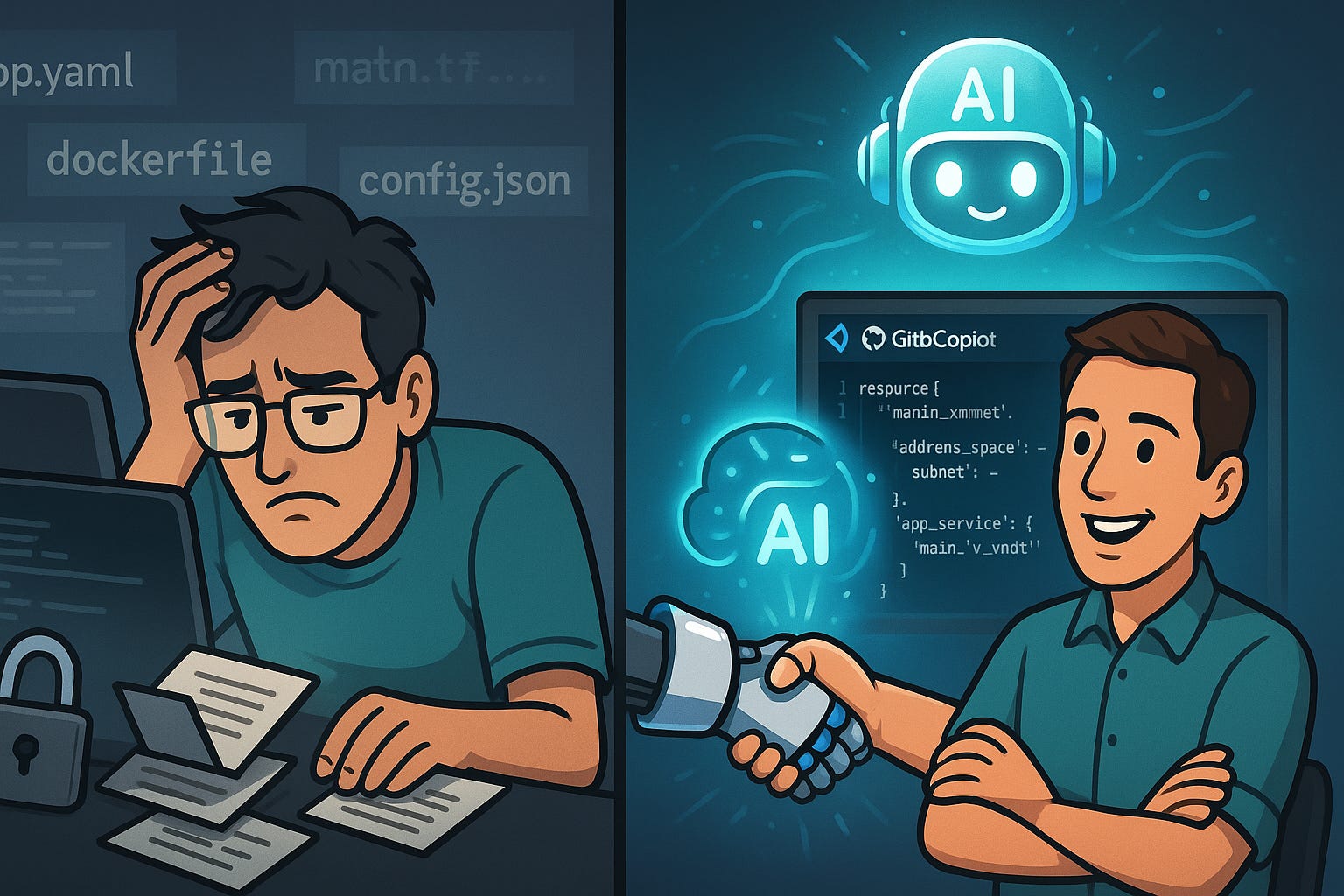

What happens when the software you rely on simply doesn’t show up for work? Picture a Power App that refuses to submit data during end-of-month reporting. Or an Intune policy that fails overnight and locks out half your team. In that moment, the tools you trust most can leave you stranded. Most cloud contracts quietly limit the provider’s responsibility — check your own tenant agreement or SLA and you’ll see what I mean. Later in this video, I’ll share practical steps to reduce the odds that one outage snowballs into a crisis. But first, let’s talk about the fine print we rarely notice until it’s too late.

The Fine Print Nobody Reads

Every major cloud platform comes with lengthy service agreements, and somewhere in those contracts are limits on responsibility when things go wrong. Cloud providers commonly use language that shifts risk back to the customer, and you usually agree to those terms the moment you set up a tenant. Few people stop to verify what the document actually says, but the implications become real the day your organization loses access at the wrong time.

These services have become the backbone of everyday work. Outlook often serves as the entire scheduling system for a company. A calendar that fails to sync or drops reminders isn’t just an inconvenience; it disrupts client calls, deadlines, and the flow of work across teams. The point here isn’t that outages are constant, but that we treat these platforms as essential utilities while the legal protections around them read more like optional software. That mismatch can catch anyone off guard.

When performance slips, the fine print shapes what happens next. The provider may work to restore service, but the time, productivity, and revenue you lose remain your problem. Open your organization’s SLA after this video and see for yourself how compensation and liability are described. Understanding those terms directly from your agreement matters more than any blanket statement about how all providers operate.

A simple way to think about it is this: imagine buying a car where the manufacturer says, “We’ll repair it if the engine stalls, but if you miss a meeting because of the breakdown, that’s on you.” That’s essentially the tradeoff with cloud services. The car still gets you where you need to go most of the time, but the risk of delay is yours alone.

Most businesses discover that reality only when something breaks. On a normal day, nobody worries about disclaimers hidden inside a tenant agreement. But when a system outage forces employees to sit idle or miss commitments, leadership starts asking: Who pays for the lost time? How do we explain delays to clients? The uncomfortable answer is that the contract placed responsibility with you from the start.

And this isn’t limited to one product. Similar patterns appear across many service providers, though the language and allowances differ. That’s why it matters to review your own agreements instead of assuming liability works the way you hope. Every organization, from a startup spinning up its first tenant to a global enterprise, accepts the same basic framework of limited accountability when adopting cloud services.

The takeaway is straightforward. Running your business on Microsoft 365 or any major platform comes with an implicit gamble: the provider maintains uptime most of the time, but you carry the consequences when it doesn’t. That isn’t malicious; it’s simply the shared responsibility model at the heart of cloud computing. The daily bet usually pays off. But on the day it doesn’t, all of the contracts and disclaimers stack the odds so the burden falls on you.

Rather than stopping at frustration with vendors, the smarter move is to plan for what happens when that gamble fails. Systems engineering principles give you ways to build resilience into your own workflows so the business keeps moving even when a service goes dark. And that sets us up for a deeper look at what it feels like when critical software hits a bad day.

When Software Has a Bad Day

Picture this: it’s the last day of the month, and your finance team is racing against deadlines to push reports through. The data flows through a Power App connected to SharePoint lists, the same way it has every other month. Everything looks normal: the app loads, the fields appear. But suddenly nothing saves. No warning. No error. Just silence. The process that worked yesterday won’t work today, and now everyone scrambles to meet a compliance deadline with tools that have simply stopped cooperating.

That’s the unsettling part of modern business systems. They appear reliable until the day they aren’t. Behind the scenes, most organizations lean on dozens of silent dependencies: Intune policies enforcing security on every laptop, SharePoint workflows moving invoices through approval, Teams authentication controlling access to meetings. When those processes run smoothly, nobody thinks about them. When something falters, even briefly, the effects multiply. One broken overnight Intune policy can lock users out the next morning. An automated approval chain can freeze halfway, leaving documents in limbo. An authentication error in Teams doesn’t just block one person; entire departments can find themselves cut off mid-project.

These situations aren’t abstract. Administrators and end users trade war stories all the time: lost mornings spent refreshing sign-in screens, hours wasted when files wouldn’t upload, stalled projects because a workflow silently failed. A single outage doesn’t just delay one person’s task; it can strand entire teams across procurement, finance, or client services. The hidden cost is that people still show up to do their work, but the systems they rely on won’t let them. That gap between willing employees and failing technology is what makes these episodes so damaging.

Service status dashboards exist to provide some visibility, and vendors update them when widespread incidents occur. But anyone who’s lived through one of these outages knows how limited that feels. You can watch the dashboard turn from yellow to green, but none of that gives lost time or missed deadlines back.

The hardest lesson is that outages strike on their own schedule. They might hit overnight when almost no one notices, or they might land in the middle of your busiest reporting cycle, when every hour counts. Either way, the outcome is the same: you can’t bill for downtime, you can’t invoice clients on time, and your vendor isn’t compensating for the gap.

That raises a practical question: if vendors don’t make you whole for lost time, how do you protect your business? This is where planning on your own side matters. For instance, if your team can reasonably run a daily export of submission data into a CSV or keep a simple paper fallback for critical approvals, those steps may buy you breathing room when systems suddenly lock up. Those safeguards work best when they come from practices you already own, rather than from waiting on a provider’s recovery. (If you’re considering one of these mitigations, think carefully about which fits your workflows; it only helps if the fallback itself doesn’t create new risks.)

The truth is that downtime costs far more than the minutes or hours of disruption. It reshapes schedules, inflates stress, and forces leadership into reactive mode. A single failed app submission can cascade upward into late compliance reports, which then spill into board meetings or client promises you now struggle to keep. Meanwhile, employees left idle grow increasingly disengaged. That secondary wave of frustration and lost confidence in the tools is as damaging as the technical outage itself.

For managers, these failures expose a harsh reality: during an outage, you hold no leverage. You submit a ticket, escalate the issue, watch the service health updates shift, but at best you’re waiting for a fix. The contract you accepted earlier typically spells it out: recovery is best effort, not a guarantee, and the lost productivity is yours alone.

And that frustration leads to a bigger realization. These breakdowns don’t always exist in isolation. Often, one failed service drags down others connected beneath the surface, even ones you may not realize depended on the same backbone. That’s when the real complexity of software failure shows itself: not in a single app going silent, but in how many other systems topple when that silence begins.
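
As a rough illustration of the daily CSV export mentioned in this section, here is a minimal Python sketch. It assumes the submission rows have already been pulled from wherever they live (a SharePoint list, for example) into a list of dictionaries; how you fetch them is outside this sketch. The function name `export_submissions`, the file naming pattern, and the output folder are hypothetical choices for illustration, not part of any Microsoft tooling.

```python
import csv
from datetime import date
from pathlib import Path


def export_submissions(rows, out_dir, day=None):
    """Write submission rows to <out_dir>/submissions-YYYY-MM-DD.csv.

    `rows` is a list of dicts (hypothetical shape: one dict per submission).
    Returns the path of the finished file.
    """
    day = day or date.today()
    out_path = Path(out_dir) / f"submissions-{day.isoformat()}.csv"
    out_path.parent.mkdir(parents=True, exist_ok=True)

    # Collect column names in first-seen order so rows with
    # missing or extra fields still export cleanly.
    fieldnames = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)

    # Write to a temporary file first, then rename it, so an
    # interrupted run never leaves a half-written export behind.
    tmp_path = out_path.with_name(out_path.name + ".tmp")
    with tmp_path.open("w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(rows)
    tmp_path.replace(out_path)
    return out_path
```

If you try something like this, run it from a scheduler you control and point `out_dir` at storage that does not depend on the same tenant; otherwise the fallback goes down with the service it is meant to cover.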

The Hidden Web of Dependencies

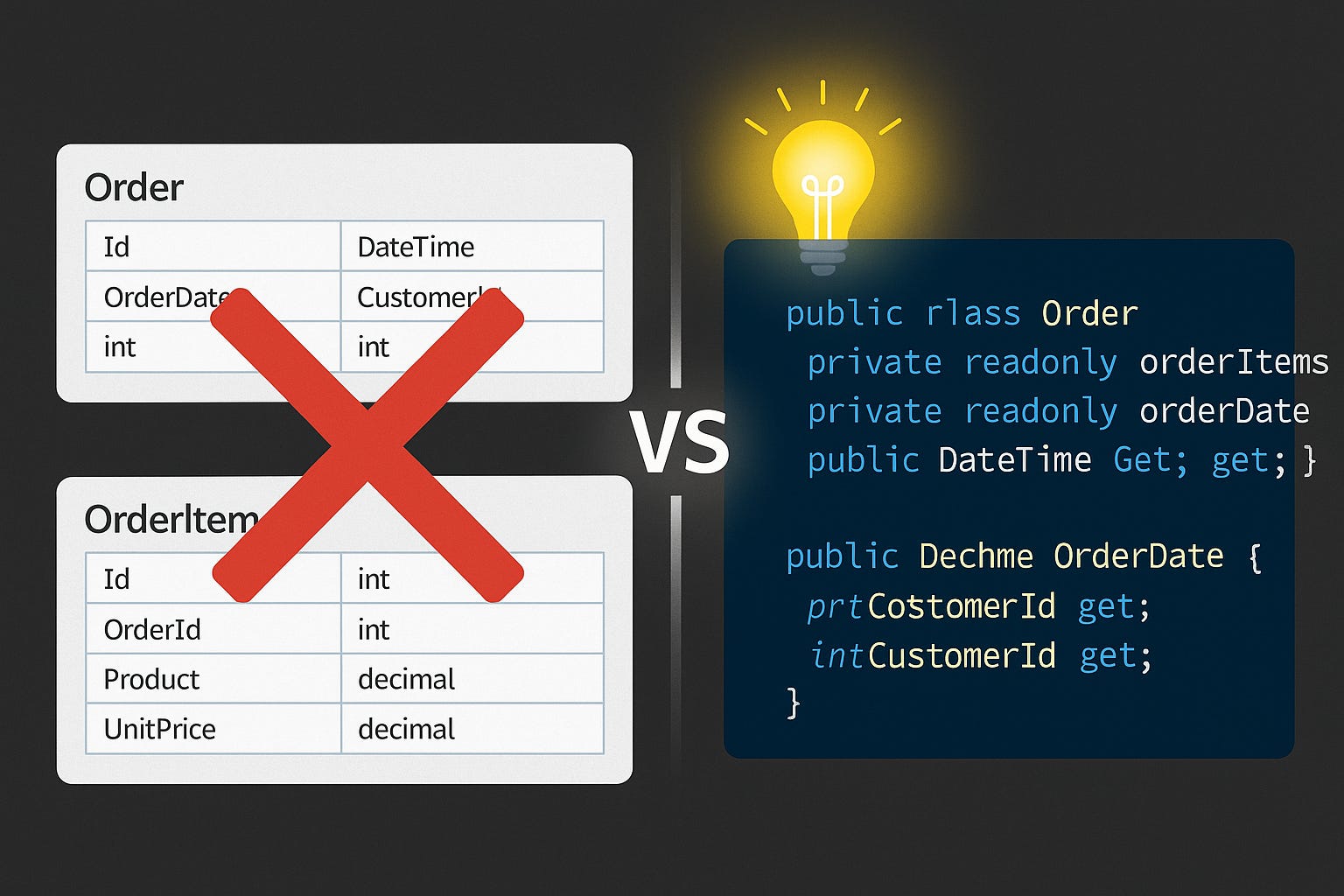

Ever notice how an outage in one Microsoft 365 app sometimes drags others down with it? Exchange might slow, and suddenly Teams calls start glitching too. On paper those look like separate services. In practice, they share deep infrastructure, tied through the same supporting components. That’s the hidden web of dependencies: the behind‑the‑scenes linkages most people don’t see until service disruption spreads into unexpected places.

This is what turns downtime from an isolated hiccup into a chain reaction. Services rarely live in airtight compartments. They rely on shared foundations like authentication, storage layers, or routing. A small disturbance in one part can ripple further than users anticipate. Imagine a row of dominos: tip the wrong one, and motion flows down the entire line. For IT, understanding that cascade isn’t about dramatic metaphors; it’s about identifying which few blocks actually hold everything else up. A useful first step: make yourself a one‑page checklist of those core services so you always know which dominos matter most.

Take identity, for instance. Your tenant’s identity service (e.g., Microsoft Entra ID, formerly Azure AD) controls the keys to almost everything. If the sign‑in process fails, you don’t just lose Teams or Outlook; you may lose access to practically every workload connected to your tenant. From a user’s perspective, the detail doesn’t matter; they just say “nothing works.” From an admin’s perspective, this makes troubleshooting simple: if multiple Microsoft apps su