Agentic AI Is Rewriting DevOps

Description

What if your software development team had an extra teammate—one who never gets tired, learns faster than anyone you know, and handles the tedious work without complaint? That’s essentially what Agentic AI is shaping up to be. In this video, we’ll first define what Agentic AI actually means, then show how it plays out in real .NET and Azure workflows, and finally explore the impact it can have on your team’s productivity. By the end, you’ll know one small experiment to try in your own .NET pipeline this week. But before we get to applications and outcomes, we need to look at what really makes Agentic AI different from the autocomplete tools you’ve already seen.

What Makes Agentic AI Different?

What sets Agentic AI apart is not just that it can generate code, but that it operates more like a system of teammates with distinct abilities. To make sense of this, we can break it down into three key traits: the way each agent holds context and memory, the way multiple agents coordinate like a team, and the difference between simple automation and true adaptive autonomy.

First, let’s look at what makes an individual agent distinct: context, memory, and goal orientation. Traditional autocomplete predicts the next word or line, but it forgets everything else once the prediction is made. An AI agent instead carries an understanding of the broader project. It remembers what has already been tried, knows where code lives, and adjusts its output when something changes. That persistence makes it closer to working with a junior developer: someone who learns over time rather than just guessing what you want in the moment. The key difference here is between predicting and planning. Instead of reacting to each keystroke in isolation, an agent keeps track of goals and adapts as situations evolve.

Next is how multiple agents work together. A common misunderstanding is to think of Agentic AI as a souped-up script or macro that just automates repetitive tasks. But in real software projects, work is split across different roles: architects, reviewers, testers, operators. Agents can mirror this division, each handling one part of the lifecycle with consistent recall of project context. Imagine one agent dedicated to system design, proposing architecture patterns and frameworks that fit business goals. Another reviews code changes, spotting issues while staying aware of the entire project’s history. A third could expand test coverage based on user data, generating test cases without you having to request them. Each agent is specialized, but they coordinate like a team: always available, consistent, and easy to scale with workload.
Where humans lose energy, context, or focus, agents remain steady and recall details consistently.

The last piece is the distinction between automation and autonomy. Automation has long existed in development: think scripts, CI/CD pipelines, and templates. These are rigid by design. They follow exact instructions, step by step, but they break when conditions shift unexpectedly. Autonomy takes a different approach. AI agents can respond to changes on the fly, adjusting when a dependency version changes or reconsidering a service choice when cost constraints come into play. Instead of executing predefined paths, they make decisions under dynamic conditions. It’s a shift from static execution to adaptive problem-solving.

The downstream effect is that these agents go beyond waiting for commands. They can propose solutions before issues arise, highlight risks before they make it into production, and draft plans that save hours of setup work. If today’s GitHub Copilot can fill in snippets, tomorrow’s version acts more like a project contributor: laying out roadmaps, suggesting release strategies, even flagging architectural decisions that may cause trouble down the line. That does not mean every deployment will run without human input, but it can significantly reduce repetitive intervention and give developers more time to focus on the creative, high-value parts of a project.

It helps to be precise about the claim. Instead of asking, “What happens when provisioning Azure resources doesn’t need a human in the loop at all?”, a more accurate statement is, “These tools can lower the amount of manual setup needed, while still keeping key guardrails under human control.” The outcome is still transformative, without suggesting that human oversight disappears completely.

The bigger realization is that Agentic AI is not just another plugin that speeds up a task here or there.
It begins to function like an actual team member, handling background work so that developers aren’t stuck chasing details that an always-on counterpart could have tracked. The capacity of the whole team gets amplified, because key domains have digital agents working alongside human specialists.

Understanding the theory is important, but what really matters is how this plays out in familiar environments. So here’s the question: what actually changes on day one of a new project when agents are active from the start? Next, we’ll look at a concrete scenario inside the .NET ecosystem where those shifts start showing up before you’ve even written your first line of code.
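
The contrast between automation and autonomy described above can be made concrete with a small sketch. It is an illustration only, written in Python for brevity rather than C#, and it uses plain rules where a real agent would rely on a model to plan. Every name in it (`Conditions`, `PINNED_VERSION`, `COST_BUDGET_USD`, `scripted_step`, `adaptive_step`) is hypothetical and does not come from any Copilot or Azure API.

```python
from dataclasses import dataclass

# Hypothetical values chosen for illustration only.
PINNED_VERSION = "8.0.0"
COST_BUDGET_USD = 500.0


@dataclass
class Conditions:
    """Snapshot of what a pipeline step observes (fields are assumptions)."""
    dependency_version: str
    monthly_cost_usd: float


def scripted_step(conditions: Conditions) -> str:
    """Rigid automation: one predefined path that fails on anything unexpected."""
    if conditions.dependency_version != PINNED_VERSION:
        raise RuntimeError(
            f"expected {PINNED_VERSION}, got {conditions.dependency_version}"
        )
    return "deploy"


def adaptive_step(conditions: Conditions) -> dict:
    """Adaptive sketch: propose adjustments instead of failing,
    and flag any revised plan for human approval."""
    proposals = []
    if conditions.dependency_version != PINNED_VERSION:
        proposals.append(
            "re-run tests against " + conditions.dependency_version
        )
    if conditions.monthly_cost_usd > COST_BUDGET_USD:
        proposals.append("propose a cheaper service tier")
    return {
        "action": "revise plan" if proposals else "deploy",
        "proposals": proposals,
        # Guardrail: a person signs off whenever the plan changes.
        "needs_human_approval": bool(proposals),
    }
```

Given a changed dependency version and a cost overrun, `scripted_step` raises an error, while `adaptive_step` returns a revised plan with two proposals and marks it as needing human approval. That mirrors the framing above: less manual intervention, with key guardrails still under human control.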

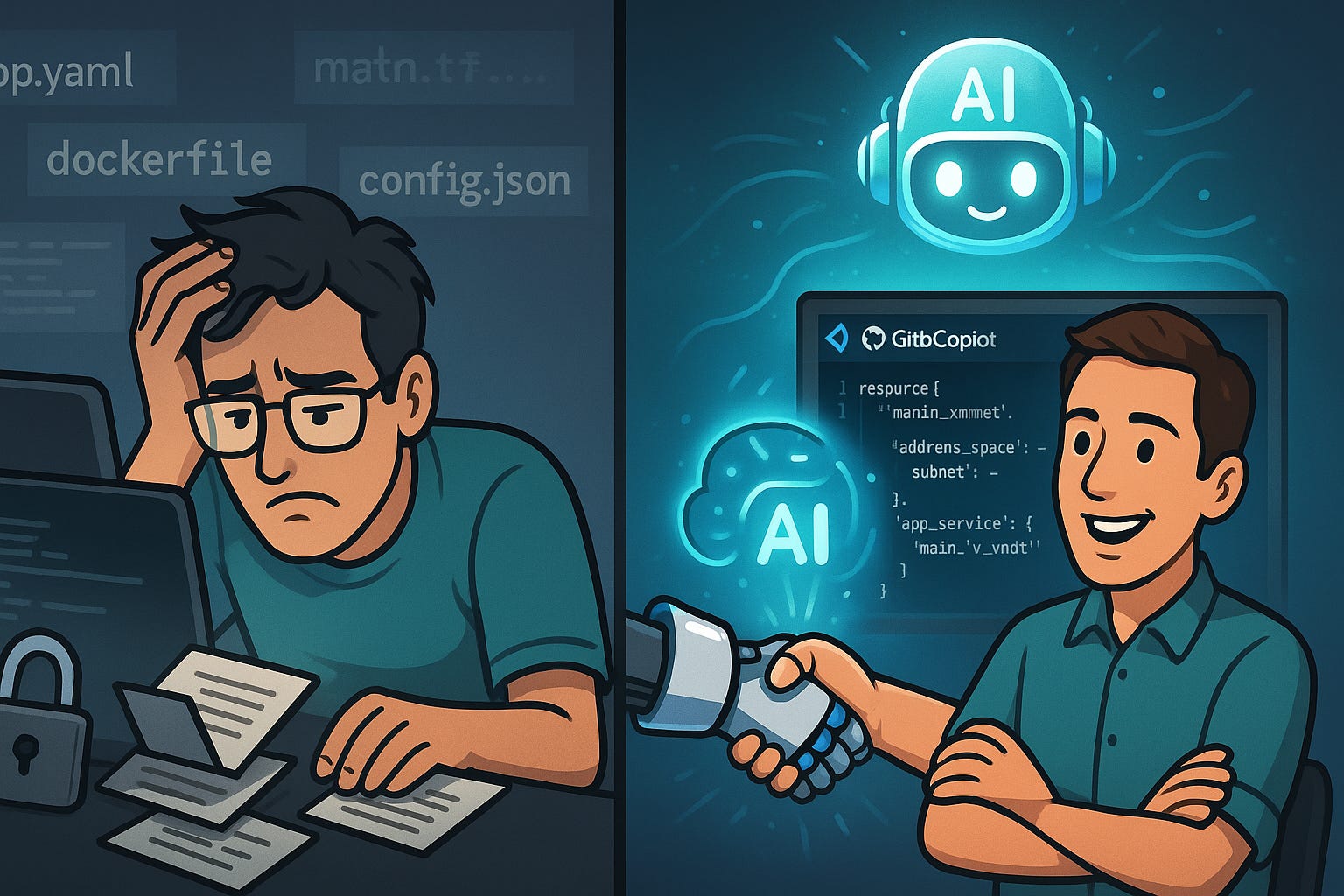

Reimagining the Developer Workflow in .NET

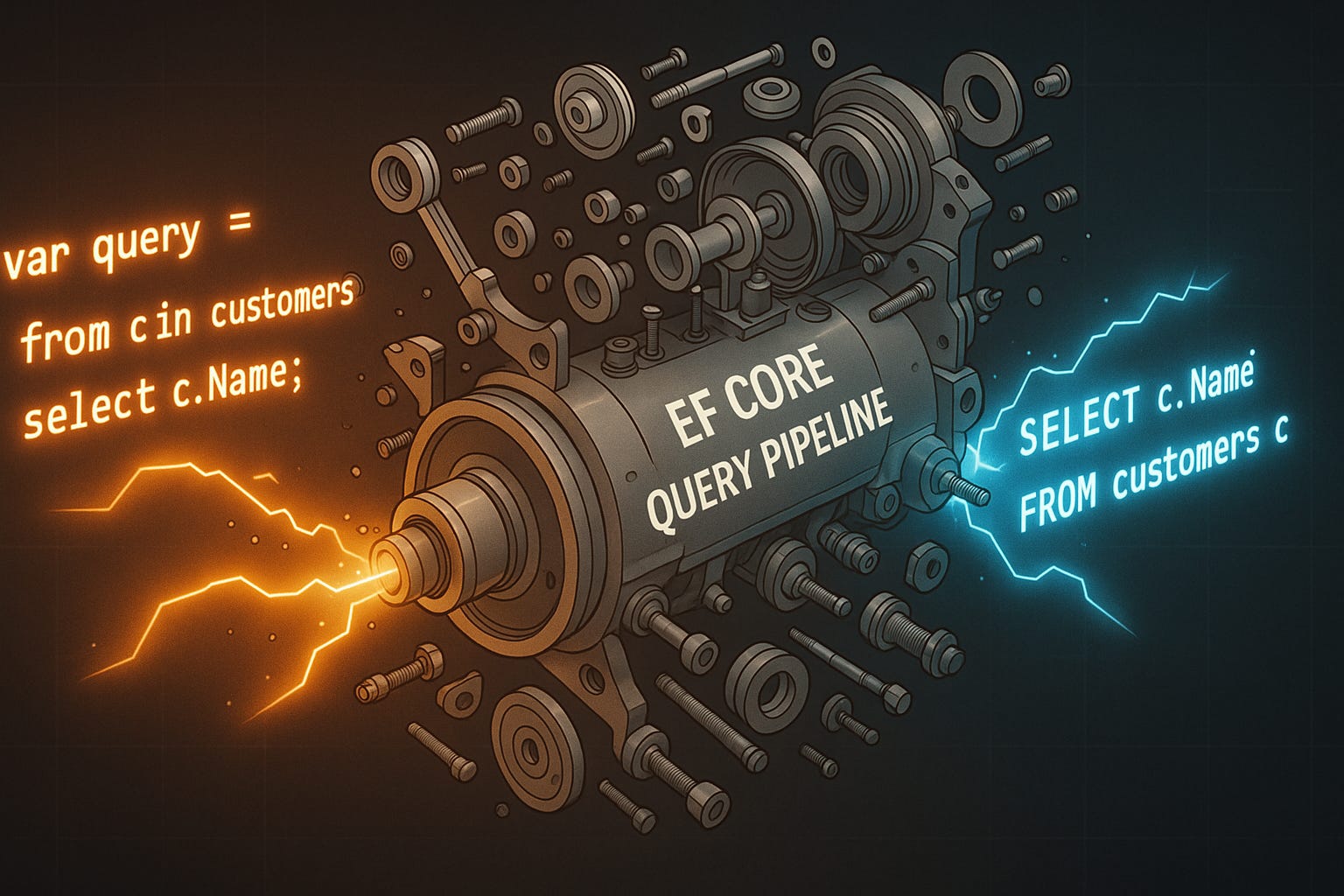

In .NET development, the most visible shift starts with how projects get off the ground. Reimagining the developer workflow here comes down to three tactical advantages: faster architecture scaffolding, project-level critique as you go, and a noticeable drop in setup fatigue.

First is accelerated scaffolding. Instead of opening Visual Studio and staring at an empty solution, an AI agent can propose architecture options that fit your specific use case. Planning a web API with real-time updates? The agent suggests a clean layered design and flags how SignalR naturally fits into the flow. For a finance app, it lines up Entity Framework with strong type safety and Microsoft Entra ID (formerly Azure Active Directory) integration before you’ve created a single folder. What normally takes rounds of discussion or hours of research is condensed into a few tailored starting points. These aren’t final blueprints, though; they’re drafts. Teams should validate each suggestion by running a quick checklist: does authentication meet requirements, is logging wired correctly, are basic test cases in place? That light-touch governance helps ensure speed doesn’t come at the cost of stability.

The second advantage is ongoing critique. Think of it less as “code completion” and more as an advisor watching for design alignment. If you spin up a repository pattern for data access, the agent flags whether you’re drifting from separation of concerns. Add a new controller, and it proposes matching unit tests or highlights inconsistencies with the rest of the project. Instead of leaving you with boilerplate, it nudges the shape of your system toward maintainable patterns with each commit.

For a practical experiment, try enabling GitHub Copilot in Visual Studio on a small ASP.NET Core prototype. Then compare how long it takes you to serve the first meaningful request (one endpoint with authentication and data persistence) versus doing everything manually.
It’s not a guarantee of time savings, but running the side-by-side exercise in your own environment is often the quickest way to gauge whether these agents make a material impact.

The third advantage is reduced setup and cognitive load. Much of early project work is repetitive: wiring authentication middleware, pulling in NuGet packages, setting up logging with Application Insights, authoring YAML pipelines. An agent can scaffold those pieces immediately, including stub integration tests that account for the dependencies already present. That doesn’t remove your control; it shifts where your energy goes. Instead of wrestling with configuration files for a day, you spend that time implementing the business logic that actually matters. The fatigue of setup work drops away, leaving bandwidth for creative design decisions rather than mechanical tasks.

Where this feels different from traditional automation is in flexibility. A project template gives you static defaults; an agent adapts its scaffolding based on your stated business goal. If you’re building a collaboration app, caching with Redis and event-driven design with Azure Service Bus appear in the scaffolded plan. If you shift toward scheduled workloads, background services and queue processing show up instead. That responsiveness separates Agentic AI from simple scripting, offering recommendations that mirror the role of a senior team member helping guide early decisions.

The contrast with today’s use of Copilot is clear. Right now, most developers see it as a way to speed through common syntax or boilerplate: they ask a question, the tool fills in a line. With agent capabilities, the tool starts advising at the system level, offering context-aware alternatives and surfacing trade-offs early in the cycle. The leap is from “generating snippets” to “curating workable designs,” and that changes not just how code gets written but how teams frame the entire solution before they commit to a single direction.
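
The difference between a static template and goal-aware scaffolding can be sketched in a few lines. This is a toy illustration in Python, not how Copilot or any agent actually works: simple keyword rules stand in for the reasoning an agent would do, and all names (`STATIC_TEMPLATE`, `GOAL_RULES`, `scaffold_plan`, `unmet_checks`) are hypothetical. The component lists are taken from the examples in this section, and the checklist is the quick validation pass suggested earlier (authentication, logging, tests).

```python
# A fixed template: the same defaults regardless of what you are building.
STATIC_TEMPLATE = [
    "ASP.NET Core Web API",
    "logging",
    "authentication middleware",
]

# Hypothetical keyword rules standing in for an agent's reasoning.
GOAL_RULES = {
    "collaboration": [
        "Redis caching",
        "event-driven design with Azure Service Bus",
    ],
    "real-time": ["SignalR"],
    "scheduled": ["background services", "queue processing"],
}

# The quick review checklist a team runs over any draft plan.
REVIEW_CHECKLIST = ("authentication", "logging", "tests")


def scaffold_plan(goal: str) -> list:
    """Start from the static defaults, then add components
    whose keyword appears in the stated business goal."""
    plan = list(STATIC_TEMPLATE)
    lowered = goal.lower()
    for keyword, components in GOAL_RULES.items():
        if keyword in lowered:
            plan.extend(c for c in components if c not in plan)
    return plan


def unmet_checks(plan: list) -> list:
    """Return checklist items that no component in the plan mentions."""
    text = " ".join(plan).lower()
    return [item for item in REVIEW_CHECKLIST if item not in text]
```

Calling `scaffold_plan("Build a collaboration app")` adds the Redis and Service Bus entries to the defaults, while a goal mentioning scheduled workloads adds background services and queue processing instead. Running `unmet_checks` on either plan reports that tests are still missing, which is the kind of gap the human review step is there to catch.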
None of this removes the need for human judgment. Agents can suggest frameworks, dependencies, and practices, but verifying them is still on the team. Treat each recommendation as a draft proposal. Accept the pieces that align with your standards, revise the ones that don’t, and capture lessons for the next project iteration. The AI handles the repetitive heavy lifting, while team members stay focused on aligning technology choices with strategy.

So far, we’ve looked at how agents reshape the coding experience inside .NET itself. But agent involvement doesn’t end at solution design or project scaffolding. Once the groundwork is in place, the same intelligence begins extending outward, into provisioning, deploying, and managing the infrastructure those appli