Ganna Pogrebna on behavioural data science, machine bias, digital twins vs digital shadows, and stakeholder simulations (AC Ep23)

Description

“It’s very important to understand that human data is part of the training data for the algorithm, and it carries all the issues that we have with human data.”

–Ganna Pogrebna

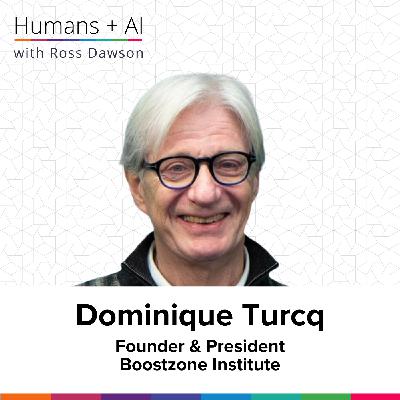

About Ganna Pogrebna

Ganna Pogrebna is a Research Professor of Behavioural Business Analytics and Data Science at the University of Sydney Business School, the David Trimble Chair in Leadership and Organisational Transformation at Queen’s University Belfast, and the Lead for Behavioural Data Science at the Alan Turing Institute. She has published extensively in leading journals, and her many awards include the Asia-Pacific Women in AI Award and the UK TechWomen100.

What you will learn

- The fundamentals of behavioral data science and how human values influence AI systems

- How human bias is embedded in algorithmic decision-making, with real-world examples

- Strategies for identifying, mitigating, and offsetting biases in both human and machine decisions

- Why effective use of AI requires context-rich prompting and critical thinking, not just simple queries

- Pitfalls of relying on generative AI for precise or factual outputs, and how to avoid common mistakes

- How human-AI teams can be structured for optimal collaboration and better outcomes

- The role of simulation tools and digital twins in improving strategic decisions and stakeholder understanding

- Best practices for training AI with high-quality behavioral data and safely leveraging AI assistants in organizations

Episode Resources

Transcript

Ross Dawson: Ganna, it is wonderful to have you on the show.

Ganna Pogrebna: Yeah, it’s great to be here. Thanks for inviting me.

Ross Dawson: So you are a behavioral data scientist. Let’s start off by saying, what is a behavioral data scientist? And what does that mean in a world where AI has come along?

Ganna Pogrebna: Yeah, that’s right. That’s a loaded term, I guess—lots of words there. But what that kind of boils down to is, I’m trying to make machines more human, if you will. Basically, making sure that machines and algorithms are built based on our values and things that we are interested in as humans.

So that’s kind of what it is. My background is in decision theory. I’m an economist by training, but in 2013 I got a job in an engineering department, and my professional transformation started from there. I got involved in a lot of engineering projects, and my work became more and more data science-focused.

Now, what I do is called behavioral data science. Back in the day, in 2013, they just asked me, “What do you want to be called?” and I thought, okay, I do behavior and I do data science, so how about behavioral data scientist?

Ross Dawson: Sounds good to me. So unpacking a little bit of what you said before—you’re saying you make machines more like humans, so that means you are using data about human behavior in order to inform how the systems behave. Is that correct?

Ganna Pogrebna: Yeah, that’s correct. In any setting, a business setting for example, many people do not realize that practically all the data we feed into machines is human data. Take any algorithm, whether it’s image recognition or decision support: it is all based on human data. Effectively, some humans labeled a dataset, and that normally goes into an algorithm. Of course, an algorithm is a formula, but at the core of it, there is always some human data, and most of the time we don’t understand that.

We kind of think that algorithms just work on their own, but it’s very important to understand that human data is part of the training data for the algorithm, and it carries all the issues that we have with human data. For example, we know that humans are biased in many ways, right? All of these biases actually end up ultimately in the algorithm if you don’t take care of it at the right time.

If you want, I can give you a classic example with the Amazon algorithm—I’m sure you’ve heard of it. Amazon trained an HR algorithm for hiring, specifically for the software engineering department, and every single person in that department was male. So if you sent this algorithm a female CV with something like a “Women in Data” award or a female college, it would significantly disadvantage the candidate based on that. It carried gender discrimination within the algorithm because it was trained on their own human data.
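Editor's illustration: the mechanism described above can be sketched with a toy model. This is not Amazon's system, whose details are not given in the conversation. The data, the keyword tokens, and the `train` and `score` helpers are all hypothetical, and the scorer is a simple smoothed log-odds model chosen only because it is easy to read. The point it shows is that when past human decisions are the training labels, a token that only ever appeared on rejected CVs picks up a negative weight.

```python
from collections import Counter
import math

# Hypothetical toy data: (CV keywords, past human hiring decision).
# The labels mirror a team that historically hired only men, so the
# token "women" appears only on rejected CVs.
HISTORY = [
    ({"python", "backend", "chess"}, 1),
    ({"java", "backend"}, 1),
    ({"python", "ml"}, 1),
    ({"python", "ml", "women"}, 0),
    ({"java", "backend", "women"}, 0),
    ({"sales"}, 0),
]


def train(history):
    """Learn one log-odds weight per token, with add-one smoothing."""
    hired, rejected = Counter(), Counter()
    n_hired = sum(1 for _, label in history if label == 1)
    n_rejected = len(history) - n_hired
    for tokens, label in history:
        (hired if label == 1 else rejected).update(tokens)
    vocab = set(hired) | set(rejected)
    return {
        token: math.log((hired[token] + 1) / (n_hired + 2))
        - math.log((rejected[token] + 1) / (n_rejected + 2))
        for token in vocab
    }


def score(weights, tokens):
    """Sum the learned weights of the tokens on a CV (unknown tokens count as 0)."""
    return sum(weights.get(token, 0.0) for token in tokens)


weights = train(HISTORY)
base = score(weights, {"python", "ml"})
with_award = score(weights, {"python", "ml", "women"})
print(f"same skills: {base:.2f}; same skills plus 'women' token: {with_award:.2f}")
```

Two CVs with identical skills receive different scores purely because of the extra token. Nothing in the code mentions gender; the penalty comes entirely from the historical labels.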

Ross Dawson: Yeah, well, that’s one of the big things, as I’ve been saying since the outset, is that AI is tr