Paula Goldman on trust patterns, intentional orchestration, enhancing human connection, and humans at the helm (AC Ep14)

Description

“The potential is boundless, but it doesn’t come automatically; it comes intentionally.”

–Paula Goldman

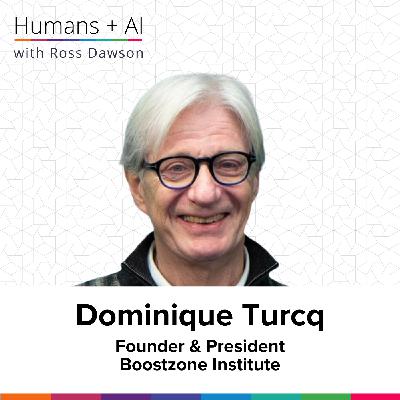

About Paula Goldman

Paula Goldman is Salesforce’s first-ever Chief Ethical and Humane Use Officer, where she creates frameworks to build and deploy ethical technology for optimum social benefit. Prior to Salesforce, she held leadership roles at the global social impact investment firm Omidyar Network. Paula holds a Ph.D. from Harvard University and is a member of the National AI Advisory Committee of the US Department of Commerce.

What you will learn

Redefining ethics as trust in technology

Designing AI with intentional human oversight

Building justifiable trust through testing and safeguards

Balancing automation with uniquely human tasks

Starting small with minimum viable AI governance

Involving diverse voices in ethical AI decisions

Envisioning AI that enhances human connection and creativity

Episode Resources

Transcript

Ross Dawson: Paula, it is fantastic to have you on the show.

Paula Goldman: Oh, I’m so excited to have this conversation with you, Ross.

Ross: So you have a title which includes the words “ethical and humane use.” So what is humane use of technology and AI?

Paula: Well, it’s interesting, because Salesforce created this Office of Ethical and Humane Use of Technology around seven years ago, and that was before this current wave of AI. But it was created with this (I don’t want to say premonition) recognition that as technology advances, we need to be asking ourselves sophisticated questions: how we design it and how we deploy it, how we make sure it’s having its intended outcome, how we avoid unintended harm, how we bring in the views of different stakeholders, and how we’re transparent about that process.

So that’s really the intention behind the office.

Ross: Well, we’ll come back to that, because I think the humane, the humanity, is important. Ethics is the other part of your role. Most people think of ethics as working out what we shouldn’t do. But of course, ethics is also about having a positive impact, not just avoiding the negative impact.

So how do you frame this: how can we build and implement technologies in ways that have a net benefit, as opposed to just avoiding the negatives?

Paula: Well, I love this question. I love it a lot because one of my secrets is that I don’t love the word “ethics” to describe our work. Not that it isn’t appropriate, but the word I like much more is trust: trustworthy technology.

So what happens when you build, especially given how quickly AI is evolving and how hard it can be for people to understand what’s going on under the hood? How do you design technology so that people understand how it works, know how to get the best from it, know where it might go wrong, and know what safeguards they should implement?

When you frame this exercise like that, it becomes a source of innovation. It becomes a design constraint that breeds all kinds of really cool innovations in our technology, which we call trust patterns. For example, we have a set of customizable safeguards for our customers that we call our trust layer.

And this is one of our differentiators as we go to market. These are features that allow people to protect the privacy of their data, make sure the tone of the AI’s output stays on brand, or monitor and tune the accuracy of its responses.

So when you think about it like that, it becomes much less of this mental image of a group of people off in the corner asking lofty questions, and much more of an all-of-company exercise where we’re asking, deeply and together with our customers: How do we get this technology to work in a way that really benefits everyone?

Ross: That’s fantastic. Actually, I just created a little framework around trust in AI adoption. It covers trust that I can use this effectively, trust that others around me will use it well in teams, trust that my leaders will use it in appropriate ways, trust from customers, and trust in the AI itself. In many ways, everything’s about trust.

Because a lot of people don’t trust AI, possibly justifiably in some domains. So I’d love to dig a little into how you frame and architect the ability to have justifiable trust.

Paula: Do you mean the justifiable trust from the customers, the end users?

Ross: Well, I think at all those layers. I think these are all important, but that’s a critical one.

Paula: Yeah, I think a lot of it is about—I actually think about our work as sort of having two different levels to it. One is the objective function of reviewing a product.