Joel Pearson on putting human first, 5 rules for intuition, AI for mental imagery, and cognitive upsizing (AC Ep25)

Description

“This is the first time, really, humanity’s had the possibility open up to create a new way of life, a new society—to create this utopia. And I really hope we get it right.”

–Joel Pearson

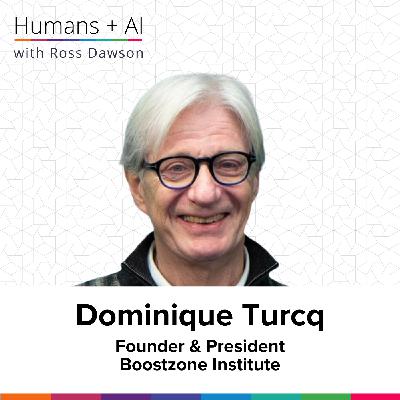

About Joel Pearson

Joel Pearson is Professor of Cognitive Neuroscience at the University of New South Wales, and founder and Director of Future Minds Lab, which does fundamental research and consults on cognitive neuroscience. He is a frequent keynote speaker and the author of The Intuition Toolkit.

What you will learn

- How AI-driven change impacts society and the importance of preparing individuals and organizations for it

- Key principles from neuroscience and psychology for effective AI-specific change management

- The SMILE framework for when to trust intuition versus AI recommendations

- Why designing AI to augment, not replace, human skills is essential for a thriving future

- How visual mental imagery and AI-generated visuals can support cognition and personal development

- The risks and opportunities of outsourcing thinking to AI, and strategies for maintaining critical thinking

- The role of metacognition and emotional self-awareness in utilizing AI effectively and ethically

- Emerging therapeutic and creative potentials of AI in personal transformation and human flourishing

Transcript

Ross Dawson: Joel, it is awesome to have you on the show.

Joel Pearson: My pleasure Ross. Good to be here with you.

Ross: So we live in a world of pretty fast change where AI is a significant component of that, and you’re a neuroscientist, and I think with a few other layers to that as well. So what’s your perspective on how it is we are responding and could respond to this change engendered by AI?

Joel: Yeah, so that’s the big question at the moment that I think a lot of us are facing. There’s a lot of change coming down the pipeline, and I think it’s going to filter out and change, over a long enough timeline, a lot of things in a lot of people’s lives, across every stratum of society. And I don’t think we’re ready for that, one, and two, historically, humans are not great at change. People resist it, particularly when they don’t have control over it or don’t initiate it. They get scared of it.

So I do worry that we’re going to need a lot of help through some of these changes as a society, and that’s sort of what we’ve been trying to focus on. So if you buy into the AI idea that, yes, first the digital AI itself is going to take jobs, it’s going to change the way we live, then you have the second wave of humanoid robots coming down the pipeline, perhaps further job losses. And just, you know, we can go through all the kinds of changes that I think we’re going to see—from changes in how the economy works, how education works, what becomes the role of a university. In ten years, it’s going to be very different to what it is now, and just the quality of our life, how we structure our lives, what we have in our homes. All these things are going to change in ways that are, one, hard to predict, and two, the delta—the change through that—is going to be uncomfortable for people.

Ross: So we need to help people through that. So what’s involved? How do we help organizations through this?

Joel: We know a lot about change through the long tradition of corporate change management, even though it’s a corporate way to say it. But we do know that most companies go through this. When they want to change something, they get change management experts in and go through one of the many models on how to change these things, and most of them have certain things in common. Often they start with an education piece, or getting everyone on the same page: why is this happening, so people understand. You help people through the resistance to the change. You try things out. You socialize these changes to make them very normal, normalizing it.

And we know that if you have two companies, let’s say, and one has help with the change and one doesn’t, there’s about a 600% increase in the success of that change when you help the company out. So if you apply that to AI change in a company or a family or a whole nation like Australia, the same logic should hold, right? If we want to go through a big national change (not immediately, but over a ten, fifteen, twenty-year period), then we are going to need change plans to help everyone through this, to help understand what’s happening, what the choices might be. And so that’s kind of the lens I look at the whole thing through: a change, an AI-specific change management kind of piece. Easier said than done.

We probably need government to step up there and start thinking about