Suranga Nanayakkara on augmenting humans, contextual nudging, cognitive flow, and intention implementation (AC Ep16)

Description

“There’s a significant opportunity for us to redesign the technology rather than redesign people.”

–Suranga Nanayakkara

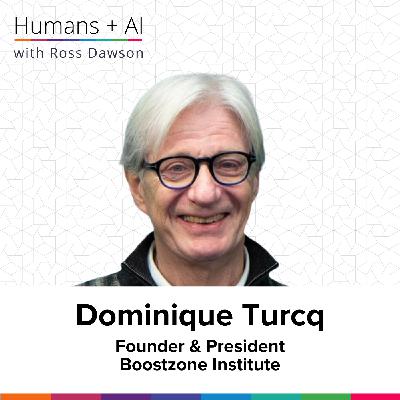

About Suranga Nanayakkara

Suranga Nanayakkara is founder of the Augmented Human Lab and Associate Professor of Computing at National University of Singapore (NUS). Before NUS, Suranga was an Associate Professor at the University of Auckland, appointed by invitation under the Strategic Entrepreneurial Universities scheme. He is founder of a number of startups including AiSee, a wearable AI companion to support blind & low vision people. His awards include MIT TechReview young inventor under 35 in Asia Pacific and Outstanding Young Persons of Sri Lanka.

What you will learn

Redefining human-computer interaction through augmentation

Creating seamless assistive tech for the blind and beyond

Using physiological sensors to detect cognitive load

Adaptive learning tools that adjust to flow states

The concept of an AI-powered inner voice for better choices

Wearable fact-checkers to combat misinformation

Co-designing technologies with autistic and deaf communities

Episode Resources

Transcript

Ross Dawson: Suranga, it’s wonderful to have you on the show.

Suranga Nanayakkara: Thanks, Ross, for inviting me.

Ross: So you run the Augmented Human Lab. I’d love to hear more about what augmented human means to you, and what you are doing in the lab.

Suranga: Right. I started the lab back in 2011, and part of the reasoning is personal. My take on augmentation is that everyone needs assistance. All of us are disabled, one way or the other.

It may be a permanent disability. It may be you’re in a country that you don’t speak the language, you don’t understand the culture. For me, when I first moved to Singapore, I never spoke English. I was very naive to computers, and to the point that I remember very vividly back in the day, Yahoo Messenger had this notification sound of knocking, and I misinterpreted that as being somebody knocking on my door.

That was very, very intimidating. I felt I was not good enough, and that could have been career-defining. With that experience, as I got better with the technology and wanted to set up my lab, I wanted to think of ways to redefine these human-computer interfaces so that they provide assistance, because everyone needs help.

And how do we, instead of just thinking of assistive tech, think of augmenting our abilities depending on your context and your situation? I started the lab as Augmented Senses. We were focusing on sensory augmentation, but a couple of years later, with the lab growing, we created a broader definition of augmenting humans, and that’s when the name became Augmented Human Lab.

Ross: Fantastic. You have so many projects across so many domains that are very interesting and exciting, and I’d like to go through some of those in turn. But the one you just mentioned was around assisting blind people. I’d love to hear more about what that is and how that works.

Suranga: Right. So the inspiration for that project came when I was a postdoc at MIT Media Lab, and there was a blind student who took the same assistive tech class with me. The way he accessed his lecture notes was to browse to a particular app on his mobile phone, open the app, and take a picture, and the app would read out the notes for him.

For him, this was perfect, but for me, observing his interactions, it didn’t make sense. Why would he have to do so many steps before he can access information? And that sparked a thought: what if we take the camera out and put it in a way that it’s always accessible and you need minimum effort?

I started with the camera on the finger. It was a smart ring. You just point and ask questions. That was a golf ball-sized, bulky interface, just to show the concept. As we iterated, it became a wearable headphone which has a camera, a speaker, and a microphone. The camera sees what’s in front of you, the speaker can speak back to you, and the microphone listens to you.

With that, you can enable very seamless interaction for a blind person. Now you can just hold the notes in front of you and ask, “Please read this for me.” Or you might be in front of a toilet and want to know which one is female and which one is male. You can point and ask that question.

So essentially, this device, which we now call AiSee, is a way of providing this very seamless, effortless interaction for blind people to access visual information. And now we realize it’s not just for blind people. I have actually used it myself.

Recently I went to Japan, and I don’t read anything Japanese, and pretty much everything is in Japanese. I went to a pharmacy, I wanted to buy this medic