AI Magic: Shipping 1000s of successful products with no managers and a team of 12 — Jeremy Howard of Answer.ai

Description

Disclaimer: We recorded this episode ~1.5 months ago, timed for the FastHTML release. It then got bottlenecked by the Llama 3.1, Winds of AI Winter, and SAM 2 episodes, so we’re a little late. Since then FastHTML was released, swyx is building an app in it for AINews, and Anthropic has also released their prompt caching API.

Remember when Dylan Patel of SemiAnalysis coined the GPU Rich vs GPU Poor war? (If not, see our pod with him.) The idea was that if you’re GPU poor, you shouldn’t waste your time trying to solve GPU-rich problems (i.e. pre-training large models) and are better off working on fine-tuning, optimized inference, etc. Jeremy Howard (see our “End of Finetuning” episode to catch up on his background) and Eric Ries founded Answer.AI to do exactly that: “Practical AI R&D”, which is very much in line with GPU-poor needs. For example, one of their first releases was a system based on FSDP + QLoRA that let anyone train a 70B model on two NVIDIA 4090s. Since then, they have come out with a long list of super useful projects (in no particular order, and non-exhaustive):

* FSDP QDoRA: this is just as memory-efficient and scalable as FSDP/QLoRA, and, critically, is also as accurate for continued pre-training as full-weight training.

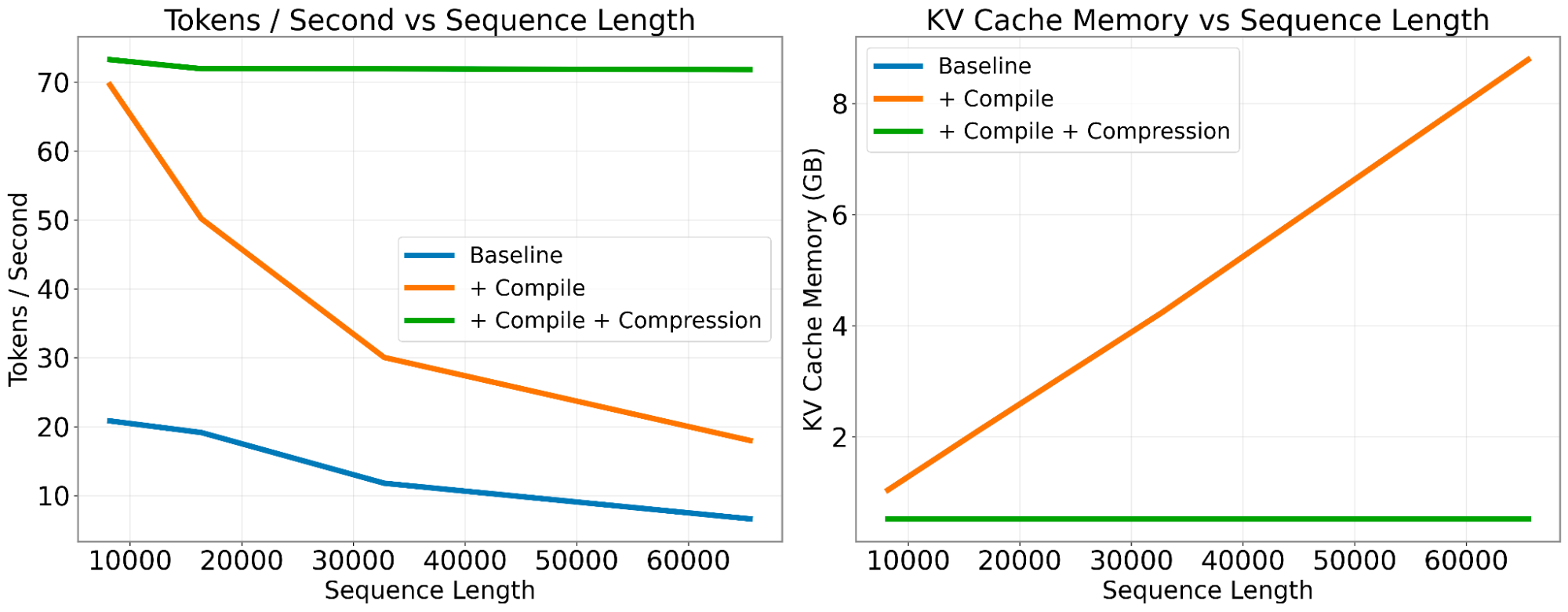

* Cold Compress: a KV cache compression toolkit that lets you scale sequence length without impacting speed.

* colbert-small: a state-of-the-art retriever at only 33M params.

* JaColBERTv2.5: a new state-of-the-art retriever on all Japanese benchmarks.

* gpu.cpp: portable GPU compute for C++ with WebGPU.

* Claudette: a better Anthropic API SDK.
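As a rough sanity check on the claim that FSDP + QLoRA lets a 70B model train on two NVIDIA 4090s, the sketch below estimates only the memory for the frozen base weights on each GPU. It assumes 4 bits per quantized parameter, 24 GB of VRAM per 4090, and an even FSDP shard across both cards. It ignores quantization constants, LoRA adapter weights and their optimizer state, activations, and the temporary all-gather of each layer during forward and backward passes, so real usage is higher. The function names are hypothetical illustrations, not part of any Answer.AI library.

```python
# Back-of-envelope estimate (hypothetical helper names, illustrative assumptions only):
# how much memory the frozen base weights of a 70B model take per GPU when
# quantized and sharded with FSDP. Ignores adapters, optimizer state,
# activations, quantization constants, and per-layer all-gather buffers.


def quantized_weight_gb(n_params: float, bits_per_param: float) -> float:
    """Total size of the base weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


def per_gpu_weight_gb(n_params: float, bits_per_param: float, n_gpus: int) -> float:
    """FSDP shards parameters across ranks, so each GPU stores roughly 1/n_gpus of them."""
    return quantized_weight_gb(n_params, bits_per_param) / n_gpus


N_PARAMS = 70e9      # 70B-parameter model
GPU_MEMORY_GB = 24   # RTX 4090 VRAM, treated as 24e9 bytes (slightly conservative)
N_GPUS = 2

if __name__ == "__main__":
    for bits in (16, 4):
        total = quantized_weight_gb(N_PARAMS, bits)
        shard = per_gpu_weight_gb(N_PARAMS, bits, N_GPUS)
        headroom = GPU_MEMORY_GB - shard
        print(f"{bits:>2}-bit: total {total:.1f} GB, "
              f"per GPU {shard:.1f} GB, headroom {headroom:.1f} GB")
```

Under these assumptions a 16-bit copy would need 70 GB per card and cannot fit, while the 4-bit shard needs 17.5 GB per card, leaving roughly 6.5 GB on each 24 GB card for everything the estimate ignores. Neither technique alone is enough here: 4-bit weights on a single card still need 35 GB, and 16-bit weights sharded across two cards still need 70 GB each.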

They also recently released FastHTML, a new way to create modern interactive web apps. Jeremy also posted a 1-hour “Getting started” tutorial on YouTube; while this isn’t AI-related per se, it’s close to home for any AI Engineer looking to iterate quickly on new products.

In this episode we broke down 1) how they recruit, 2) how they organize what to research, and 3) how the community comes together.

At the end, Jeremy gave us a sneak peek at something new that he’s working on that he calls dialogue engineering:

So I've created a new approach. It's not called prompt engineering. I'm creating a system for doing dialogue engineering. It's currently called AI magic. I'm doing most of my work in this system and it's making me much more productive than I was before I used it.

He explains it a bit more at ~44:53 in the pod, but we’ll just have to wait for the public release to figure out exactly what he means.

Timestamps

* [00:00:00] Intro by Suno AI

* [00:03:02] Continuous Pre-Training is Here

* [00:06:07] Schedule-Free Optimizers and Learning Rate Schedules

* [00:07:08] Governance and Structural Issues within OpenAI and Other AI Labs

* [00:13:01] How Answer.ai works

* [00:23:40] How to Recruit Productive Researchers

* [00:27:45] Building a new BERT

* [00:31:57] FSDP, QLoRA, and QDoRA: Innovations in Fine-Tuning Large Models

* [00:36:36] Research and Development on Model Inference Optimization

* [00:39:49] FastHTML for Web Application Development

* [00:46:53] AI Magic & Dialogue Engineering

* [00:52:19] AI wishlist & predictions

Show Notes

* Previously on Latent Space: The End of Finetuning, NeurIPS Startups

* Fast.ai

* FastHTML

* gpu.cpp

* Yi Tai

* HTMX

* UL2

* BERT

* DeBERTa

* Efficient finetuning of Llama 3 with FSDP QDoRA

* xLSTM

Transcript

Alessio [00:00:00]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO-in-Residence at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol AI.

Swyx [00:00:14]: And today we're back with Jeremy Howard, I think your third appearance on Latent Space. Welcome.

Jeremy [00:00:19]: Wait, third? Second?

Swyx [00:00:21]: Well, I grabbed you at NeurIPS.

Jeremy [00:00:23]: I see.

Swyx [00:00:24]: Very fun, standing outside street episode.

Jeremy [00:00:27]: I never heard that, by the way. You've got to send me a link. I've got to hear what it sounded like.

Swyx [00:00:30]: Yeah. Yeah, it's a NeurIPS podcast.

Alessio [00:00:32]: I think the two episodes are six hours, so there's plenty to listen to; we'll make sure to send it over.

Swyx [00:00:37]: Yeah, we're trying this thing where at the major ML conferences, we, you know, do a little audio tour of, give people a sense of what it's like. But the last time you were on, you declared the end of fine tuning. I hope that I sort of editorialized the title a little bit, and I know you were slightly uncomfo