Segment Anything 2: Demo-first Model Development

Description

Because of the nature of SAM, this is more video heavy than usual. See our YouTube!

Because vision is first among equals in multimodality, and yet SOTA vision language models are closed, we’ve always had an interest in learning what’s next in vision.

Our first viral episode was Segment Anything 1, and we have since covered LLaVA, IDEFICS, Adept, and Reka. But just like with Llama 3, FAIR holds a special place in our hearts as the New Kings of Open Source AI.

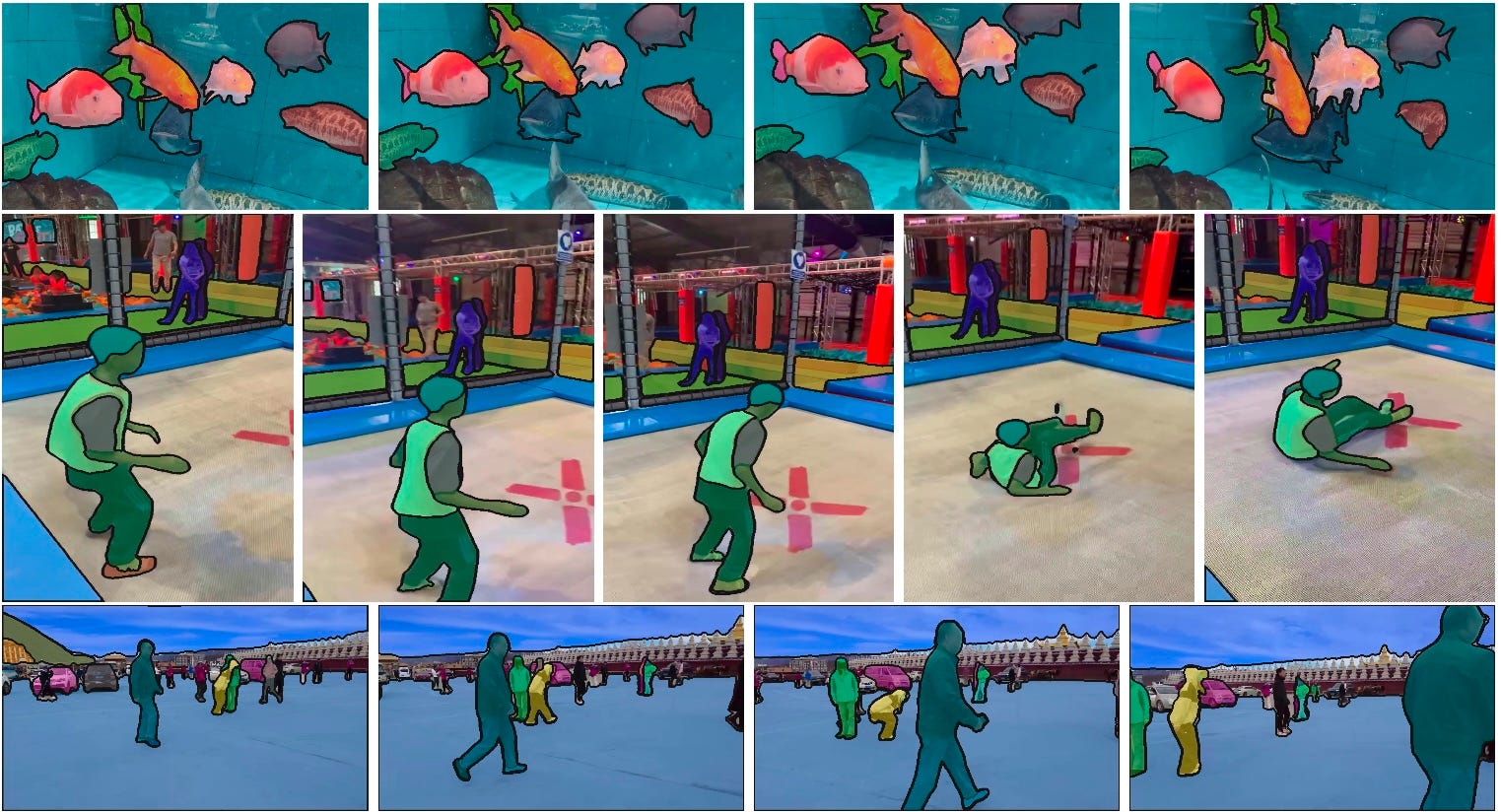

The list of sequels better than the originals is usually very short, but SAM 2 delighted us by not only being a better image segmentation model than SAM 1, but also conclusively and inexpensively solving video segmentation in just as elegant a way as SAM 1 did for images, and releasing everything to the community under Apache 2/CC BY 4.0.

“In video segmentation, we observe better accuracy, using 3x fewer interactions than prior approaches.

In image segmentation, our model is more accurate and 6x faster than the Segment Anything Model (SAM).”

Surprisingly Efficient

The paper reports that SAM 2 was trained on 256 A100 GPUs for 108 hours (59% more than SAM 1). Taking the upper-end $2/hour A100 price from gpulist.ai, SAM 2 would have cost ~$50k to train at external market rates - surprisingly cheap for adding video understanding!
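For anyone who wants to check the napkin math, here is a minimal sketch of the estimate. The GPU count and hours are the figures quoted from the paper; the flat $2 per GPU-hour rate is our assumption, and the function name is just illustrative.

```python
# Back-of-the-envelope training cost from the figures quoted above.
NUM_GPUS = 256          # A100 GPUs (as reported in the paper)
TRAIN_HOURS = 108       # training hours (as reported in the paper)
USD_PER_GPU_HOUR = 2.0  # ASSUMPTION: flat market rate, upper end seen on gpulist.ai


def training_cost_usd(num_gpus, hours, usd_per_gpu_hour):
    """Return (gpu_hours, cost_in_usd) for a run billed at a flat hourly rate."""
    gpu_hours = num_gpus * hours
    return gpu_hours, gpu_hours * usd_per_gpu_hour


gpu_hours, cost = training_cost_usd(NUM_GPUS, TRAIN_HOURS, USD_PER_GPU_HOUR)
print(f"{gpu_hours:,} GPU-hours -> ${cost:,.0f}")
```

That works out to 27,648 GPU-hours and a little over $55k, which is where the rough ~$50k figure comes from. A real bill would also include failed runs, ablations, and data-engine compute, none of which is counted here.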

The newly released SA-V dataset is also the largest video segmentation dataset to date, with careful attention given to scene/object/geographical diversity, including that of annotators. In some ways, we are surprised that SOTA video segmentation can be done on only ~50,000 videos (and 640k masklet annotations).

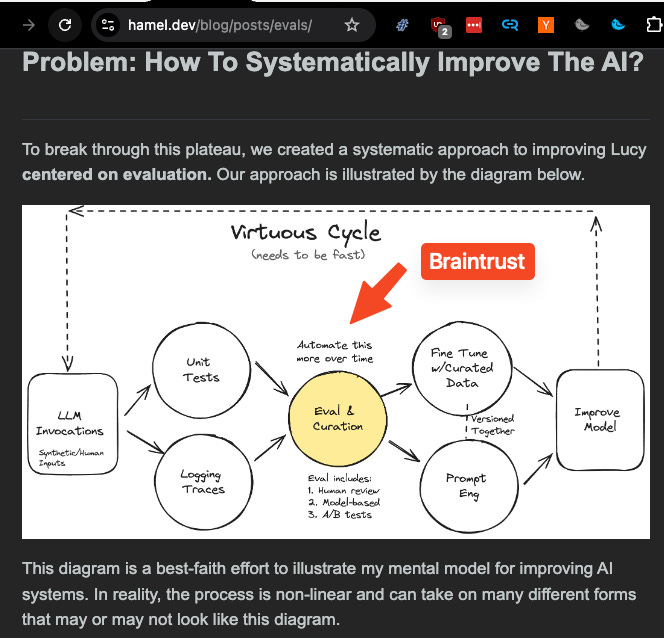

Model-in-the-loop Data Engine for Annotations and Demo-first Development

Similar to SAM 1, a 3-phase data engine helped greatly in bootstrapping this dataset. As Nikhila says in the episode, the demo you see wasn’t just for show; the team actually used this same tool to do annotations for the model that is now demoed in the tool:

“With the original SAM, we put a lot of effort in building a high-quality demo. And the other piece here is that the demo is actually the annotation tool. So we actually use the demo as a way to improve our annotation tool. And so then it becomes very natural to invest in building a good demo because it speeds up your annotation and improves the data quality, and that will improve the model quality. With this approach, we found it to be really successful.”

An incredible 90% speedup in annotation came out of this virtuous cycle, which helped SA-V reach its scale.

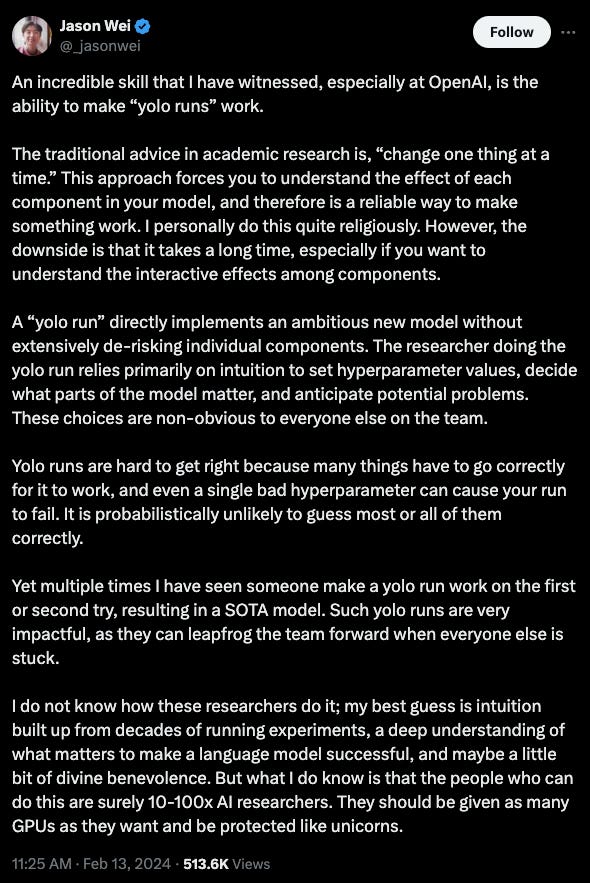

Building the demo also helped the team live the context that their own downstream users, like Roboflow, would experience, and forced them to make choices accordingly.

As Nikhila says:

“It's a really encouraging trend for not thinking about only the new model capability, but what sort of applications folks want to build with models as a result of that downstream.

I think it also really forces you to think about many things that you might postpone. For example, efficiency. For a good demo experience, making it real time is super important. No one wants to wait. And so it really forces you to think about these things much sooner and actually makes us think about what kind of image encoder we want to use or other things, hardware efficiency improvements. So those kind of things, I think, become a first-class citizen when you put the demo first.”

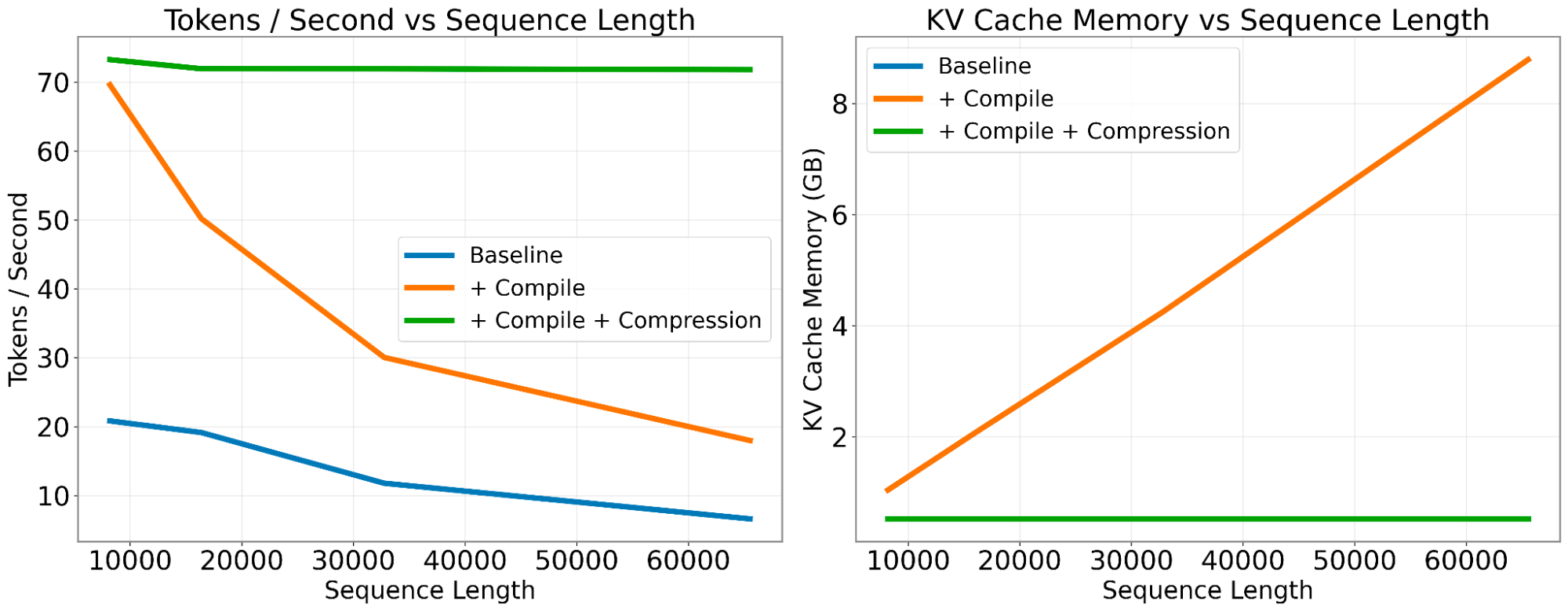

Indeed, the team swapped out standard ViT-H Vision Transformers for Hiera (Hierarchical) Vision Transformers as a result of efficiency considerations.

Memory Attention

Speaking of architecture, the model design is probably the sleeper hit of a project filled with hits. The team adapted SAM 1 to video by adding streaming memory for real-time video processing.

Specifically, they added memory attention, a memory encoder, and a memory bank, which surprisingly ablated better than more intuitive but complex architectures like Gated Recurrent Units.
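To make the streaming-memory idea concrete, here is a toy sketch in plain Python. It is not the SAM 2 implementation: the class and function names are hypothetical, the "memory encoder" is reduced to storing tokens as-is, and the bank is a simple FIFO of recent frames with an illustrative size. It only shows the shape of the loop: each new frame cross-attends over a bounded bank of past frames, then gets written into that bank.

```python
import math
from collections import deque


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def memory_attention(frame_tokens, memory_tokens):
    """Cross-attend each current-frame token over all stored memory tokens."""
    if not memory_tokens:
        # First frame: nothing to attend to, pass features through unchanged.
        return [list(t) for t in frame_tokens]
    scale = 1.0 / math.sqrt(len(frame_tokens[0]))
    out = []
    for q in frame_tokens:
        weights = softmax([dot(q, k) * scale for k in memory_tokens])
        attended = [
            sum(w * v[i] for w, v in zip(weights, memory_tokens))
            for i in range(len(q))
        ]
        # Residual connection: current features plus what memory contributed.
        out.append([qi + ai for qi, ai in zip(q, attended)])
    return out


class StreamingMemory:
    """Toy memory bank: a FIFO of the most recent frames (ASSUMED policy)."""

    def __init__(self, max_frames=6):  # max_frames is an illustrative value
        self.bank = deque(maxlen=max_frames)  # oldest frame is evicted first

    def step(self, frame_tokens):
        memory_tokens = [tok for frame in self.bank for tok in frame]
        conditioned = memory_attention(frame_tokens, memory_tokens)
        # Stand-in for the memory encoder: store the conditioned tokens as-is.
        self.bank.append(conditioned)
        return conditioned


# Usage: stream four tiny "frames" (two 2-d tokens each) through the memory.
mem = StreamingMemory(max_frames=2)
for t in range(4):
    frame = [[float(t), 1.0], [0.0, float(t)]]
    out = mem.step(frame)
print(len(mem.bank))  # stays at max_frames no matter how long the video is
```

The point of the design is that per-frame cost depends on the bank size rather than the video length, which is what makes it a plausible fit for real-time processing.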

One has to wonder if streaming memory can be added to pure language models with a similar approach… (pls comment if there’s an obvious one we haven’t come across yet!)

Video Podcast

Tune in to Latent Space TV for the video demos mentioned in this video podcast!

Show References

* https://sam2.metademolab.com/demo

* https://github.com/autodistill/autodistill

* https://github.com/facebookresearch/segment-anything-2

* https://blog.roboflow.com/label-data-with-grounded-sam-2/

* https://arxiv.org/abs/2408.00714

* https://github.com/roboflow/notebooks

* https://blog.roboflow.com/sam-2-video-segmentation/

Timestamps

* [00:00:00 ] The Rise of SAM by Udio (David Ding Edit)

* [00:03:07 ] Introducing Nikhila

* [00:06:38 ] The Impact of SAM 1 in 2023

* [00:12:15 ] Do People Finetune SAM?

* [00:16:05 ] Video Demo of SAM

* [00:20:01 ] Why the Demo is so Important

* [00:23:23 ] SAM 1 vs SAM 2 Architecture

* [00:26:46 ] Video Demo of SAM on Roboflow

* [00:32:44 ] Extending SAM 2 with other models

* [00:35:00 ] Limitations of SAM: Screenshots

* [00:38:56 ] SAM 2 Paper

* [00:39:15 ] SA-V Dataset and SAM Data Engine

* [00:43:15 ] Memory Attention to solve Video

* [00:47:24 ] "Context Length" in Memory Attention

* [00:48:17 ] Object Tracking

* [00:50:52 ] The Future of FAIR

* [00:52:23 ] CVPR, Trends in Vision

* [01:02:04 ] Calls to Action

Transcript

[00:00:00 ] [music intro]

[00:02:11 ] AI Charlie: Happy Yoga! This is your AI co-host Charlie. Thank you for all the love for our special 1 million downloads Winds of AI Winter episode last week, especially Sam, Archie, Trellis, Morgan, Shrey, Han, and more. For this episode, we have to go all the way back to the first viral episode of the podcast, Segment Anything Model and the Hard Problems of Computer Vision, which we discussed with Joseph Nelson of Roboflow.

[00:02:39 ] AI Charlie: Since Meta released SAM 2 last week, we are delighted to welcome Joseph back as our fourth guest co-host to chat with Nikhila Ravi, Research Engineering Manager at Facebook AI Research and lead author of SAM 2. Just like our SAM 1 podcast, this is a multimodal pod because of the vision element, so we definitely encourage you to hop over to our YouTube at least for the demos, if not our faces.

[00:03:04 ] AI Charlie: Watch out and take care.

[00:03:10 ] Introducing Nikhila

[00:03:10 ] swyx: Welcome to the Latent Space podcast. I'm delighted to do Segment Anything 2. One of our very first viral podcasts was Segment Anything 1 with Joseph. Welcome back. Thanks so much. And this time we are joined by the lead author of Segment Anything 2, Nikki Ravi, welcome.

[00:03:25 ] Nikhila Ravi: Thank you. Thanks for having me.

[00:03:26 ] swyx: There's a whole story that we can refer people back to episode of the podcast way back when for the story of Segment Anything, but I think we're interested in just introducing you as a researcher, as a, on the human side what