Benchmarks 201: Why Leaderboards > Arenas >> LLM-as-Judge

Description

The first AI Engineer World’s Fair talks from OpenAI and Cognition are up!

In our Benchmarks 101 episode back in April 2023 we covered the history of AI benchmarks, their shortcomings, and our hopes for better ones.

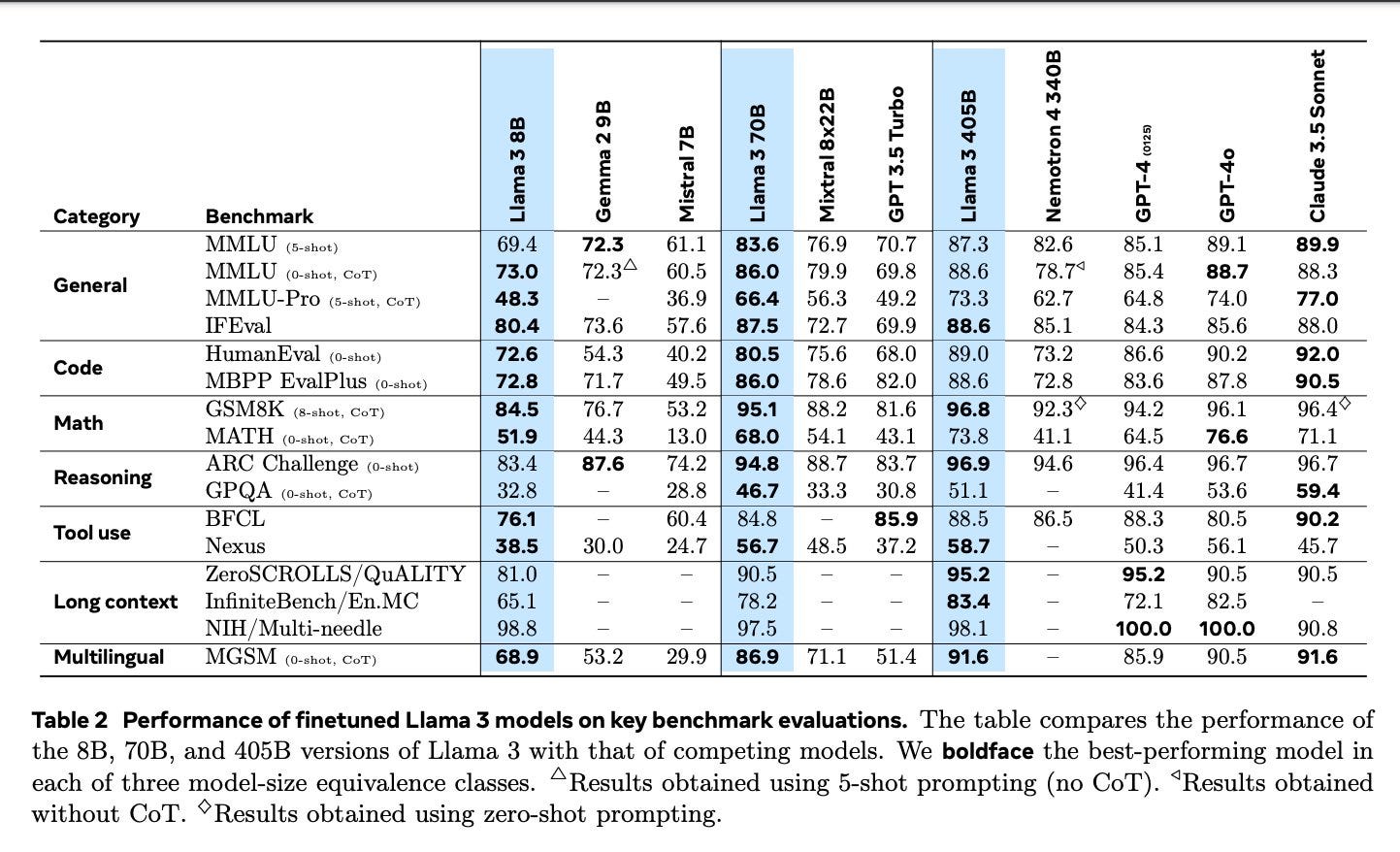

Fast forward 1.5 years, and the pace of model development has far outstripped the pace at which benchmarks are updated. Frontier labs still use MMLU and HumanEval for model marketing, even though most models are plateauing at a ~90% success rate (any higher and they’re probably just memorizing/overfitting).

From Benchmarks to Leaderboards

Beyond being stale, lab-reported benchmarks also suffer from non-reproducibility. Models served through an API change over time, so the same benchmark can return different scores at different points in time.

Today’s guest, Clémentine Fourrier, is the lead maintainer of HuggingFace’s Open LLM Leaderboard. Its goal is to standardize how models are evaluated by curating a set of high-quality benchmarks, and then publishing the results in a reproducible way with tools like EleutherAI’s LM Evaluation Harness.

The leaderboard launched in summer 2023 and quickly became the de facto standard for open source LLM performance. To give you a sense of the scale:

* Over 2 million unique visitors

* 300,000 active community members

* Over 7,500 models evaluated

Last week they announced the second version of the leaderboard. Why? Because models were getting too good!

The new version of the leaderboard is based on 6 benchmarks:

* 📚 MMLU-Pro (Massive Multitask Language Understanding - Pro version, paper)

* 📚 GPQA (Google-Proof Q&A Benchmark, paper)

* 💭 MuSR (Multistep Soft Reasoning, paper)

* 🧮 MATH (Mathematics Aptitude Test of Heuristics, Level 5 subset, paper)

* 🤝 IFEval (Instruction Following Evaluation, paper)

* 🧮 🤝 BBH (Big Bench Hard, paper)

You can read the reasoning behind each of them in their announcement blog post. These updates had some clear winners and losers, with models jumping up or down by as many as 50 spots at once; the most likely reason is that those models were overfit to the v1 benchmarks, or had some contamination in their training dataset.

But the most important change is in the absolute scores. All models score much lower on v2 than they do on v1, which now creates a lot more room for models to show improved performance.

On Arenas

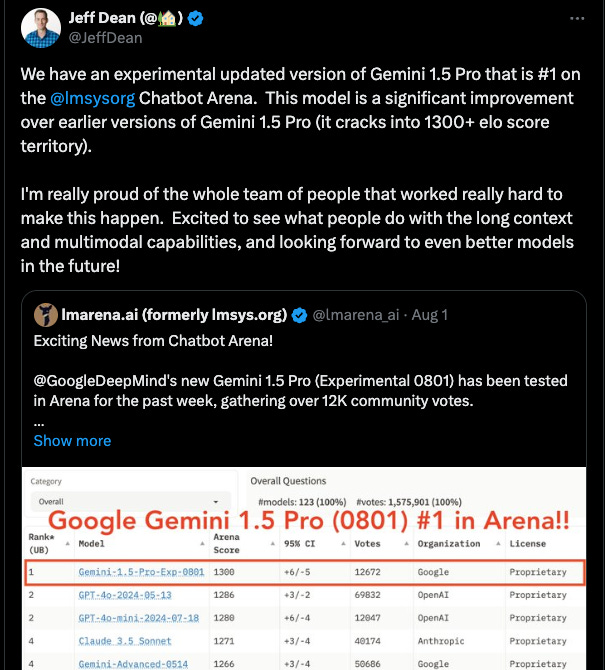

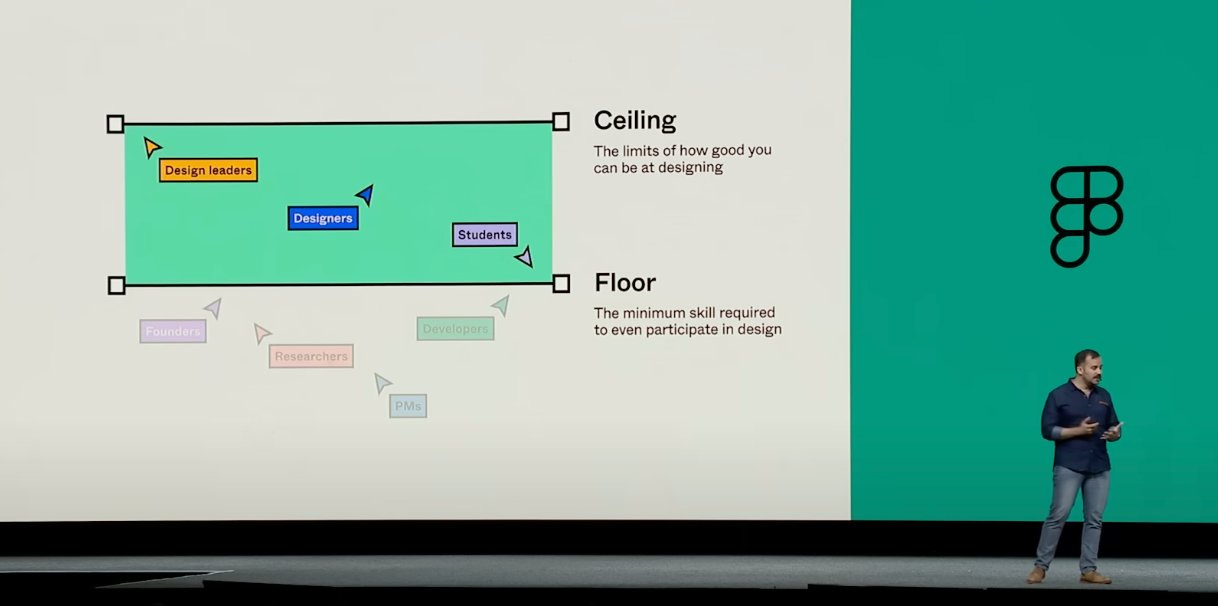

Another high-signal platform for AI Engineers is the LMSys Arena, which asks users to pick the better of two different models’ outputs on the same prompt, and then assigns each model an Elo rating based on the outcomes.
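To make the rating mechanics concrete, here is a minimal sketch of the textbook online Elo update applied to pairwise votes. It is an illustration, not the Arena's actual pipeline: the K-factor of 32, the starting rating of 1000, and the function names are all assumptions chosen for the example.

```python
from typing import Dict, Iterable, Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(
    rating_a: float, rating_b: float, score_a: float, k: float = 32.0
) -> Tuple[float, float]:
    """Return new ratings after one vote.

    score_a is 1.0 if A was preferred, 0.0 if B was preferred, 0.5 for a tie.
    k (assumed value) controls how far a single vote moves the ratings.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    # B's update mirrors A's, so the sum of the two ratings is conserved.
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


def rate_models(
    votes: Iterable[Tuple[str, str, float]], initial: float = 1000.0, k: float = 32.0
) -> Dict[str, float]:
    """Replay (model_a, model_b, score_a) votes in order and return final ratings."""
    ratings: Dict[str, float] = {}
    for model_a, model_b, score_a in votes:
        ra = ratings.setdefault(model_a, initial)
        rb = ratings.setdefault(model_b, initial)
        ratings[model_a], ratings[model_b] = update_elo(ra, rb, score_a, k)
    return ratings


if __name__ == "__main__":
    # Hypothetical votes: model names are placeholders, not real results.
    votes = [("model-x", "model-y", 1.0), ("model-y", "model-z", 0.5)]
    print(rate_models(votes))
```

Note that this online update depends on the order in which votes are replayed, so the same set of votes can produce slightly different ratings if they arrive in a different sequence.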

Clémentine called arenas “sociological experiments”: they tell you a lot about users’ preferences, but not always much about the models’ capabilities. She pointed to Anthropic’s sycophancy paper as early research in this space:

We find that when a response matches a user’s views, it is more likely to be preferred. Moreover, both humans and preference models (PMs) prefer convincingly-written sycophantic responses over correct ones a non-negligible fraction of the time.

The other issue is that Arena rankings aren’t reproducible, as you don’t know who ranked what and what exactly the outcome was at the time of ranking. They are still quite helpful as tools, but they aren’t a rigorous way to rank capabilities of the models.

Her advice for both arenas and leaderboards is to use these tools as ranges: find 3-4 models that fit your needs (speed, cost, capabilities, etc.) and then do vibe checks to figure out which one is best for your specific task.

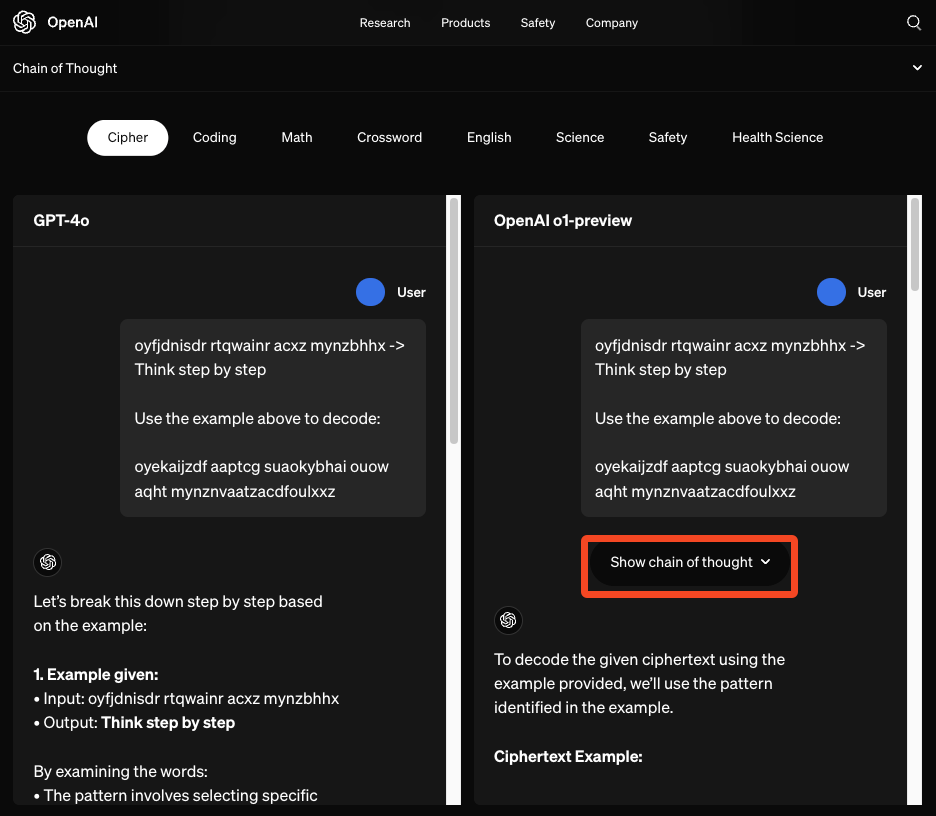

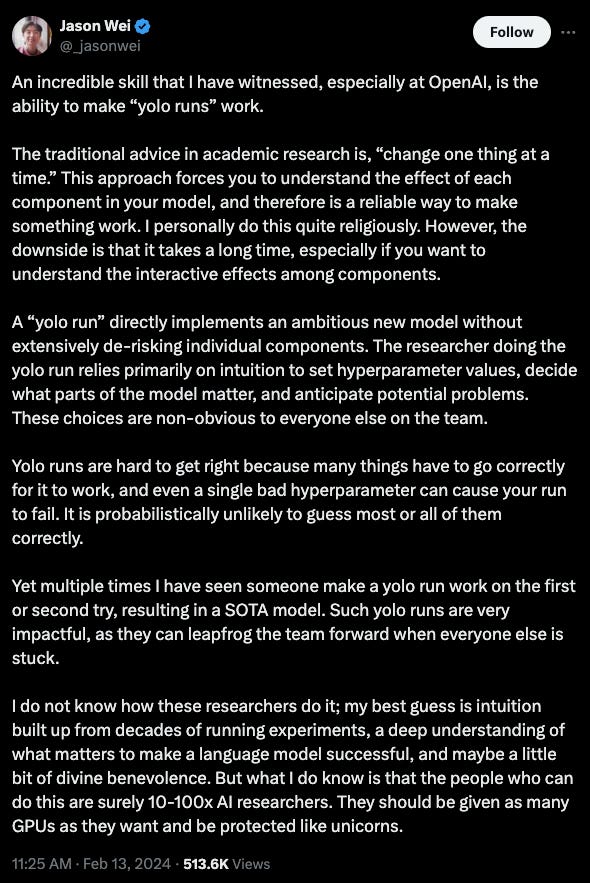

LLMs aren’t good judges

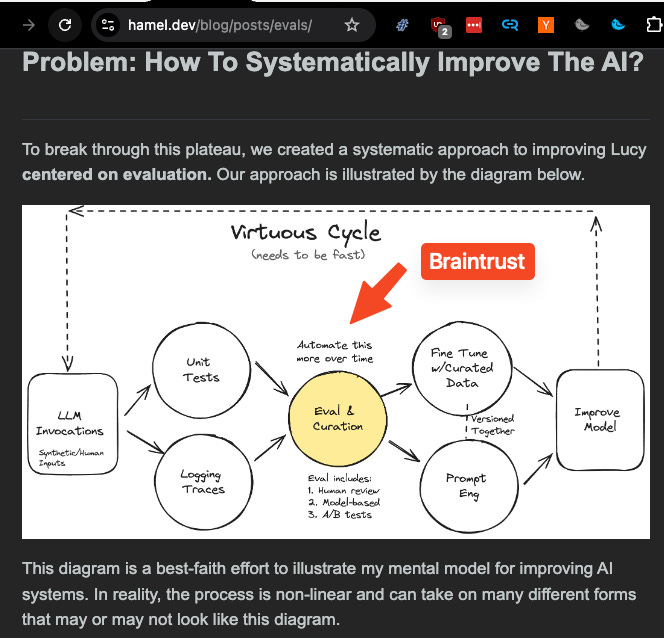

In the last ~6 months, there has been increased interest in using LLMs as judges: rather than asking a person to evaluate a model’s output, you ask a more powerful LLM to score it. We covered this a bit in our Brightwave episode last month as well. HuggingFace also has a cookbook on it, but Clémentine was actually not a fan of this approach:

* Mode collapse: if you ask a model to choose which output is better, it will just reinforce its own preferences. It will also prefer models from its own family (e.g. GPT models will prefer other GPT models’ outputs over Claude’s). If these judgments are then used to fine-tune the model, you push it further into mode collapse. Cohere, for example, has said they do not train on any model-generated data to avoid this.

* Positional bias: LLMs usually prefer the first answer, so you can’t naively hand them a list of options and ask for a ranking; you also have to mix up the order in which the options appear.

* Don’t score, rank: rather than asking a model to assign a score to each output, you should have it stack-rank them. The models aren’t trained to score things, so even though they might understand what response is better, assigning a score to it is hard.
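One way to act on the positional-bias and rank-instead-of-score points is to ask the judge for a pairwise preference in both orders and only accept verdicts that survive the swap. The sketch below assumes a hypothetical `judge` callable that takes a prompt and two answers and returns `"first"` or `"second"`; it stands in for whatever LLM call you use and is not a real library API. Judging both orders is a stricter variant of simply shuffling the order.

```python
from typing import Callable, Optional

# Hypothetical judge signature: (prompt, first_answer, second_answer) -> "first" | "second".
Judge = Callable[[str, str, str], str]


def debiased_preference(
    judge: Judge, prompt: str, answer_a: str, answer_b: str
) -> Optional[str]:
    """Return "A" or "B" if the judge agrees with itself in both orders, else None."""
    first_pass = judge(prompt, answer_a, answer_b)   # A is shown first
    second_pass = judge(prompt, answer_b, answer_a)  # B is shown first

    a_wins_first_pass = first_pass == "first"
    a_wins_second_pass = second_pass == "second"

    if a_wins_first_pass and a_wins_second_pass:
        return "A"
    if not a_wins_first_pass and not a_wins_second_pass:
        return "B"
    # The verdict flipped when the order flipped: treat it as position-driven.
    return None


# Two toy judges for illustration only (no LLM involved).
def always_first(prompt: str, first: str, second: str) -> str:
    """A maximally position-biased judge."""
    return "first"


def longer_wins(prompt: str, first: str, second: str) -> str:
    """A judge whose verdict depends on content (length), not position."""
    return "first" if len(first) >= len(second) else "second"


if __name__ == "__main__":
    print(debiased_preference(always_first, "q", "x", "y"))
    print(debiased_preference(longer_wins, "q", "a long answer", "short"))
```

Discarded (`None`) comparisons cost an extra judge call, but the share of flipped verdicts is itself a useful measure of how position-sensitive a given judge is.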

If you do have to use LLMs as Judges (we aren’t all ScaleAI-rich!), she suggested using an open LLM like Prometheus or JudgeLM to make sure you can reproduce those rankings in the future.

Show Notes

* Let’s talk about LLM Evaluation

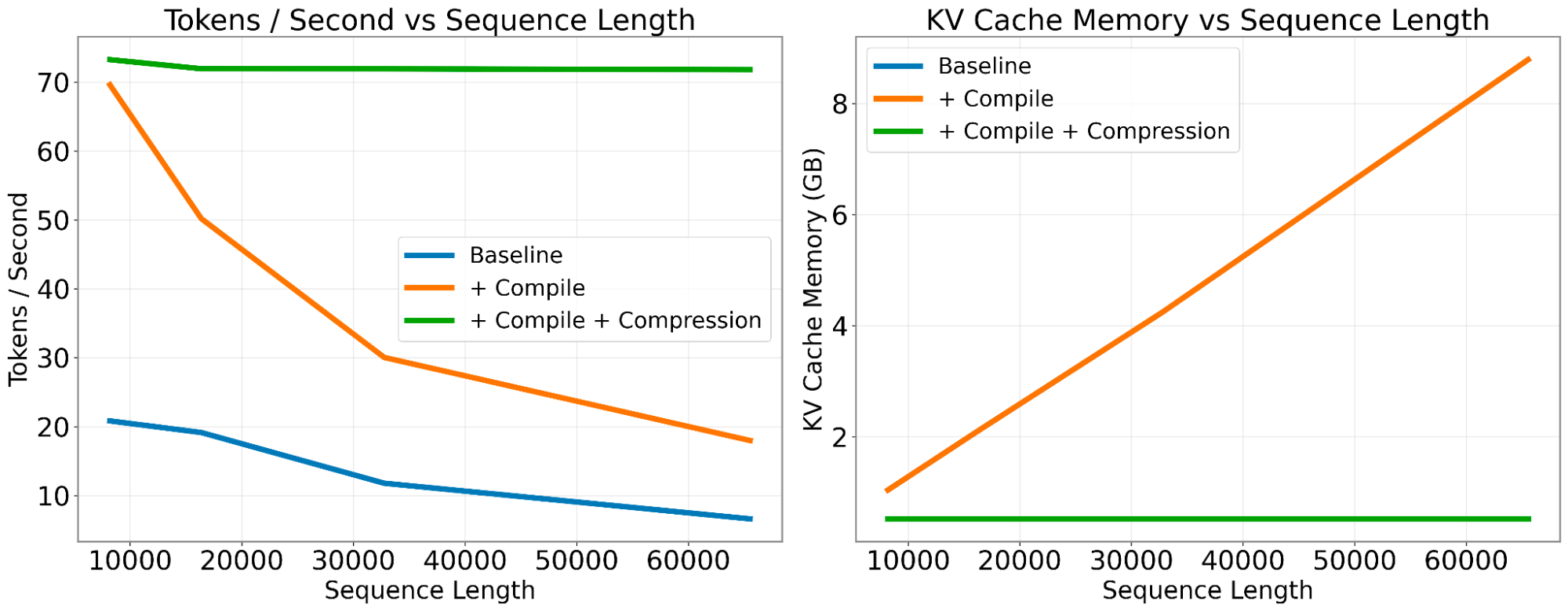

* Gradient AI episode on Long Context Evals

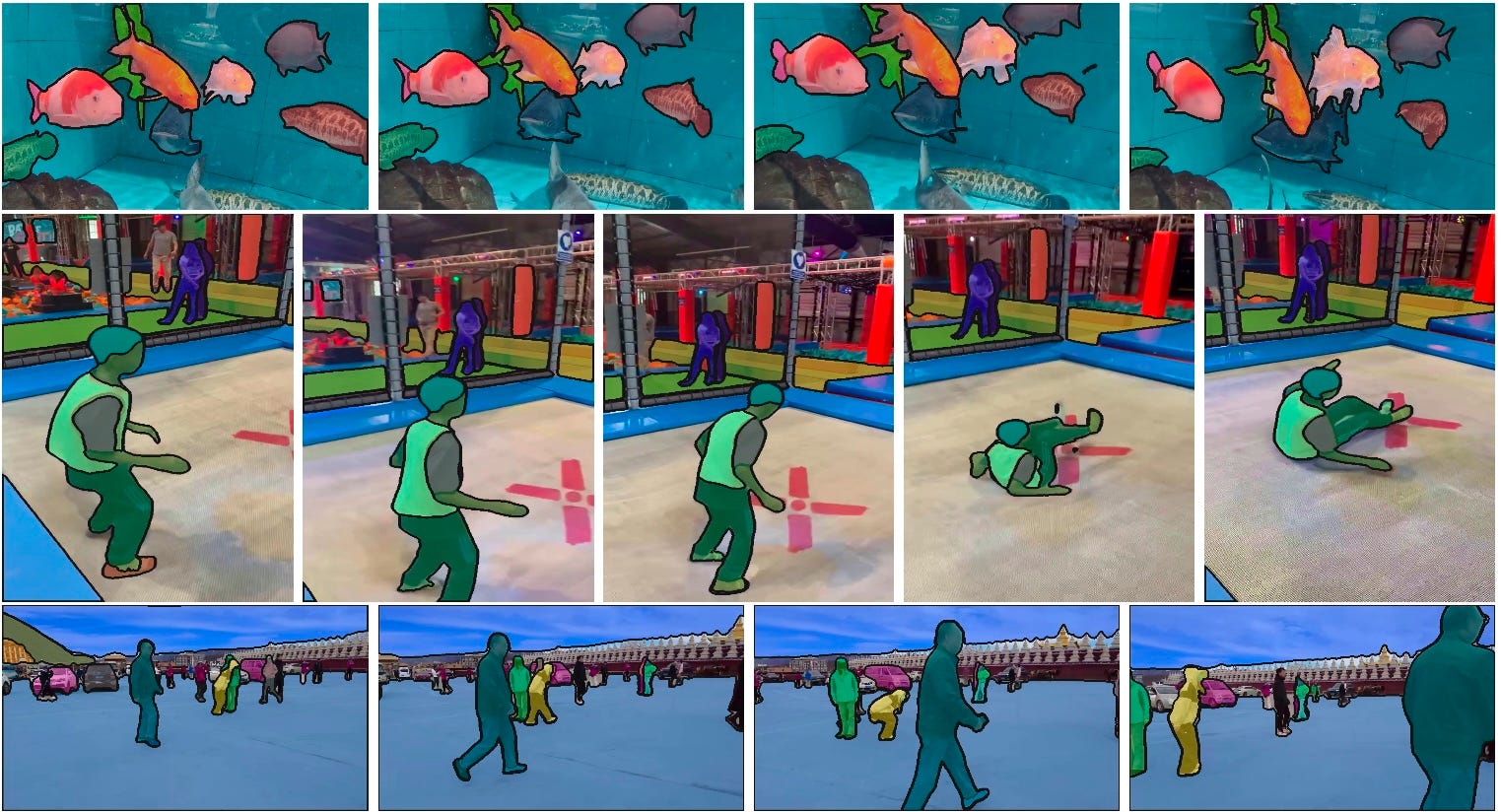

* Allen AI long context novel evals

Companies and Organizations

* Cohere

* INRIA

* ICLR (International Conference on Learning Representations)

People

Projects, Models, and Benchmarks

* Allen Institute ARC Challenge

* BigBench

* GPQA

* GSM8K

* IFEval

* MLPerf

* MMLU

* JudgeLM

* <a target="_blank" href="https://pr