Is finetuning GPT4o worth it? — with Alistair Pullen, Cosine (Genie)

Description

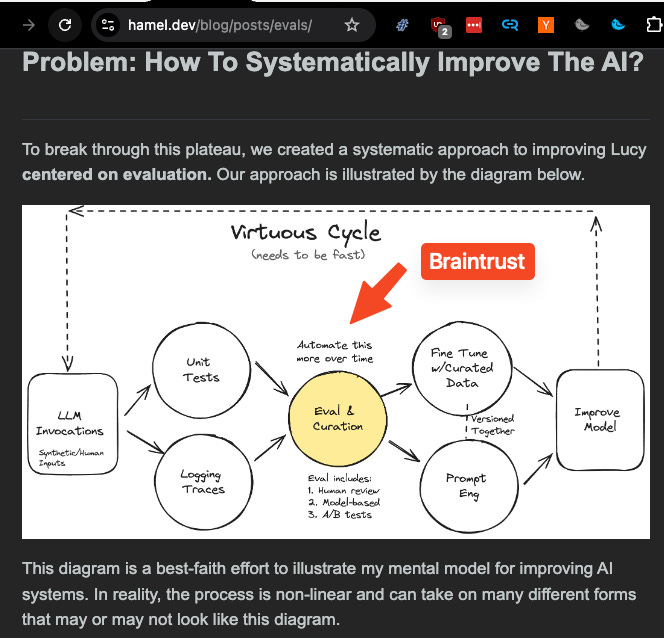

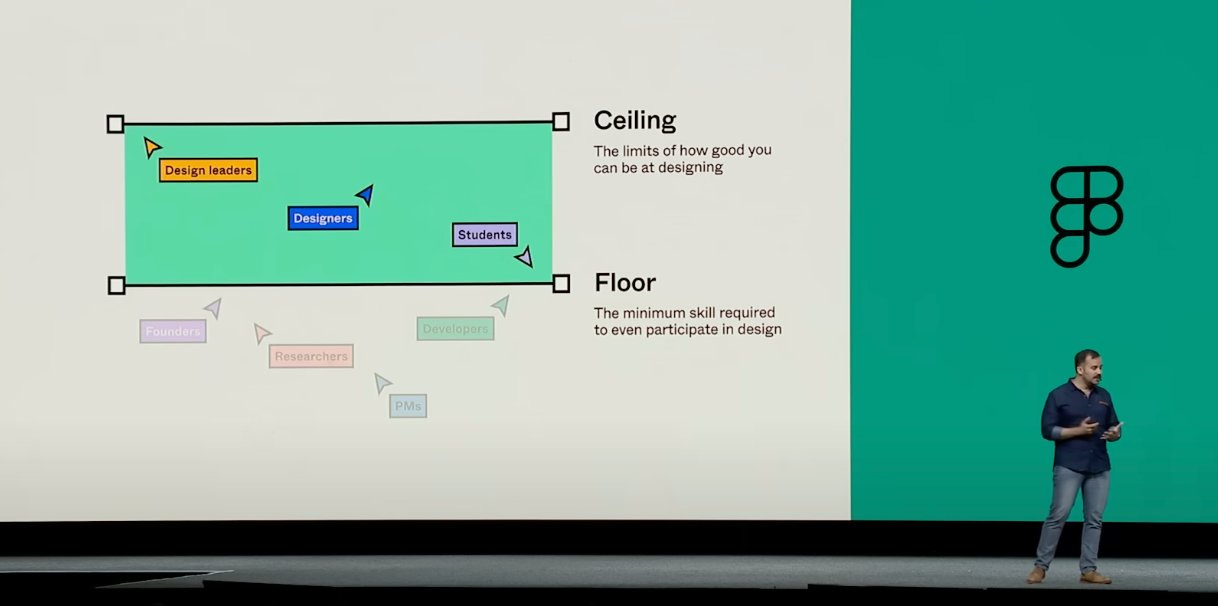

Betteridge's law says no: with seemingly infinite flavors of RAG, and >2 million token context + prompt caching from Anthropic/DeepMind/DeepSeek, it's reasonable to believe that "in-context learning is all you need".

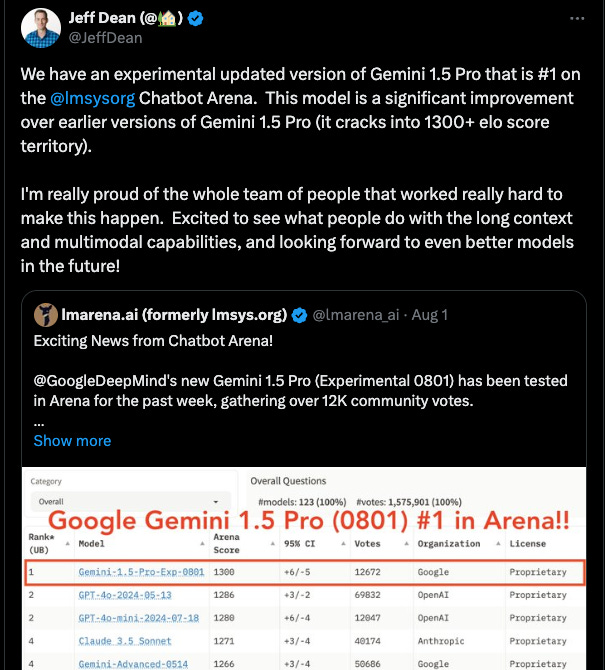

But then there’s Cosine Genie, the first to make a huge bet using OpenAI’s new GPT4o fine-tuning for code at the largest scale it has ever been used externally, resulting in what is now the #1 coding agent in the world according to SWE-Bench Full, Lite, and Verified:

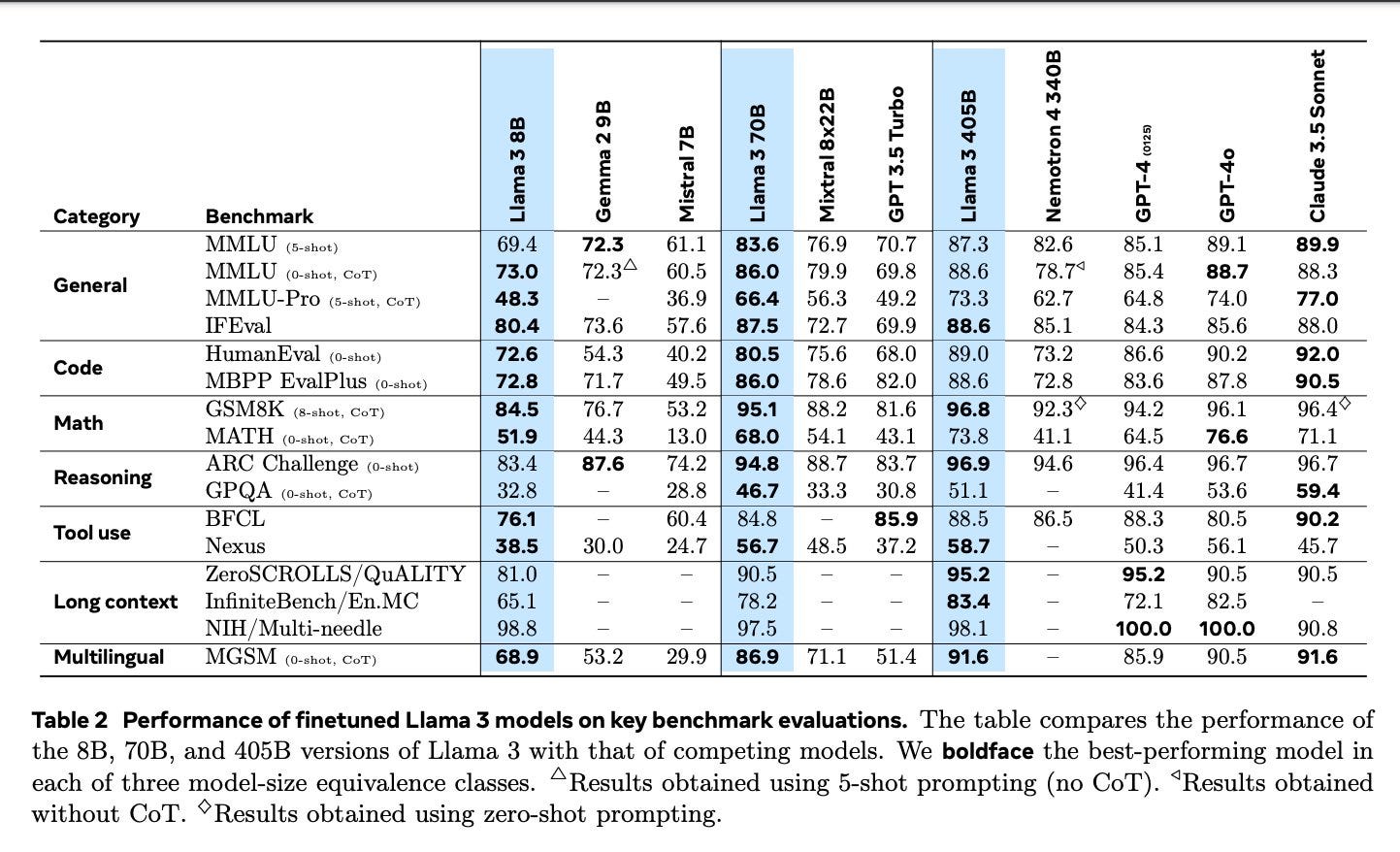

SWE-Bench has been the most successful agent benchmark of the year, receiving honors at ICLR (our interview here) and recently being verified by OpenAI. Cognition (Devin) was valued at $2b after reaching 14% on it. So it is very, very big news when a new agent appears to beat all other solutions, by a lot:

While this number is self-reported, it seems to be corroborated by OpenAI, who also awarded it the clear highest marks on SWE-Bench Verified:

The secret is GPT-4o finetuning on billions of tokens of synthetic data.

* Finetuning: As OpenAI says:

Genie is powered by a fine-tuned GPT-4o model trained on examples of real software engineers at work, enabling the model to learn to respond in a specific way. The model was also trained to be able to output in specific formats, such as patches that could be committed easily to codebases.

Due to the scale of Cosine’s finetuning, OpenAI worked closely with them to figure out the size of the LoRA:

“They have to decide how big your LoRA adapter is going to be… because if you had a really sparse, large adapter, you’re not going to get any signal in that at all. So they have to dynamically size these things.”

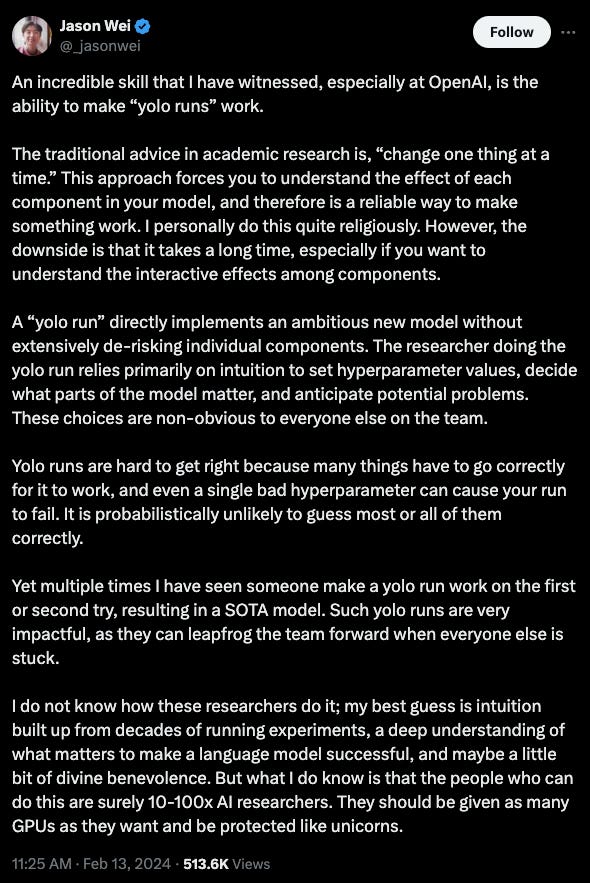
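For intuition on why adapter size matters, here is a rough sketch of how a LoRA adapter's trainable parameter count grows with its rank. The layer width and ranks below are hypothetical values chosen for illustration; how OpenAI actually sizes adapters for GPT-4o is not public, and this is not their method.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters in one LoRA adapter.

    LoRA learns a low-rank update B @ A to a frozen (d_out x d_in) weight,
    where A is (rank x d_in) and B is (d_out x rank).
    """
    return rank * d_in + d_out * rank


def report(d_model: int, ranks: list[int]) -> list[tuple[int, int, float]]:
    """Return (rank, adapter params, share of the full square matrix) per rank."""
    full = d_model * d_model
    rows = []
    for rank in ranks:
        n = lora_params(d_model, d_model, rank)
        rows.append((rank, n, n / full))
    return rows


# Hypothetical layer width and ranks, for illustration only.
rows = report(d_model=4096, ranks=[8, 64, 512])
for rank, n, fraction in rows:
    print(f"rank={rank:4d}  adapter params={n:>10,}  share of full matrix={fraction:.2%}")
```

A larger rank gives the adapter more capacity, but as the quote suggests, an adapter with far more parameters than the training data can inform leaves most of them without useful signal.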

* Synthetic data: we need to finetune on the process of making code work instead of only training on working code.

“…we synthetically generated runtime errors. Where we would intentionally mess with the AST to make stuff not work, or index out of bounds, or refer to a variable that doesn't exist, or errors that the foundational models just make sometimes that you can't really avoid, you can't expect it to be perfect.”

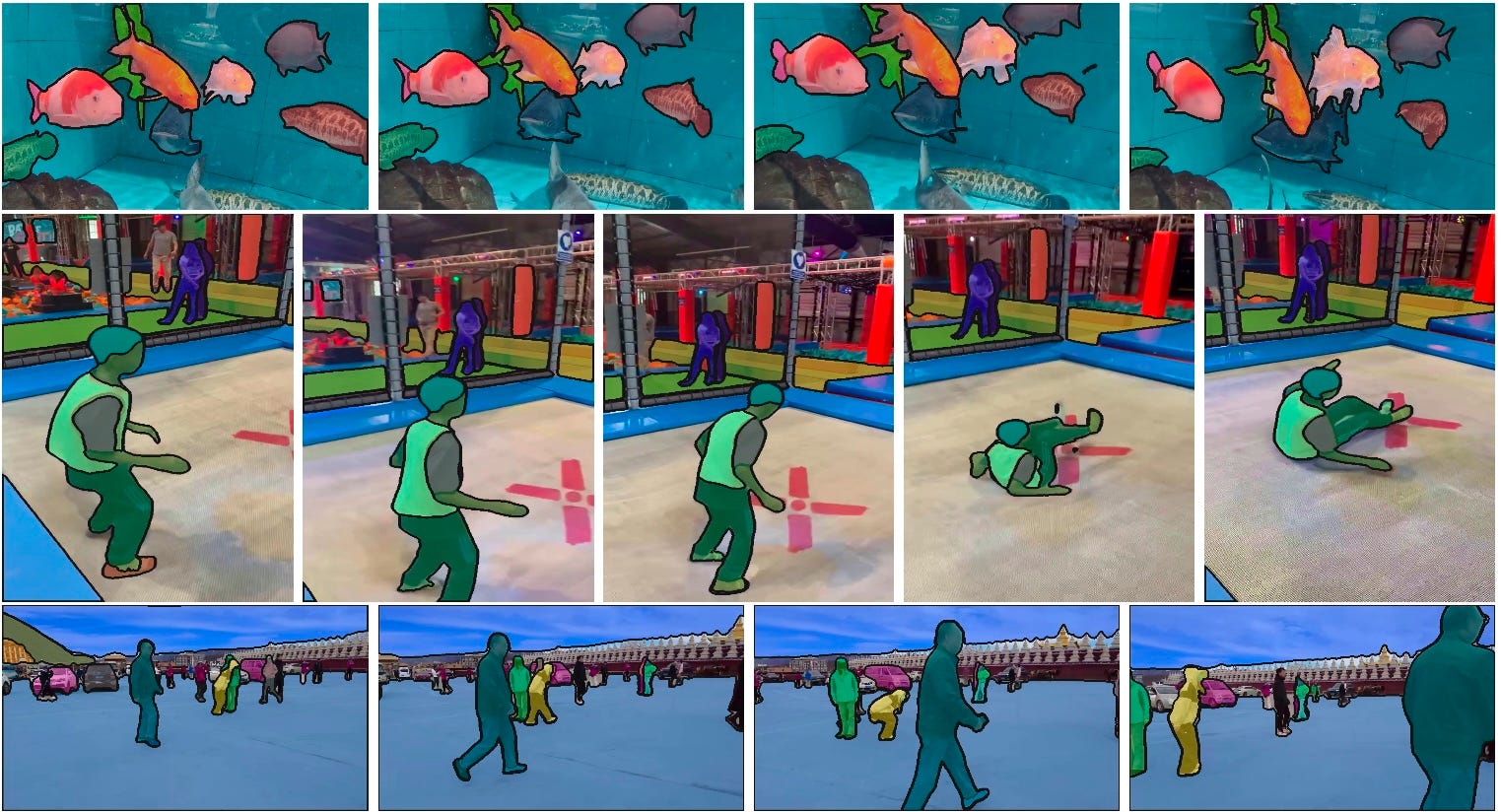
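The idea of deliberately breaking working code through its AST can be sketched as follows. This is a minimal illustration assuming Python 3.9+ and a single off-by-one mutation; the `BreakSubscript` transformer, the helper names, and the training-record layout are all hypothetical and are not Cosine's actual pipeline.

```python
import ast

# A small working function to corrupt (hypothetical sample).
SOURCE = """
def last_item(items):
    return items[len(items) - 1]
"""


class BreakSubscript(ast.NodeTransformer):
    """Turn every simple `x[i]` read into `x[i + 1]`, an off-by-one bug."""

    def visit_Subscript(self, node):
        self.generic_visit(node)
        is_simple_index = not isinstance(node.slice, (ast.Slice, ast.Tuple))
        if isinstance(node.ctx, ast.Load) and is_simple_index:
            node.slice = ast.BinOp(
                left=node.slice, op=ast.Add(), right=ast.Constant(value=1)
            )
        return node


def mutate(source):
    """Parse the source, inject the bug, and return the broken source text."""
    tree = BreakSubscript().visit(ast.parse(source))
    ast.fix_missing_locations(tree)
    return ast.unparse(tree)


def run_and_capture(source, call):
    """Run the source, evaluate the call, and return the error text or None."""
    namespace = {}
    try:
        exec(compile(source, "<synthetic>", "exec"), namespace)
        eval(call, namespace)
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None


def make_example(source, call):
    """Pair broken code and its runtime error with the original working code."""
    broken = mutate(source)
    return {
        "broken_code": broken,
        "error": run_and_capture(broken, call),
        "fixed_code": source,
    }


example = make_example(SOURCE, "last_item([1, 2, 3])")
print(example["broken_code"])
print(example["error"])
```

Each record pairs a failing program and its error message with the working version, which is the kind of "making code work" signal the quote describes, as opposed to training on working code alone.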

Genie also has a four-stage workflow with the standard LLM OS tooling stack that lets it solve problems iteratively:

Full Video Pod

like and subscribe etc!

Show Notes

* Alistair Pullen - Twitter, Linkedin

* Cosine Genie launch, technical report

* Cursor episode and Aman + SWEBench at ICLR episode

Timestamps

* [00:00:00 ] Suno Intro

* [00:05:01 ] Alistair and Cosine intro

* [00:16:34 ] GPT4o finetuning

* [00:20:18 ] Genie Data Mix

* [00:23:09 ] Customizing for Customers

* [00:25:37 ] Genie Workflow

* [00:27:41 ] Code Retrieval

* [00:35:20 ] Planning

* [00:42:29 ] Language Mix

* [00:43:46 ] Running Code

* [00:46:19 ] Finetuning with OpenAI

* [00:49:32 ] Synthetic Code Data

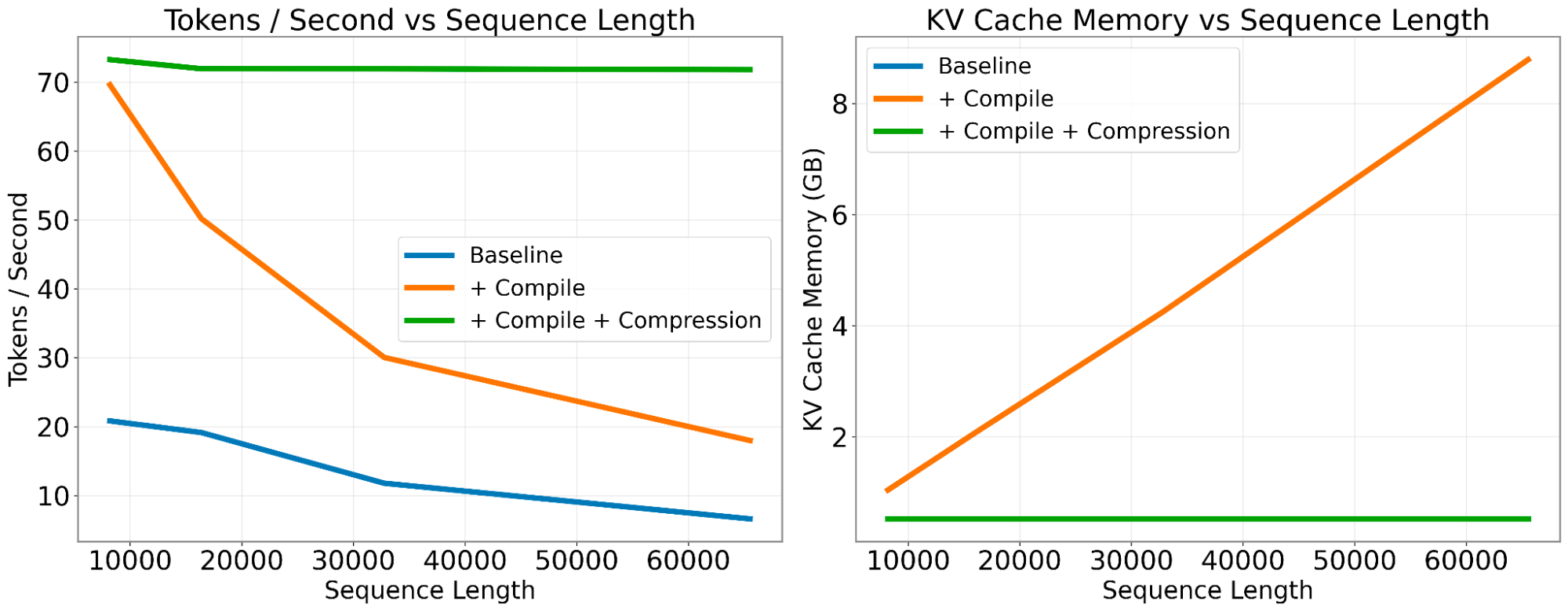

* [00:51:54 ] SynData in Llama 3

* [00:52:33 ] SWE-Bench Submission Process

* [00:58:20 ] Future Plans

* [00:59:36 ] Ecosystem Trends

* [01:00:55 ] Founder Lessons

* [01:01:58 ] CTA: Hiring & Customers

Descript Transcript

[00:01:52 ] AI Charlie: Welcome back. This is Charlie, your AI cohost. As AI engineers, we have a special focus on coding agents, fine-tuning, and synthetic data. And this week, it all comes together with the launch of Cosine's Genie, which reached 50 percent on SWE-Bench Lite, 30 percent on the full SWE-Bench, and 44 percent on OpenAI's new SWE-Bench Verified.

[00:02:17 ] All state of the art results by the widest ever margin recorded compared to former leaders Amazon Q, NUS AutoCodeRover, and Factory Code Droid. As a reminder, Cognition's Devin went viral with a 14 percent score just five months ago. Cosine did this by working closely with OpenAI to fine-tune GPT-4o, now generally available to you and me, on billions of tokens of code, much of which was synthetically generated.

[00:02:47 ] Alistair Pullen: Hi, I'm Ali, co-founder and CEO of Cosine, a human reasoning lab. And I'd like to show you Genie, our state of the art, fully autonomous software engineering colleague. Genie has the highest score on SWE-Bench in the world. And the way we achieved this was by taking a completely different approach. We believe that if you want a model to behave like a software engineer, it has to be shown how a human software engineer works.

[00:03:15 ] We've designed new techniques to derive human reasoning from real examples of software engineers doing their jobs. Our data represents perfect information lineage, incremental knowledge discovery, and step-by-step decision making, representing everything a human engineer does logically. By actually training Genie on this unique dataset, rather than simply prompting base models, which is what everyone else is doing, we've seen that we're no longer simply generating random code until some works.

[00:03:46 ] It's tackling problems like a human.

[00:03:48 ] AI Charlie: Alistair Pullen is CEO and co-founder of Cosine, and we managed to snag him on a brief trip stateside for a special conversation on building the world's current number one coding agent. Watch out and take care.

[00:04:07 ] Alessio: Hey everyone, welcome to the Latent Space Podcast. This is Alessio, partner and CTO-in-Residence at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol.ai.

[00:04:16 ] swyx: Hey, and today we're back in the studio, in person, after about three to four months in visa jail and travels and all other fun stuff that we talked about in the previous episode.

[00:04:27 ] But today we have a special guest, Ali Pullen from Cosine. Welcome.

Alistair Pullen: Hi, thanks for having me.

swyx: We're very lucky to have you because you're on a two-day trip to San Francisco.

[00:04:38 ] Alistair Pullen: Yeah, I wouldn't recommend it. I would not recommend it. Don't fly from London to San Francisco for two days.

[00:04:40 ] swyx: And you launched Genie on a plane.

[00:04:42 ] On plane Wi-Fi, um, claiming state of the art on SWE-Bench, which we're all going to talk about. I'm excited to dive into your whole journey, because it has been a journey. I've been lucky to be a small angel in part of that journey. And it's exciting to see that you're launching to such acclaim and, you know, such results.

[00:05:01 ] Alistair and Cosine intro

[00:05:01 ] swyx: Um, so I'll go over your brief background, and then you can sort of fill in the blanks on what else people should know about you. You did your bachelor's in computer science at Exeter.

[00:05:10 ] Alistair Pullen: Yep.

[00:05:10 ] swyx: And then you worked at a startup that got acquired by GoPuff, and around 2022, you started working on a stealth startup that became a YC startup.

[00:05:19 ] What's that?

[00:05:21 ] Alistair Pullen: Yeah. So basically when I left university, I met my now co-founder, Sam. At the time we were both mobile devs. He was an Android developer, I was an iOS developer. And whilst at university, we built this sort of small consultancy. We'd be approached to build projects for people and we would just take them up, and to start with, they were student projects.

[00:05:41 ] They weren't, they weren't anything crazy or anything big. We started with those and over time we started doing larger and larger projects, more interesting things. And then actually, when we left university, we just kept doing that. We didn't really get jobs, traditional jobs. It was also like in the middle of COVID, middle of lockdown.

[00:05:57 ] So we were like, this is a pretty good gig. We'll just keep like writing code in our bedrooms. And yeah, that's it. We did that for a while. And then a friend of ours that we went to Exeter with started a YC startup during COVID. And it was one of these f