The 10,000x Yolo Researcher Metagame — with Yi Tay of Reka

Description

Livestreams for the AI Engineer World’s Fair (Multimodality ft. the new GPT-4o demo, GPUs and Inference (ft. Cognition/Devin), CodeGen, Open Models tracks) are now live! Subscribe to @aidotEngineer to get notifications of the other workshops and tracks!

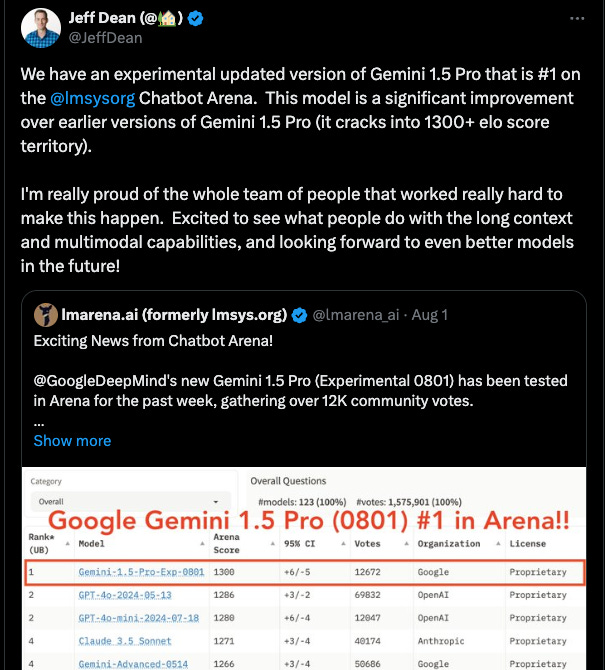

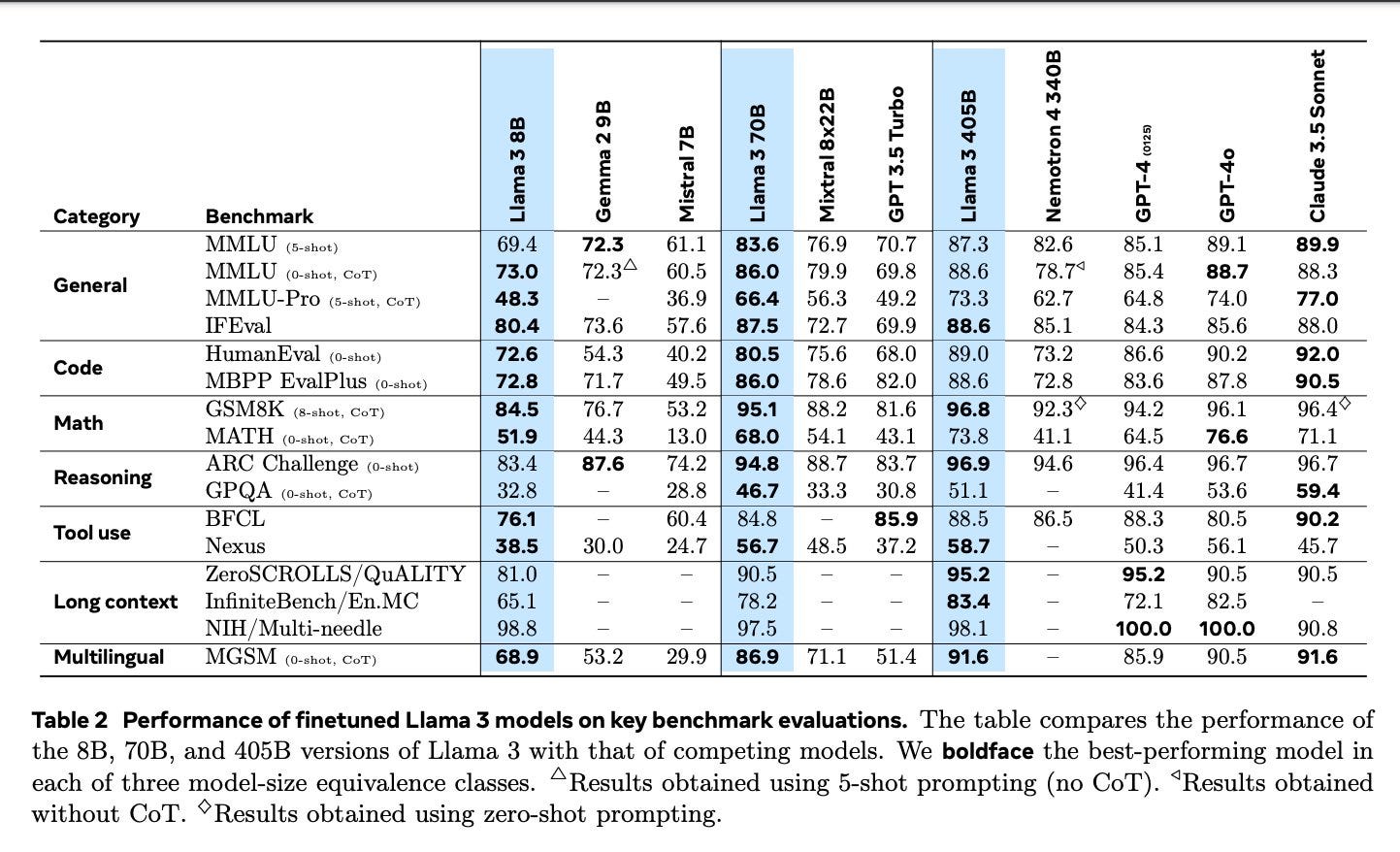

It’s easy to get desensitized to new models topping leaderboards every other week. However, the top of the LMsys leaderboard has typically been the exclusive domain of very large, very well-funded model labs like OpenAI, Anthropic, Google, and Meta. OpenAI had about 600 people at the time of GPT-4, and Google Gemini had 950 co-authors. This is why Reka Core made waves in May: it not only debuted at #7 on the leaderboard, but did so with all-new GPU infrastructure, 20 employees (fewer than 5 of them on pre-training), and a relatively puny $60m in funding.

Shortly after the release of GPT-3, Sam Altman speculated on the qualities of “10,000x researchers”:

* “They spend a lot of time reflecting on some version of the Hamming question—"what are the most important problems in your field, and why aren’t you working on them?” In general, no one reflects on this question enough, but the best people do it the most, and have the best ‘problem taste’, which is some combination of learning to think independently, reason about the future, and identify attack vectors.” — sama

* Taste is something both John Schulman and Yi Tay emphasize greatly

* “They have a laser focus on the next step in front of them combined with long-term vision.” — sama

* “They are extremely persistent and willing to work hard… They have a bias towards action and trying things, and they’re clear-eyed and honest about what is working and what isn’t” — sama

“There's a certain level of sacrifice to be an AI researcher, especially if you're training LLMs, because you cannot really be detached… your jobs could die on a Saturday at 4am, and there are people who will just leave it dead until Monday morning, or there will be people who will crawl out of bed at 4am to restart the job, or check the TensorBoard” – Yi Tay (at 28 mins)

“I think the productivity hack that I have is, I didn't have a boundary between my life and my work for a long time. So I think I just cared a lot about working most of the time. Actually, during my PhD, Google and everything [else], I'll be just working all the time. It's not like the most healthy thing, like ever, but I think that that was actually like one of the biggest, like, productivity, like and I spent, like, I like to spend a lot of time, like, writing code and I just enjoy running experiments, writing code” — Yi Tay (at 90 mins)

* See @YiTayML example for honest alpha on what is/is not working

and so on.

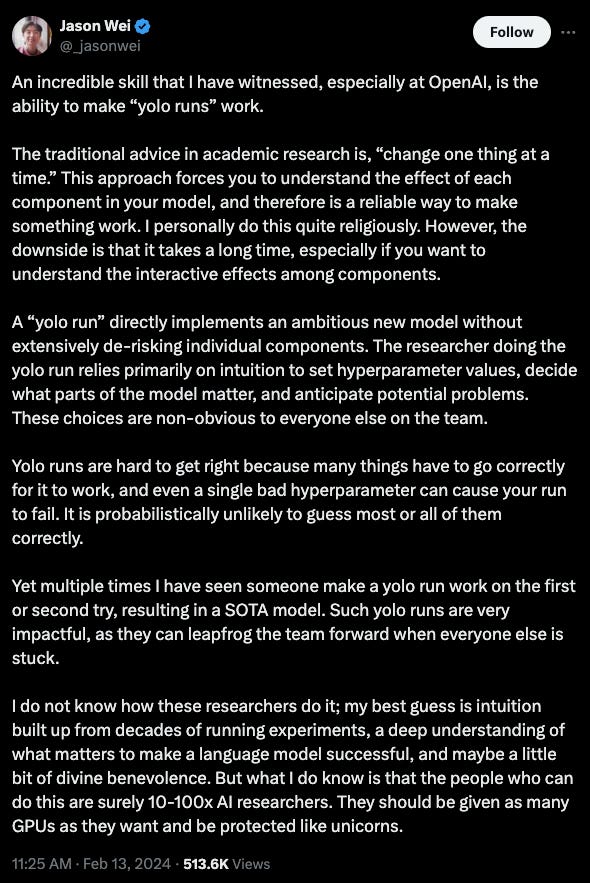

More recently, Yi’s frequent co-author, Jason Wei, wrote about the existence of Yolo researchers he witnessed at OpenAI.

Given the very aggressive timeline — Yi left Google in April 2023, was GPU constrained until December 2023, and then Reka Flash (21B) was released in Feb 2024, and Reka Core (??B) was released in April 2024 — Reka’s 3-5 person pretraining team had no other choice but to do Yolo runs. Per Yi:

“Scaling models systematically generally requires one to go from small to large in a principled way, i.e., run experiments in multiple phases (1B->8B->64B->300B etc) and pick the winners and continuously scale them up. In a startup, we had way less compute to perform these massive sweeps to check hparams. In the end, we had to work with many Yolo runs (that fortunately turned out well).

In the end it took us only a very small number of smaller scale & shorter ablation runs to get to the strong 21B Reka Flash and 7B edge model (and also our upcoming largest core model). Finding a solid recipe with a very limited number of runs is challenging and requires changing many variables at once given the ridiculously enormous search space. In order to do this, one has to abandon the systematicity of Bigtech and rely a lot on “Yolo”, gut feeling and instinct.”

We were excited to be the first podcast to interview Yi, and recommend reading our extensive show notes to follow the same papers we reference throughout the conversation.

Special thanks to Terence Lee of TechInAsia, who are launching their own AI newsletter called The Prompt, for the final interview clip!

Full Video Podcast

Show Notes

* Yi on LinkedIn, Twitter, Personal

* Building frontier AI teams as GPU Poors

* Yi’s Research

  * 2020

    * Efficient Transformers: A Survey went viral!

    * Long Range Arena: A Benchmark for Efficient Transformers in 2020

  * 2021: Generative Models are Unsupervised Predictors of Page Quality: A Colossal-Scale Study

  * 2022:

    * UL2: Unifying Language Learning Paradigms

    * Emergent Abilities of Large Language Models vs the Mirage paper

    * Recitation Augmented generation

    * DSI++: Updating Transformer Memory with New Documents

    * The Efficiency Misnomer: “a model with low FLOPs may not actually be fast, given that FLOPs does not take into account information such as degree of parallelism (e.g., depth, recurrence) or hardware-related details like the cost of a memory access”

  * 2023: Flan-{PaLM/UL2/T5}

    * 1.8k tasks for instruction tuning

* Encoder-decoder vs Decoder only

  * Latent Space Discord discussion on enc-dec vs dec-only

  * Related convo with Yi Tay vs Yann LeCun

  * @teortaxes:
