Why you should write your own LLM benchmarks — with Nicholas Carlini, Google DeepMind

Description

Today's guest, Nicholas Carlini, a research scientist at DeepMind, argues that we should be focusing more on what AI can do for us individually, rather than trying to have an answer for everyone.

"How I Use AI" - A Pragmatic Approach

Carlini's blog post "How I Use AI" went viral for good reason. Instead of giving a personal opinion about AI's potential, he simply laid out how he, as a security researcher, uses AI tools in his daily work. He divided it into 12 sections:

* To make applications

* As a tutor

* To get started

* To simplify code

* For boring tasks

* To automate tasks

* As an API reference

* As a search engine

* To solve one-offs

* To teach me

* Solving solved problems

* To fix errors

Each section has specific examples, so we recommend reading the whole post. It also includes all the prompts he used; in the "make applications" case, that's 30,000 words total!

My personal takeaway is that most of the work AI can do successfully is work humans dislike doing: writing boilerplate code, looking up docs, taking repetitive actions, etc. These are usually boring tasks with little creativity but a lot of structure. This is one of the strongest arguments for why LLMs, especially for code, are more beneficial to senior employees: if you can get the boring stuff out of the way, there's a lot more value you can generate. This is less and less true as you move toward entry-level jobs, which are mostly boring and repetitive tasks. Nicholas argues both sides ~21:34 in the pod.

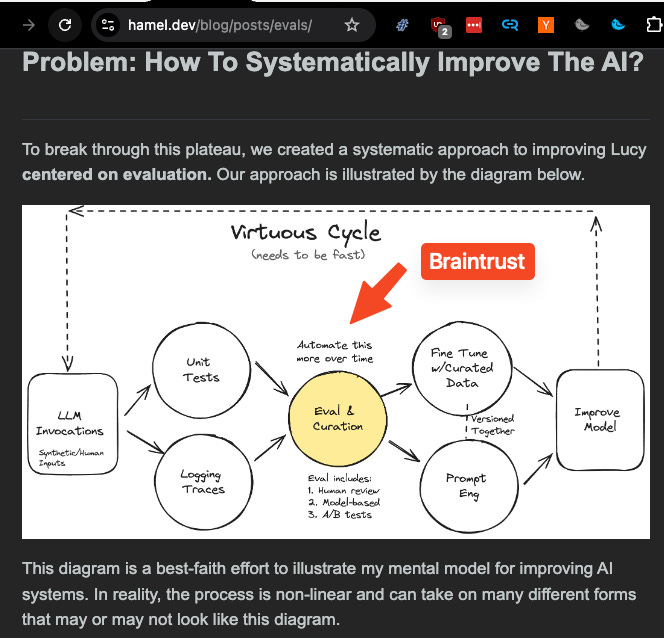

A New Approach to LLM Benchmarks

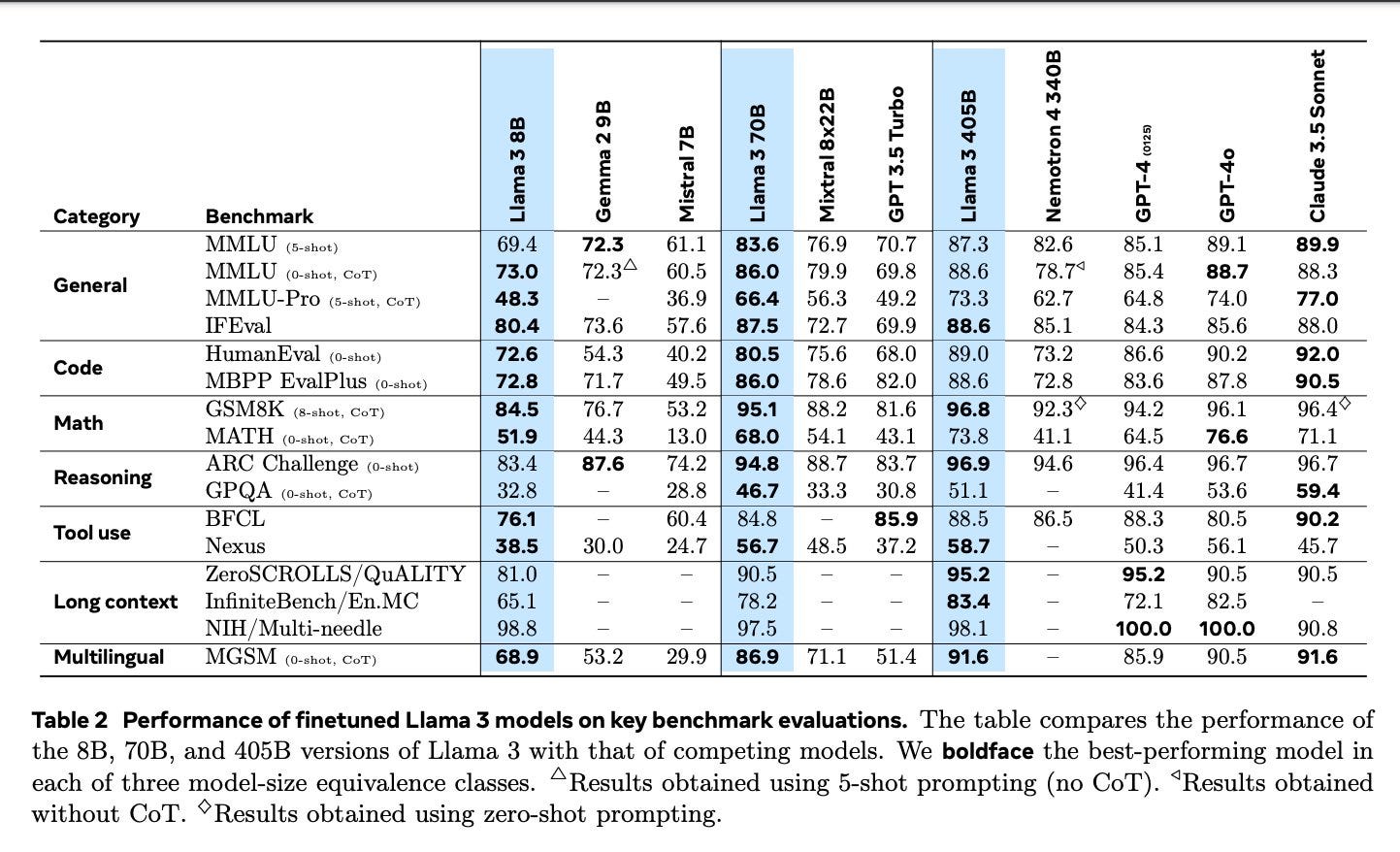

We recently did a Benchmarks 201 episode, a follow-up to our original Benchmarks 101, and some of the issues have stayed the same. Notably, there's a big discrepancy between what benchmarks like MMLU test and what the models are actually used for. Carlini created his own domain-specific language for writing personalized LLM benchmarks. The idea is simple but powerful:

* Take tasks you've actually needed AI for in the past.

* Turn them into benchmark tests.

* Use these to evaluate new models based on your specific needs.
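The three steps above can be sketched as a tiny comparison harness. This is a minimal illustration, not Carlini's tooling: it assumes each model is a plain Python callable from prompt to reply, and `old_model`, `new_model`, and the two test cases are hypothetical stand-ins for real API clients and real tasks.

```python
from typing import Callable, Dict, List, Tuple

# A test case pairs a prompt with a checker that inspects the model's reply.
TestCase = Tuple[str, Callable[[str], bool]]
Model = Callable[[str], str]


def pass_rate(model: Model, cases: List[TestCase]) -> float:
    """Fraction of test cases whose checker accepts the model's reply."""
    if not cases:
        return 0.0
    passed = sum(1 for prompt, check in cases if check(model(prompt)))
    return passed / len(cases)


def compare(models: Dict[str, Model], cases: List[TestCase]) -> Dict[str, float]:
    """Run every model on the same personal test cases."""
    return {name: pass_rate(fn, cases) for name, fn in models.items()}


# Hypothetical stand-ins; a real setup would call a model API here.
def old_model(prompt: str) -> str:
    return "I'm not sure."


def new_model(prompt: str) -> str:
    if "hello world" in prompt:
        return 'print("hello world")'
    return "I'm not sure."


# Tasks you actually needed help with, turned into checks.
cases: List[TestCase] = [
    ("Write hello world in python", lambda reply: "print(" in reply),
    ("What is the capital of France?", lambda reply: "Paris" in reply),
]

scores = compare({"old_model": old_model, "new_model": new_model}, cases)
print(scores)
```

Because the cases come from your own history, the resulting numbers only say which model is better for you, which is the point.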

The DSL can express anything from a single code-generation check to a multi-step task like drawing a US flag in C:

"Write hello world in python" >> LLMRun() >> PythonRun() >> SubstringEvaluator("hello world")

"Write a C program that draws an american flag to stdout." >> LLMRun() >> CRun() >> VisionLLMRun("What flag is shown in this image?") >> (SubstringEvaluator("United States") | SubstringEvaluator("USA"))

This approach solves a few problems:

* It measures what's actually useful to you, not abstract capabilities.

* It's harder for model creators to "game" your specific benchmark, a problem that has plagued standardized tests.

* It gives you a concrete way to decide if a new model is worth switching to, similar to how developers might run benchmarks before adopting a new library or framework.

Carlini argues that if even a small percentage of AI users created personal benchmarks, we'd have a much better picture of model capabilities in practice.
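For readers curious how the `>>` and `|` syntax in the examples above can work in plain Python, here is a minimal sketch built on operator overloading. It is an illustration under stated assumptions, not Carlini's actual implementation: the `Stage`, `Chain`, `Constant`, and `Either` classes are invented for this sketch, `FakeLLMRun` returns a canned program instead of calling a model, and `PythonRun` and `SubstringEvaluator` only borrow their names from the examples.

```python
import subprocess
import sys


class Stage:
    """One pipeline step. run() takes the previous output, returns (output, passed)."""

    def run(self, value=None):
        raise NotImplementedError

    def __rshift__(self, other):
        # stage >> stage: feed this stage's output into the next one.
        return Chain(self, other)

    def __rrshift__(self, prompt):
        # "prompt" >> stage: str cannot >> a Stage, so Python falls back to
        # this reflected method on the right-hand operand.
        return Chain(Constant(prompt), self)

    def __or__(self, other):
        # evaluator | evaluator: pass if either side passes.
        return Either(self, other)


class Constant(Stage):
    def __init__(self, text):
        self.text = text

    def run(self, value=None):
        return self.text, True


class Chain(Stage):
    def __init__(self, first, second):
        self.first, self.second = first, second

    def run(self, value=None):
        out, ok = self.first.run(value)
        if not ok:
            return out, False  # stop at the first failing step
        return self.second.run(out)


class Either(Stage):
    def __init__(self, left, right):
        self.left, self.right = left, right

    def run(self, value=None):
        return value, self.left.run(value)[1] or self.right.run(value)[1]


class FakeLLMRun(Stage):
    """Hypothetical stand-in for a model call: ignores the prompt."""

    def __init__(self, canned_reply):
        self.canned_reply = canned_reply

    def run(self, value=None):
        return self.canned_reply, True


class PythonRun(Stage):
    def run(self, value=None):
        # Run the generated code in a separate interpreter and capture stdout.
        proc = subprocess.run([sys.executable, "-c", value],
                              capture_output=True, text=True, timeout=30)
        return proc.stdout, proc.returncode == 0


class SubstringEvaluator(Stage):
    def __init__(self, needle):
        self.needle = needle

    def run(self, value=None):
        return value, self.needle in value


test = (
    "Write hello world in python"
    >> FakeLLMRun('print("hello world")')
    >> PythonRun()
    >> SubstringEvaluator("hello world")
)
output, passed = test.run()
print(passed)
```

Since `|` binds more loosely than `>>` in Python, alternative evaluators need their own parentheses, which is why the flag example wraps the two `SubstringEvaluator` calls in a group.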

AI Security

While much of the AI security discussion focuses on either jailbreaks or existential risks, Carlini's research targets the space in between. Some highlights from his recent work:

* LAION 400M data poisoning: By buying expired domains referenced in the dataset, Carlini's team could inject arbitrary images into models trained on LAION 400M. You can read the paper "Poisoning Web-Scale Training Datasets is Practical" for all the details. This is a great example of expanding the scope beyond the model itself and looking at the whole system and how it can become vulnerable.

* Stealing model weights: They demonstrated how to extract parts of production language models (like OpenAI's) through careful API queries. This research, "Stealing Part of a Production Language Model", shows that even black-box access can leak sensitive information.

* Extracting training data: In some cases, they found ways to make models regurgitate verbatim snippets from their training data. He and Milad Nasr wrote a paper on this as well: "Scalable Extraction of Training Data from (Production) Language Models". They also think this might be applicable to extracting RAG results from a generation.

These aren't just theoretical attacks. They've led to real changes in how companies like OpenAI design their APIs and handle data. If you really miss logit_bias and logit results by token, you can blame Nicholas :)

We also had a ton of fun chatting about things like Conway's Game of Life, how much data can fit on a piece of paper, and porting Doom to JavaScript. Enjoy!

Show Notes

* Tic-Tac-Toe in one printf statement

* International Obfuscated C Code Contest

* Cursor

* uuencode

Timestamps

* [00:00:00 ] Introductions

* [00:01:14 ] Why Nicholas writes

* [00:02:09 ] The Game of Life

* [00:05:07 ] "How I Use AI" blog post origin story

* [00:08:24 ] Do we need software engineering agents?

* [00:11:03 ] Using AI to kickstart a project

* [00:14:08 ] Ephemeral software

* [00:17:37 ] Using AI to accelerate research

* [00:21:34 ] Experts vs non-expert users as beneficiaries of AI

* [00:24:02 ] Research on generating less secure code with LLMs

* [00:27:22 ] Learning and explaining code with AI

* [00:30:12 ] AGI speculations?

* [00:32:50 ] Distributing content without social media

* [00:35:39 ] How much data do you think you can put on a single piece of paper?

* [00:37:37 ] Building personal AI benchmarks

* [00:43:04 ] Evolution of prompt engineering and its relevance

* [00:46:06 ] Model vs task benchmarking

* [00:52:14 ] Poisoning LAION 400M through expired domains

* [00:55:38 ] Stealing OpenAI models from their API

* [01:01:29 ] Data stealing and recovering training data from models

* [01:03:30 ] Finding motivation in your work

Transcript

Alessio [00:00:00 ]: Hey everyone, welcome to the Latent Space podcast. This is Alessio, partner and CTO-in-Residence at Decibel Partners, and I'm joined by my co-host Swyx, founder of Smol AI.

Swyx [00:00:12 ]: Hey, and today we're in the in-person studio, which Alessio has gorgeously set up for us, with Nicholas Carlini. Welcome. Thank you. You're a research scientist at DeepMind. You work at the intersection of machine learning and computer security. You got your PhD from Berkeley in 2018, and also your BA from Berkeley as well. And mostly we're here to talk about your blogs, because you are so generous in just writing up what you know. Well, actually, why do you write?

Nicholas [00:00:41 ]: Because I like, I feel like it's fun to share what you've done. I don't like writing. I sufficiently didn't like writing that I almost didn't do a PhD, because I knew how much writing was involved in writing papers. I was terrible at writing when I was younger. I did, like, the remedial writing classes when I was in university, because I was really bad at it. So I don't actually enjoy, I still don't enjoy the act of writing. But I feel like it is useful to share what you're doing, and I like being able to talk about the things that I'm doing that I think are fun. And so I write because I think I want to have something to say, not because I enjoy the act of writing.

Swyx [00:01:14 ]: But yeah. It's a tool for thought, as they often say. Is there any sort of backgrounds or thing that people should know about you as a person? Yeah.

Nicholas [00:01:23 ]: So I